- CommandBuffer

- The CommandBuffer version of PPSv2

- AmbientOcclusion

- ScreenSpaceReflections

- DepthOfField

- AutoExposure

- Bloom

- Vignette

- ColorGrading

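Because I changed jobs, this blog went quiet for almost half a year. I moved from Perfect World to Shanda Games, and from an engine team to a project team, which counts as a small turning point in my career. Luckily it was a soft landing, because on the project team I am still responsible for engine-side work such as rendering and optimization.

I have now been at Shanda for three months. Having just wrapped up a small project milestone, I want to look back and sort through these busy three months. Three months is neither long nor short, but life on a project team is much busier and more fulfilling than on an engine team. A lot of my technical groundwork has been put to real use in the project, and it sometimes demands more attention to detail than before. There are many topics worth writing up, and in April I also gave a company-wide talk on PBR at Shanda Games, but today I want to talk about Unity's official post-processing bundle, PostProcessStack V2. Yes, V2.

The user guide on the official site still covers V1. But as programmers in an industry where technology turns over this quickly, we only look at the newest version. The official site also links to the V2 GitHub repository, and according to the git log, work on V2 started on 2016.10.10, so sooner or later V2 will replace V1. Besides, I actually compared V1 and V2: the post-processing algorithms differ little, but on the render-pipeline side V2 uses the newer CommandBuffer and SRP technologies. As a graphics programmer I am very interested in both, and I firmly believe they are the future direction of Unity's render pipeline. V1, by contrast, is not much different from the post-processing we used to write ourselves, so I dropped V1, went straight to V2, and used it in our project.

A plugin can of course be used as a black box, but V2 is still unfinished and we ran into quite a few problems while using it. On top of that, there are countless post-processing algorithms and PPSv2 only ships a subset of them. So if you want to borrow the PPSv2 framework for custom effects, you still need to read the PPSv2 code in depth.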

CommandBuffer

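Since V2 uses CommandBuffer and SRP, let's start with CommandBuffer.

Anyone who has studied low-level graphics knows that the GPU works through a rendering pipeline. For the OpenGL ES pipeline, see my earlier post "OpenGL ES 2.0 Walkthrough (1): OpenGL ES 2.0 Overview". In short, the OpenGL ES API drives the whole pipeline, and shaders are plugged into it as one of its stages. Before game engines existed, or at the bottom layer of a game engine, every draw is completed through this pipeline. Game engines, however, wrap these low-level APIs to lower the barrier to game development, so that developers no longer need to care about the pipeline. They only need to pass the information the pipeline needs through the exposed interfaces. In Unity, for example, ShaderLab lets you pass in Blend state, Cull state, shader source, and so on.

And here is the problem: the wrapping lowers the barrier, but it also turns this module into a black box. Developers cannot do much customization, and the programmable pipeline effectively becomes a fixed pipeline. Unity does offer several Rendering Paths, but these are only a few classic rendering flows, and mobile platforms today almost all use the Forward rendering path anyway. That is why CommandBuffer and SRP are a major breakthrough for Unity.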

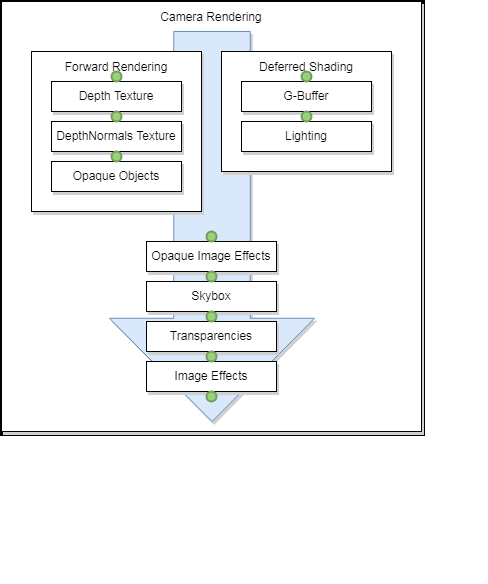

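Unity splits the work of rendering each frame into stages and defines a set of points between them. At those points you can use a command buffer to inject commands (setting a render target, drawing some objects, and so on). In deferred rendering, for example, you can draw extra objects into the G-Buffer right after it has been filled. The figure above shows Unity's rendering order, and the points marked in green are where a command buffer can be inserted to run custom commands.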

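The injection points are set through two APIs, Camera.AddCommandBuffer and Light.AddCommandBuffer. Looking at their parameters, you will find that most of the available points appear in the figure. In addition, Graphics.ExecuteCommandBuffer executes a prepared command buffer immediately.

There are also many commands that can be recorded; see the official CommandBuffer documentation for details. My first reaction after reading the API was that a lot is exposed. For an engine developer this is a feature to show off with, and there is a great deal you can do with it. For now, though, let's look at how command buffers are used in post-processing.

Put simply, every frame draws many objects, and Unity classifies and sorts them: opaque, transparent, skybox, and so on. Post-processing as we used to understand it could only touch the framebuffer through OnRenderImage at two moments: after everything has been drawn, and after the opaque objects have been drawn (via ImageEffectOpaque). In theory, however, the framebuffer can be post-processed at any time. With a command buffer we can at least post-process at a set of fixed points. The API is CommandBuffer.Blit, which differs from Graphics.Blit. Graphics.Blit works on RenderTextures, and the source and destination come from the OnRenderImage parameters. CommandBuffer.Blit works on Rendering.RenderTargetIdentifier: BuiltinRenderTextureType.CurrentActive gives the currently active RT (both CommandBuffer.SetRenderTarget and CommandBuffer.Blit can change the current RT), and BuiltinRenderTextureType.CameraTarget gives the RT the current camera renders to. Process the framebuffer with a material, write the result back to the framebuffer, and the post-process is done. This also resembles GrabPass. Since GrabPass has performance problems on mobile, this CommandBuffer feature can replace it for effects such as frosted glass.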

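To summarize: among the draw calls of a frame, render order is controlled by render queue and Z value, and in the normal flow OnRenderImage gives two full-screen post-processing hooks, at the end of rendering and at the end of opaque rendering (ImageEffectOpaque). With CommandBuffer, code can now be injected at many more points. The injected code can be a full-screen post-process, or it can expose the framebuffer drawn so far as a texture for objects drawn later (like GrabPass). In other words, CommandBuffer lets later operations in the current frame use the results the frame has already produced. There is more to say about CommandBuffer, such as combining it with compute shaders (CS), but that goes on the TODO list for now.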
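As a rough mental model (this is not Unity's API), the injection mechanism can be pictured as an ordered list of frame steps, some of which are named points that hold attached command buffers. The sketch below is plain Python. The two event names are borrowed from CameraEvent, the frame order is a simplified subset of the forward path, and `FrameModel`, `add_command_buffer` and `render` are hypothetical names invented for illustration.

```python
from collections import defaultdict

# Simplified forward-rendering frame order (illustrative subset only).
FRAME_STEPS = [
    "draw opaque",
    "draw skybox",
    "BeforeImageEffectsOpaque",  # injection point
    "draw transparent",
    "BeforeImageEffects",        # injection point
]


class FrameModel:
    """Hypothetical stand-in for a camera that accepts command buffers."""

    def __init__(self):
        self.buffers = defaultdict(list)

    def add_command_buffer(self, event, commands):
        # Loosely mirrors the idea behind Camera.AddCommandBuffer(event, buffer).
        self.buffers[event].append(list(commands))

    def render(self):
        log = []
        for step in FRAME_STEPS:
            if step.startswith("draw"):
                log.append(step)
            # Run whatever was attached to this point, in attachment order.
            for buf in self.buffers.get(step, []):
                log.extend(buf)
        return log


frame = FrameModel()
frame.add_command_buffer("BeforeImageEffects", ["blit: bloom"])
frame.add_command_buffer("BeforeImageEffectsOpaque", ["blit: AO"])
order = frame.render()
print(order)
```

The order in which buffers are attached does not matter; what matters is the point they are attached to, which is what lets an effect read a frame that is only partly drawn.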

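The CommandBuffer version of PPSv2

SRP did not exist when PPSv2 first came out, so the original PPSv2 only used CommandBuffer. SRP is a complex topic in its own right, so let's first look at the CommandBuffer version of PPSv2 and, once that is clear, see how PPSv2 integrates with SRP. (The SRP version is actually simpler: decide which effects are needed, then call the corresponding PPS API from OpaquePostProcessPass and TransparentPostProcessPass to render them.)

PPS mainly uses four camera events: CameraEvent.BeforeReflections, CameraEvent.BeforeLighting, CameraEvent.BeforeImageEffectsOpaque and CameraEvent.BeforeImageEffects. The first two are for deferred rendering; only the last two apply to forward rendering.

The built-in PPSv2 effects all run after everything has been drawn, that is, after transparents (except AO, ScreenSpaceReflections and fog, which render after opaque). PPSv2 also supports custom effects. An attribute (PostProcessAttribute) selects one of three injection points: PostProcessEvent.BeforeTransparent, PostProcessEvent.BeforeStack and PostProcessEvent.AfterStack. These decide whether a custom effect runs before transparents, before the built-in effects, or after the built-in effects.

Some PPSv2 effects need the depth texture: DOF, Fog, MotionBlur, AO (ScalableAO also needs normals), ScreenSpaceReflection and TemporalAA. Of these, MotionBlur, ScreenSpaceReflection and TemporalAA also need motion vectors. There is also a curious debugLayer which, depending on the case, needs the depth texture, normals or motion vectors. These effects cost more than ordinary ones, because many objects have to be drawn in several additional passes.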

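In PPSv2 every effect behaves like a singleton. The flow is to first update the properties of every effect (for example, if ColorGrading is enabled and saturation is pushed to the maximum, then ColorGrading's saturation inside PPSv2 is at the maximum), and only then run the corresponding algorithms. The effects run in this order: AO (RenderAfterOpaque), ScreenSpaceReflections (opaqueOnlyEffects), fog (opaqueOnlyEffects), custom effects registered at PostProcessEvent.BeforeTransparent, TemporalAA (after transparents), custom effects registered at PostProcessEvent.BeforeStack, DOF, MotionBlur, AutoExposure, LensDistortion, ChromaticAberration, Bloom, Vignette, Grain, ColorGrading, dithering (final pass), custom effects registered at PostProcessEvent.AfterStack, debugLayer.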
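The ordering above can be written down as a small function, which makes it easy to see where a custom effect lands for each of the three injection points. This is only an illustrative Python sketch of the order listed in the text. `execution_order` and the effect names passed to it are hypothetical, and the sketch ignores whether each effect is enabled or supported.

```python
# Built-in stack, in the order given in the text.
BUILTIN_STACK = [
    "DOF", "MotionBlur", "AutoExposure", "LensDistortion",
    "ChromaticAberration", "Bloom", "Vignette", "Grain",
    "ColorGrading", "Dithering",
]


def execution_order(custom):
    """custom maps an injection point name to a list of custom effect names."""
    order = ["AO", "ScreenSpaceReflections", "Fog"]   # after opaque
    order += custom.get("BeforeTransparent", [])
    order.append("TemporalAA")                        # after transparents
    order += custom.get("BeforeStack", [])
    order += BUILTIN_STACK
    order += custom.get("AfterStack", [])
    order.append("debugLayer")
    return order


result = execution_order({
    "BeforeTransparent": ["VolumeLight"],   # hypothetical custom effects
    "BeforeStack": ["SunShaft"],
    "AfterStack": ["Scanlines"],
})
print(result)
```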

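All right, time to dive into the code. Using PPS takes three steps: 1. attach a PostProcessLayer to the Camera; 2. attach a PostProcessVolume anywhere; 3. create a PostProcessProfile on the PostProcessVolume and configure in it which effects are enabled. Let's start reading from PostProcessLayer.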

void OnEnable()

{

Init(null);

// Instantiates TemporalAntialiasing, SubpixelMorphologicalAntialiasing, FastApproximateAntialiasing, Dithering, Fog and PostProcessDebugLayer

if (!haveBundlesBeenInited)

InitBundles();

// This part is a bit involved. It first creates three Lists of SerializedBundleRef (m_BeforeTransparentBundles, m_BeforeStackBundles, m_AfterStackBundles) that hold the effects for the different stages. Each SerializedBundleRef contains a PostProcessBundle, and each PostProcessBundle maps to one set of PostProcessAttribute, PostProcessEffectSettings and PostProcessEffectRenderer. (Looking at a concrete effect class such as AO, the three correspond one to one: PostProcessEffectSettings is the effect's configuration, and PostProcessAttribute holds the renderer that implements the effect (the PostProcessEffectRenderer), the name menuItem, allowInSceneView, builtinEffect, the PostProcessEvent, and so on.) The PostProcessEffectRenderer is instantiated when its getter is called; it is then associated with the PostProcessEffectSettings and its Init function is run.

// Gets the PostProcessManager singleton. It mainly holds lists of PostProcessVolume, PostProcessEffectSettings, PostProcessAttribute and Collider. (PostProcessVolume and PostProcessEffectSettings are two separate dimensions: each PostProcessVolume can contain several effects, and each pair of PostProcessEffectSettings and PostProcessAttribute corresponds to one effect.) After clearing these lists, it finds every non-abstract subclass of PostProcessEffectSettings in the project that carries a PostProcessAttribute, instantiates them as the lowest-priority global volume of PPS (the defaults used when no volume applies), sets overrideState to true on all parameters of these PostProcessEffectSettings instances, and adds them to the list m_BaseSettings. It then adds each PostProcessEffectSettings type and its PostProcessAttribute to the Dictionary settingsTypes.

// Instantiates every key of the PostProcessManager singleton's settingsTypes Dictionary (the PostProcessEffectSettings types), creates a PostProcessBundle for each, and collects them in the PostProcessBundle list m_Bundles.

// Sorts m_BeforeTransparentBundles, m_BeforeStackBundles and m_AfterStackBundles by PostProcessEvent.BeforeTransparent, BeforeStack and AfterStack. In practice it takes from m_Bundles all non-built-in PostProcessBundle effects with the given PostProcessEvent, removes from sortedList every SerializedBundleRef that is built-in or that is not built-in but no longer exists, adds to sortedList a SerializedBundleRef (constructed from assemblyQualifiedName) for every entry that exists in effects but not in sortedList, and finally fills in the PostProcessBundle of each sortedList entry. The result is the set of SerializedBundleRef for all non-built-in effects in the project with the given PostProcessEvent.

// Combines the sorted m_BeforeTransparentBundles, m_BeforeStackBundles and m_AfterStackBundles into the Dictionary sortedBundles

m_LogHistogram = new LogHistogram();

m_PropertySheetFactory = new PropertySheetFactory();

// Stores shaders and materials

m_TargetPool = new TargetPool();

// Stores the values returned by Shader.PropertyToID("_TargetPool" + i)

debugLayer.OnEnable();

if (RuntimeUtilities.scriptableRenderPipelineActive)

return;

// Under SRP, OpaquePostProcessPass and TransparentPostProcessPass call postProcessLayer.RenderOpaqueOnly and Render directly.

InitLegacy();

// Gets the Camera m_Camera on the GameObject that holds the PostProcessLayer and forces forceIntoRenderTexture on it. Creates four CommandBuffers for CameraEvent.BeforeReflections (deferred), BeforeLighting (deferred), BeforeImageEffectsOpaque and BeforeImageEffects. Creates a PostProcessRenderContext m_CurrentContext.

}

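OK, to summarize. Everything so far is preparation: a number of things get instantiated. Most importantly, based on every eligible PostProcessEffectSettings in the project, a complete PostProcessBundle list m_Bundles is instantiated, together with a matching list m_BaseSettings in PostProcessManager that serves as the defaults, and a complete Dictionary settingsTypes. A PostProcessRenderContext is created whether or not SRP is active; the non-SRP path additionally prepares a set of CommandBuffers.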

void Reset()

{

volumeTrigger = transform;

// A PostProcessVolume is either global or non-global. Non-global volumes rely on a trigger, and this sets the transform that acts as the trigger for switching between volumes.

}

void OnPreCull()

//Called before the camera culls the scene. Culling determines which objects are visible to the camera. OnPreCull is called just before culling takes place.

{

// Unused in scriptable render pipelines

if (RuntimeUtilities.scriptableRenderPipelineActive)

return;

// Under SRP the rendering order is fully custom, so there may not even be a culling step; PPS therefore does not use this path.

if (m_Camera == null || m_CurrentContext == null)

InitLegacy();

// Initializes the Camera and PostProcessRenderContext. In theory they were already created in OnEnable.

// Resets the projection matrix from previous frame in case TAA was enabled.

// We also need to force reset the non-jittered projection matrix here as it's not done

// when ResetProjectionMatrix() is called and will break transparent rendering if TAA

// is switched off and the FOV or any other camera property changes.

m_Camera.ResetProjectionMatrix();

m_Camera.nonJitteredProjectionMatrix = m_Camera.projectionMatrix;

#if !UNITY_SWITCH

if (m_Camera.stereoEnabled)

{

m_Camera.ResetStereoProjectionMatrices();

Shader.SetGlobalFloat(ShaderIDs.RenderViewportScaleFactor, XRSettings.renderViewportScale);

}

else

#endif

{

Shader.SetGlobalFloat(ShaderIDs.RenderViewportScaleFactor, 1.0f);

}

BuildCommandBuffers();

// The core function. It configures the context and then runs, in order: ao\ssr\fog\m_BeforeTransparentBundles\temporalAntialiasing\m_BeforeStackBundles\RenderBuiltins:DepthOfField/MotionBlur/AutoExposure/LensDistortion/ChromaticAberration/Bloom/Vignette/Grain/ColorGrading(!breakBeforeColorGrading)/dither(!needsFinalPass)\m_AfterStackBundles\discardAlpha(breakBeforeColorGrading & needsFinalPass)\SubpixelMorphologicalAntialiasing(needsFinalPass)\dithering(needsFinalPass)\uberSheet (carries the FastApproximateAntialiasing settings)(needsFinalPass)\debugLayer.RenderSpecialOverlays & RenderMonitors.

}

void BuildCommandBuffers()

{

var context = m_CurrentContext;

var sourceFormat = m_Camera.allowHDR ? RuntimeUtilities.defaultHDRRenderTextureFormat : RenderTextureFormat.Default;

//HDR

//HDR -> m_NaNKilled = false

if (!RuntimeUtilities.isFloatingPointFormat(sourceFormat))

m_NaNKilled = true;

context.Reset();

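// Calls Reset, which clears many values.

context.camera = m_Camera;

// Assigns m_Camera to the context's camera

context.sourceFormat = sourceFormat;

// Assigns sourceFormat to the context's sourceFormat (if m_Camera has HDR enabled and the hardware supports RGB111110Float HDR, the HDR format is used; otherwise the default format. ES3.0 and later all support this format)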

// TODO: Investigate retaining command buffers on XR multi-pass right eye

m_LegacyCmdBufferBeforeReflections.Clear();

m_LegacyCmdBufferBeforeLighting.Clear();

m_LegacyCmdBufferOpaque.Clear();

m_LegacyCmdBuffer.Clear();

// Clears the four CommandBuffers created in OnEnable: m_LegacyCmdBufferBeforeReflections, m_LegacyCmdBufferBeforeLighting, m_LegacyCmdBufferOpaque and m_LegacyCmdBuffer

SetupContext(context);

// SetupContext fills in the context: isSceneView, resources (Patrick: oddly, I never found where m_Resources is assigned), propertySheets, debugLayer, antialiasing, temporalAntialiasing and logHistogram. context.camera.depthTextureMode is decided from the DepthTextureMode of every enabled effect in m_Bundles, plus whether temporalAntialiasing, fog and debugLayer (which are not part of m_Bundles) are enabled and their DepthTextureMode. (SubpixelMorphologicalAntialiasing, FastApproximateAntialiasing and Dithering need no DepthTextureMode.) Under SRP you decide the rendering order yourself, including whether to draw the depth/normal/motionVector passes. Finally it prepares the debug overlay.

// Note that context is assigned back to m_CurrentContext here, but later changes to context are not assigned to m_CurrentContext again.

context.command = m_LegacyCmdBufferOpaque;

// Start with m_LegacyCmdBufferOpaque. Commands recorded from here on execute at CameraEvent.BeforeImageEffectsOpaque

TextureLerper.instance.BeginFrame(context);

// Creates a TextureLerper, which manages RenderTextures, and passes the context's command, propertySheets and resources to it.

UpdateSettingsIfNeeded(context);

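// In short, this gathers the effects configured on all volumes into m_Bundles according to weight and so on. In detail:

// PostProcessManager.instance.UpdateSettings first copies the parameters of the defaults in PostProcessManager (the list m_BaseSettings) into the corresponding entries of m_Bundles.

// It collects every volume that matches the mask postProcessLayer.volumeLayer.value and sorts them by priority in ascending order.

// Volumes that are disabled, have no profileRef, or have weight <= 0 are skipped.

// It then iterates over the remaining volumes. If a volume isGlobal, every parameter of every setting on the volume whose ParameterOverride overrideState is true is lerped, by weight, into the matching parameter of the matching PostProcessEffectSettings in m_Bundles.

// If volumeTrigger is null only isGlobal volumes are used. By default volumeTrigger is usually not null, so non-global volumes are used as well.

// The GameObject of a non-global volume needs a collider to interact with volumeTrigger. Without a collider the volume is skipped, since it can never take effect.

// For every enabled collider on the volume's GameObject, Collider.ClosestPoint gives the point on the collider closest to volumeTrigger, from which the distance between the collider and volumeTrigger is computed. (Patrick: the distance is computed as ((closestPoint - triggerPos) / 2f).sqrMagnitude; I do not understand why there is a division by 2.) After all colliders have been visited, the result is the closest distance between the volume and volumeTrigger.

// PostProcessVolume also has a blendDistance parameter: when volumeTrigger has not yet entered the volume but is within blendDistance of it, the volume starts to fade in.

// A non-global volume is lerped in the same way as a global one, with the factor derived from its weight, closestDistanceSqr and blendDistSqr.

// With two volumes, one global and one non-global (triggered), the non-global volume's priority must be higher than the global one's, otherwise it will never take effect.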

// Lighting & opaque-only effects

var aoBundle = GetBundle<AmbientOcclusion>();

// Gets the AO bundle

var aoSettings = aoBundle.CastSettings<AmbientOcclusion>();

// Gets the AO settings

var aoRenderer = aoBundle.CastRenderer<AmbientOcclusionRenderer>();

// Gets the AO renderer

bool aoSupported = aoSettings.IsEnabledAndSupported(context);

// Whether AO is enabled and supported by the device

bool aoAmbientOnly = aoRenderer.IsAmbientOnly(context);

// Only takes effect with the deferred rendering path and HDR rendering; always false in forward

bool isAmbientOcclusionDeferred = aoSupported && aoAmbientOnly;

bool isAmbientOcclusionOpaque = aoSupported && !aoAmbientOnly;

var ssrBundle = GetBundle<ScreenSpaceReflections>();

// Gets the SSR bundle

var ssrSettings = ssrBundle.settings;

// Gets the SSR settings

var ssrRenderer = ssrBundle.renderer;

// Gets the SSR renderer

bool isScreenSpaceReflectionsActive = ssrSettings.IsEnabledAndSupported(context);

// Whether SSR is enabled and supported by the device

// Ambient-only AO is a special case and has to be done in separate command buffers

if (isAmbientOcclusionDeferred)

{

var ao = aoRenderer.Get();

// Render as soon as possible - should be done async in SRPs when available

context.command = m_LegacyCmdBufferBeforeReflections;

ao.RenderAmbientOnly(context);

// Composite with GBuffer right before the lighting pass

context.command = m_LegacyCmdBufferBeforeLighting;

ao.CompositeAmbientOnly(context);

}

else if (isAmbientOcclusionOpaque)

{

context.command = m_LegacyCmdBufferOpaque;

aoRenderer.Get().RenderAfterOpaque(context);

//AO

}

bool isFogActive = fog.IsEnabledAndSupported(context);

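// Whether fog is enabled and supported by the device

bool hasCustomOpaqueOnlyEffects = HasOpaqueOnlyEffects(context);

// Whether there are enabled, device-supported, non-built-in effects that only affect opaque objects, i.e. whether m_BeforeTransparentBundles has any active entry.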

int opaqueOnlyEffects = 0;

opaqueOnlyEffects += isScreenSpaceReflectionsActive ? 1 : 0;

opaqueOnlyEffects += isFogActive ? 1 : 0;

opaqueOnlyEffects += hasCustomOpaqueOnlyEffects ? 1 : 0;

// This works on right eye because it is resolved/populated at runtime

var cameraTarget = new RenderTargetIdentifier(BuiltinRenderTextureType.CameraTarget);

// Creates an identifier for the target texture of the currently rendering camera.

if (opaqueOnlyEffects > 0)

{

var cmd = m_LegacyCmdBufferOpaque;

context.command = cmd;

// We need to use the internal Blit method to copy the camera target or it'll fail

// on tiled GPU as it won't be able to resolve

int tempTarget0 = m_TargetPool.Get();

context.GetScreenSpaceTemporaryRT(cmd, tempTarget0, 0, sourceFormat);

// Gets a temporary RT, tempTarget0, through cmd.GetTemporaryRT

cmd.BuiltinBlit(cameraTarget, tempTarget0, RuntimeUtilities.copyStdMaterial, stopNaNPropagation ? 1 : 0);

// Copies the contents of cameraTarget into tempTarget0 using copyStdMaterial

context.source = tempTarget0;

int tempTarget1 = -1;

if (opaqueOnlyEffects > 1)

{

tempTarget1 = m_TargetPool.Get();

context.GetScreenSpaceTemporaryRT(cmd, tempTarget1, 0, sourceFormat);

// Gets a temporary RT, tempTarget1, through cmd.GetTemporaryRT

context.destination = tempTarget1;

}

else context.destination = cameraTarget;

// If opaqueOnlyEffects == 1, the source is simply blitted straight to cameraTarget.

if (isScreenSpaceReflectionsActive)

{

ssrRenderer.Render(context);

//SSR

opaqueOnlyEffects--;

var prevSource = context.source;

context.source = context.destination;

// SSR is done; its output becomes the input of the next effect

context.destination = opaqueOnlyEffects == 1 ? cameraTarget : prevSource;

// After SSR, if opaqueOnlyEffects == 1 the next effect blits straight to cameraTarget.

}

if (isFogActive)

{

fog.Render(context);

//fog

opaqueOnlyEffects--;

var prevSource = context.source;

context.source = context.destination;

// Fog is done; its output becomes the input of the next effect

context.destination = opaqueOnlyEffects == 1 ? cameraTarget : prevSource;

// After fog, if opaqueOnlyEffects == 1 the next effect blits straight to cameraTarget.

}

if (hasCustomOpaqueOnlyEffects)

RenderOpaqueOnly(context);

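// Renders the non-built-in effects that only affect opaque objects, i.e. the enabled and device-supported effects in m_BeforeTransparentBundles

// With one effect in the list, its Render function is called directly

// With two effects, the first renders from context.source to tempTarget1 and the second from tempTarget1 to context.destination; tempTarget1 has to be created and released

// With more than two, the chain is context.source -> tempTarget1 -> tempTarget2 -> ... -> tempTarget1 -> tempTarget2 -> ... -> context.destination; tempTarget1 and tempTarget2 have to be created and released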

if (opaqueOnlyEffects > 1)

cmd.ReleaseTemporaryRT(tempTarget1);

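// Releases the RT (Patrick: this looks like a bug. opaqueOnlyEffects has already been decremented several times by this point, so it can never be greater than 1 here)

cmd.ReleaseTemporaryRT(tempTarget0);

// Releases the RT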

}

// Post-transparency stack

// Same as before, first blit needs to use the builtin Blit command to properly handle

// tiled GPUs

int tempRt = m_TargetPool.Get();

context.GetScreenSpaceTemporaryRT(m_LegacyCmdBuffer, tempRt, 0, sourceFormat, RenderTextureReadWrite.sRGB);

// Gets a temporary RT, tempRt, through cmd.GetTemporaryRT

m_LegacyCmdBuffer.BuiltinBlit(cameraTarget, tempRt, RuntimeUtilities.copyStdMaterial, stopNaNPropagation ? 1 : 0);

// Copies the contents of cameraTarget into tempRt using copyStdMaterial

if (!m_NaNKilled)

m_NaNKilled = stopNaNPropagation;

context.command = m_LegacyCmdBuffer;

// Commands recorded from here on execute at CameraEvent.BeforeImageEffects

context.source = tempRt;

context.destination = cameraTarget;

Render(context);

// temporalAntialiasing.Render

// Renders the enabled and device-supported effects in m_BeforeStackBundles

// RenderBuiltins: DepthOfField/MotionBlur/AutoExposure/LensDistortion/ChromaticAberration/Bloom/Vignette/Grain/ColorGrading(!breakBeforeColorGrading)/dither(isFinalPass), and finally uberSheet blits context.source to context.destination (Patrick: what is uberSheet?). Note that m_LogHistogram is used here to build the log histogram for AutoExposure.

// Renders the enabled and device-supported effects in m_AfterStackBundles

// If needsFinalPass: when breakBeforeColorGrading is set, discardAlpha blits context.source to context.destination. Otherwise SubpixelMorphologicalAntialiasing.Render runs, then dithering.Render, and finally uberSheet (carrying the FastApproximateAntialiasing settings) blits context.source to context.destination.

// debugLayer.RenderSpecialOverlays & RenderMonitors.

m_LegacyCmdBuffer.ReleaseTemporaryRT(tempRt);

}

void OnPreRender()

{

// Unused in scriptable render pipelines

// Only needed for multi-pass stereo right eye

if (RuntimeUtilities.scriptableRenderPipelineActive ||

(m_Camera.stereoActiveEye != Camera.MonoOrStereoscopicEye.Right))

return;

// PPS was already set up in OnPreCull, so unless this is VR (the multi-pass right eye) this is skipped

BuildCommandBuffers();

}

void OnPostRender()

{

// Unused in scriptable render pipelines

if (RuntimeUtilities.scriptableRenderPipelineActive)

return;

if (m_CurrentContext.IsTemporalAntialiasingActive())

{

m_Camera.ResetProjectionMatrix();

// Resets the projection matrix

if (m_CurrentContext.stereoActive)

{

if (RuntimeUtilities.isSinglePassStereoEnabled || m_Camera.stereoActiveEye == Camera.MonoOrStereoscopicEye.Right)

m_Camera.ResetStereoProjectionMatrices();

}

}

}

To summarize: after the opaque objects are drawn, run ao\ssr\fog\m_BeforeTransparentBundles; after everything is drawn, run temporalAntialiasing\m_BeforeStackBundles\RenderBuiltins:DepthOfField/MotionBlur/AutoExposure/LensDistortion/ChromaticAberration/Bloom/Vignette/Grain/ColorGrading(!breakBeforeColorGrading)/dither(!needsFinalPass)/uberSheet\m_AfterStackBundles\discardAlpha(breakBeforeColorGrading & needsFinalPass)\SubpixelMorphologicalAntialiasing(needsFinalPass)\dithering(needsFinalPass)\uberSheet (carries the FastApproximateAntialiasing settings)(needsFinalPass)\debugLayer.RenderSpecialOverlays & RenderMonitors. The core function of PPS is really BuildCommandBuffers, which sets up the src, dest and timing of every effect.
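The source/destination bookkeeping in the opaque-only block of BuildCommandBuffers is easier to follow when replayed outside Unity. The Python sketch below mirrors only the swapping logic from the listing. It is a simplified model: the custom opaque-only effects are treated as a single blit, the render targets are plain strings, and `opaque_only_chain` is a hypothetical helper name.

```python
CAMERA = "cameraTarget"


def opaque_only_chain(ssr, fog, custom):
    """Replays the source/destination swaps of the opaque-only block.

    Returns (blits, temp1_allocated, temp1_released), where blits is a list
    of (effect, source, destination) tuples.
    """
    count = int(ssr) + int(fog) + int(custom)
    blits = []
    if count == 0:
        return blits, False, False

    source = "tempTarget0"             # holds a copy of the camera target
    temp1_allocated = count > 1
    destination = "tempTarget1" if temp1_allocated else CAMERA

    for name, active in (("ssr", ssr), ("fog", fog)):
        if not active:
            continue
        blits.append((name, source, destination))
        count -= 1
        prev_source = source
        source = destination
        destination = CAMERA if count == 1 else prev_source

    if custom:
        blits.append(("custom", source, destination))

    # Mirrors `if (opaqueOnlyEffects > 1) cmd.ReleaseTemporaryRT(tempTarget1);`
    temp1_released = count > 1
    return blits, temp1_allocated, temp1_released


all_three = opaque_only_chain(True, True, True)
print(all_three)
```

In this model the last active effect always writes to the camera target, and the final `count > 1` check never fires for any combination, which matches Patrick's remark in the listing that tempTarget1 is never released by that branch.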

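With the overall structure covered, and before going into each effect, let's look at a few base classes. First is PostProcessEffectSettings, which holds a list of ParameterOverride values named parameters. The list is built by reflection: it collects all public instance fields of the class whose type derives from ParameterOverride and sorts them by MetadataToken. The key part of ParameterOverride is its overrideState property; a parameter can only be overridden when it is turned on. Finally there is the abstract class PostProcessEffectRenderer, which declares several virtual functions: Init, SetSettings, Render, GetCameraFlags and Release. When the renderer of a PostProcessBundle is first requested, attribute.renderer is instantiated, SetSettings and Init are called on the new instance, and Render is called when the effect is actually used.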
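To make the role of overrideState concrete, here is a small Python sketch of how volumes could be blended into the current settings, following the description in the UpdateSettingsIfNeeded comments. The names `blend_factor` and `apply_volume` are hypothetical. The linear falloff `1 - closestDistSqr / blendDistSqr` is an assumption: the text only says that weight, closestDistanceSqr and blendDistSqr are combined, not how.

```python
def lerp(a, b, t):
    return a + (b - a) * t


def blend_factor(weight, is_global=True, closest_dist_sqr=0.0, blend_distance=0.0):
    """Returns the interpolation factor of a volume, or None if it is skipped."""
    w = min(max(weight, 0.0), 1.0)
    if is_global:
        return w
    blend_dist_sqr = blend_distance * blend_distance
    if closest_dist_sqr > blend_dist_sqr:
        return None                      # trigger is outside the blend range
    if blend_dist_sqr > 0.0:
        # Assumed falloff: full effect at the collider, zero at blendDistance.
        return w * (1.0 - closest_dist_sqr / blend_dist_sqr)
    return w


def apply_volume(state, overrides, factor):
    """overrides maps a parameter name to (override_state, value)."""
    for name, (override_state, value) in overrides.items():
        if override_state:               # parameters that are off are ignored
            state[name] = lerp(state[name], value, factor)


# Defaults, then volumes in ascending priority order.
state = {"saturation": 0.0, "intensity": 1.0}
apply_volume(state, {"saturation": (True, 100.0), "intensity": (False, 5.0)},
             blend_factor(1.0))
apply_volume(state, {"saturation": (True, -50.0)},
             blend_factor(1.0, is_global=False, closest_dist_sqr=1.0, blend_distance=2.0))
print(state)
```

Because later volumes lerp from whatever earlier volumes left behind, a triggered volume only shows up if it is applied after the global one, which is why its priority has to be higher.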

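Effects are nice, but performance matters more. Here is a quick list of what the unoptimized PPS in my project costs on an iPhone X (resolution 2436*1125, A11): SAO 15 ms, VolumeLight 14 ms, Fog 2 ms, Bloom 10 ms, SunShaft 13 ms, DOF 14 ms, LUT 0.1 ms, uber 1 ms. These numbers are partly due to the iPhone X's high resolution. On an iPhone 8 (resolution 1334*750, A11) the results are: SAO 6 ms, VolumeLight 5.5 ms, Fog 0.8 ms, Bloom 4 ms, SunShaft 5 ms, DOF 5 ms, LUT 0.14 ms, uber 0.3 ms. That is roughly 2/5 of the pixels for 2/5 of the time. The iPhone XS has an upgraded processor (A12).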
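The "2/5 of the pixels, 2/5 of the time" remark can be checked with a few lines of arithmetic. The Python snippet below only uses the figures quoted above; the variable names are mine.

```python
# Timings in milliseconds, copied from the measurements above.
iphone_x = {"SAO": 15, "VolumeLight": 14, "Fog": 2, "Bloom": 10,
            "SunShaft": 13, "DOF": 14, "LUT": 0.1, "uber": 1}
iphone_8 = {"SAO": 6, "VolumeLight": 5.5, "Fog": 0.8, "Bloom": 4,
            "SunShaft": 5, "DOF": 5, "LUT": 0.14, "uber": 0.3}

pixel_ratio = (1334 * 750) / (2436 * 1125)
time_ratios = {name: iphone_8[name] / iphone_x[name] for name in iphone_x}
total_ratio = sum(iphone_8.values()) / sum(iphone_x.values())

print(f"pixels: {pixel_ratio:.3f}")
for name, ratio in time_ratios.items():
    print(f"{name}: {ratio:.2f}")
print(f"total time: {total_ratio:.3f}")
```

The pixel ratio comes out at about 0.37 and the total time ratio at about 0.39, so the full-screen effects scale close to linearly with pixel count. The LUT pass is the exception: it does not get cheaper at the lower resolution.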

AmbientOcclusion

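ScalableAO does not support SRP and needs MRT (ES3), the depth texture and the normal texture.

MultiScaleVolumetricObscurance needs compute shaders and the RFloat, RHalf and R8 formats, and does not support mobile.

AO does not override SetSettings, so the base-class version is used: it assigns the PostProcessEffectSettings associated in m_Bundles to the PostProcessEffectRenderer.

AO overrides Init, which only defines an array m_Methods holding AO's two algorithms, ScalableAO and MultiScaleVO.

AO overrides GetCameraFlags: ScalableAO needs DepthTextureMode.Depth | DepthTextureMode.DepthNormals, and MultiScaleVO needs DepthTextureMode.Depth.

AO overrides Release, which calls Release on both ScalableAO and MultiScaleVO.

AO overrides Render, but it is not actually used. AO is invoked as follows. Code is clearer than more words, so let's read the code.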

var aoBundle = GetBundle<AmbientOcclusion>();

var aoSettings = aoBundle.CastSettings<AmbientOcclusion>();

var aoRenderer = aoBundle.CastRenderer<AmbientOcclusionRenderer>();

bool aoSupported = aoSettings.IsEnabledAndSupported(context);

// ScalableAmbientObscurance does not support SRP; MultiScaleVolumetricObscurance needs CS, RFloat, RHalf and R8 and does not support mobile

bool aoAmbientOnly = aoRenderer.IsAmbientOnly(context);

// ambientOnly is only supported with DeferredShading + HDR

bool isAmbientOcclusionDeferred = aoSupported && aoAmbientOnly;

bool isAmbientOcclusionOpaque = aoSupported && !aoAmbientOnly;

// Ambient-only AO is a special case and has to be done in separate command buffers

if (isAmbientOcclusionDeferred)

{

var ao = aoRenderer.Get();

// Render as soon as possible - should be done async in SRPs when available

context.command = m_LegacyCmdBufferBeforeReflections;

// In Unity's built-in deferred path, reflections come after the G-Buffer is drawn and before lighting

ao.RenderAmbientOnly(context);

// Draws the texture that holds the AO information

// Composite with GBuffer right before the lighting pass

context.command = m_LegacyCmdBufferBeforeLighting;

ao.CompositeAmbientOnly(context);

// Stores the AO information in GBuffer0

}

else if (isAmbientOcclusionOpaque)

{

context.command = m_LegacyCmdBufferOpaque;

aoRenderer.Get().RenderAfterOpaque(context);

}

Next, look at ScalableAO's RenderAmbientOnly function, which does nothing but call Render(context, cmd, 1);

void Render(PostProcessRenderContext context, CommandBuffer cmd, int occlusionSource)

{

DoLazyInitialization(context);

// Creates the RT m_Result (no depth, ARGB32, Linear, sized to the camera's width and height)

m_Settings.radius.value = Mathf.Max(m_Settings.radius.value, 1e-4f);

// Material setup

// Always use a quarter-res AO buffer unless High/Ultra quality is set.

bool downsampling = (int)m_Settings.quality.value < (int)AmbientOcclusionQuality.High;

float px = m_Settings.intensity.value;

float py = m_Settings.radius.value;

float pz = downsampling ? 0.5f : 1f;

float pw = m_SampleCount[(int)m_Settings.quality.value];

//Lowest -> 4, Low -> 6, Medium -> 10, High -> 8, Ultra -> 12

var sheet = m_PropertySheet;

sheet.ClearKeywords();

sheet.properties.SetVector(ShaderIDs.AOParams, new Vector4(px, py, pz, pw));

sheet.properties.SetVector(ShaderIDs.AOColor, Color.white - m_Settings.color.value);

// Note that AOColor is stored as 1 - AOColor here, because the later computation subtracts it back.

// In forward fog is applied at the object level in the geometry pass so we need to

// apply it to AO as well or it'll be drawn on top of the fog effect.

// Not needed in Deferred.

if (context.camera.actualRenderingPath == RenderingPath.Forward && RenderSettings.fog)

{

sheet.EnableKeyword("APPLY_FORWARD_FOG");

sheet.properties.SetVector(

ShaderIDs.FogParams,

new Vector3(RenderSettings.fogDensity, RenderSettings.fogStartDistance, RenderSettings.fogEndDistance)

);

}

// Texture setup

int ts = downsampling ? 2 : 1;

const RenderTextureFormat kFormat = RenderTextureFormat.ARGB32;

const RenderTextureReadWrite kRWMode = RenderTextureReadWrite.Linear;

const FilterMode kFilter = FilterMode.Bilinear;

// AO buffer

var rtMask = ShaderIDs.OcclusionTexture1;

int scaledWidth = context.width / ts;

int scaledHeight = context.height / ts;

context.GetScreenSpaceTemporaryRT(cmd, rtMask, 0, kFormat, kRWMode, kFilter, scaledWidth, scaledHeight);

// Creates an RT with no depth, ARGB32, Linear, Bilinear, at the scaled width and height

// AO estimation

cmd.BlitFullscreenTriangle(BuiltinRenderTextureType.None, rtMask, sheet, (int)Pass.OcclusionEstimationForward + occlusionSource);

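// Material scalableAO. Pass 1 of ScalableAO.shader works as follows. It first gets the camera-space position of the current pixel. Then it loops SAMPLE_COUNT times: apply an offset, project the offset point to get its projection-space coordinates, read back the camera-space position at that location, and subtract the two camera-space positions to get an offset vector. Take the dot product of that vector with the camera-space normal of the current pixel, subtract the camera-space depth of the current pixel, and divide by the length of the offset vector. If the vector from the pixel to its neighbor is parallel to the pixel's normal and the pixel is close to the camera, this value is larger; otherwise it is smaller. The per-sample results are summed. The sum is multiplied by RADIUS and passed through pow, and the pass outputs the AO together with the camera-space normal.

// Pass 1 reads the normal from _CameraGBufferTexture2 and the depth from _CameraDepthTexture, so it must run after the G-Buffer is filled

// Material scalableAO. Pass 0 of ScalableAO.shader is similar to pass 1. The difference is that it reads the normal from _CameraDepthNormalsTexture, so it must run at CameraEvent.BeforeImageEffectsOpaque.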

// Blur buffer

var rtBlur = ShaderIDs.OcclusionTexture2;

context.GetScreenSpaceTemporaryRT(cmd, rtBlur, 0, kFormat, kRWMode, kFilter);

// Creates the RT

// Separable blur (horizontal pass)

cmd.BlitFullscreenTriangle(rtMask, rtBlur, sheet, (int)Pass.HorizontalBlurForward + occlusionSource);

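// Material scalableAO. Pass 3 of ScalableAO.shader blurs rtMask from the previous step horizontally. It takes the dot product of the normal stored at the current pixel with the normals of 5 surrounding points (9 with the BLUR_HIGH_QUALITY macro, which is off by default), applies smoothstep(0.8, 1) to get each neighbor's weight, multiplies the weights by the neighbors' AO, adds the current pixel's AO, and takes the weighted average. The pass again outputs the AO and the camera-space normal.

// Material scalableAO. Pass 2 of ScalableAO.shader is similar to pass 3. The difference is that it reads the normal from _CameraDepthNormalsTexture, so it must run at CameraEvent.BeforeImageEffectsOpaque.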

cmd.ReleaseTemporaryRT(rtMask);

// Separable blur (vertical pass)

cmd.BlitFullscreenTriangle(rtBlur, m_Result, sheet, (int)Pass.VerticalBlur);

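// Material scalableAO. Pass 4 of ScalableAO.shader is similar to pass 3: it blurs rtBlur from the previous step vertically. It takes the dot product of the normal stored at the current pixel with the normals of 4 surrounding points (6 with the BLUR_HIGH_QUALITY macro, which is off by default), applies smoothstep(0.8, 1) to get each neighbor's weight, multiplies the weights by the neighbors' AO, adds the current pixel's AO, and takes the weighted average. The pass again outputs the AO and the camera-space normal.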

cmd.ReleaseTemporaryRT(rtBlur);

if (context.IsDebugOverlayEnabled(DebugOverlay.AmbientOcclusion))

context.PushDebugOverlay(cmd, m_Result, sheet, (int)Pass.DebugOverlay);

}

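After these steps the result is as follows: if the vectors from a pixel to its neighbors are parallel to the pixel's normal and the pixel is close to the camera, the output R value is larger; otherwise the R channel is smaller. GBA hold the pixel's camera-space normal.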
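The way the quality setting feeds into the Render function above can be summarized in a few lines. The Python sketch below mirrors the downsampling flag, the `pz` value, the sample count table from the comment in the listing and the size of the AO mask buffer. `ao_setup` is a hypothetical helper, and the quality names are assumed to follow the order Lowest, Low, Medium, High, Ultra given in that comment.

```python
QUALITIES = ["Lowest", "Low", "Medium", "High", "Ultra"]
SAMPLE_COUNT = [4, 6, 10, 8, 12]      # per quality, from the listing's comment


def ao_setup(quality, width, height):
    """Returns the values Render derives from the quality setting."""
    q = QUALITIES.index(quality)
    downsampling = q < QUALITIES.index("High")
    ts = 2 if downsampling else 1     # texture scale divisor
    return {
        "pz": 0.5 if downsampling else 1.0,
        "sample_count": SAMPLE_COUNT[q],
        "mask_size": (width // ts, height // ts),
    }


medium = ao_setup("Medium", 2436, 1125)
high = ao_setup("High", 2436, 1125)
print(medium)
print(high)
```

This also explains the odd-looking table: High uses fewer samples than Medium (8 versus 10) because it is the first level that runs the estimation at full resolution.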

Next is ScalableAO's CompositeAmbientOnly function:

public void CompositeAmbientOnly(PostProcessRenderContext context)

{

var cmd = context.command;

cmd.BeginSample("Ambient Occlusion Composite");

cmd.SetGlobalTexture(ShaderIDs.SAOcclusionTexture, m_Result);

cmd.BlitFullscreenTriangle(BuiltinRenderTextureType.None, m_MRT, BuiltinRenderTextureType.CameraTarget, m_PropertySheet, (int)Pass.CompositionDeferred);

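// m_MRT is m_MRTGBuffer0 plus CameraTarget. My guess is that the AO result has to be stored in m_MRTGBuffer0, which is why this command buffer runs before lighting. This needs at least ES3, so it is skipped on ES2

// Material scalableAO. Pass 6 of ScalableAO.shader blurs m_Result: it takes the dot product of the normal stored at the current pixel with the normals of the 4 surrounding pixels, applies smoothstep(0.8, 1) to get each neighbor's weight, multiplies the weights by the neighbors' AO, adds the current pixel's AO, and takes the weighted average. The result is written to m_MRTGBuffer0, keeping its RGB channels and setting the A channel to (1 - AO) * the original alpha of m_MRTGBuffer0. The RGB channels of CameraTarget receive (1 - AO * _AOColor) * the original RGB of CameraTarget, and the A channel keeps CameraTarget's original A. (The CameraTarget output seems pointless, though. What is it for? In my tests it indeed made no visible difference.)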

cmd.EndSample("Ambient Occlusion Composite");

}

If AmbientOnly is not enabled, RenderAfterOpaque is executed instead:

public void RenderAfterOpaque(PostProcessRenderContext context)

{

var cmd = context.command;

cmd.BeginSample("Ambient Occlusion");

Render(context, cmd, 0);

// Look back at Render above: the difference is small, only where the normal comes from (ambient-only reads it from GBuffer2, non-ambient-only from the depth-normals texture)

cmd.SetGlobalTexture(ShaderIDs.SAOcclusionTexture, m_Result);

cmd.BlitFullscreenTriangle(BuiltinRenderTextureType.None, BuiltinRenderTextureType.CameraTarget, m_PropertySheet, (int)Pass.CompositionForward, RenderBufferLoadAction.Load);

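// Material scalableAO. Pass 5 of ScalableAO.shader blurs m_Result: it takes the dot product of the normal stored at the current pixel with the normals of the 4 surrounding pixels, applies smoothstep(0.8, 1) to get each neighbor's weight, multiplies the weights by the neighbors' AO, adds the current pixel's AO, and takes the weighted average. The result is blended with CameraTarget and written back to CameraTarget: the RGB channels receive (1 - AO * _AOColor) * the original RGB of CameraTarget, and the A channel receives (1 - AO) * the original A of CameraTarget.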

cmd.EndSample("Ambient Occlusion");

}
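The normal-aware blur and the forward composition described in the comments above can be tried out on plain numbers. The Python sketch below is a simplified model: it keeps only the smoothstep(0.8, 1) weighting on the normal dot product and the `(1 - AO * _AOColor)` multiply, and leaves out any other kernel weights the real shader may apply. `blur_ao` and `composite_forward` are hypothetical names.

```python
def smoothstep(edge0, edge1, x):
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blur_ao(center, neighbors):
    """center and neighbors are (ao, normal) pairs; returns the weighted mean AO."""
    ao_c, n_c = center
    total, weight_sum = ao_c, 1.0
    for ao_n, n_n in neighbors:
        # Neighbors whose normal differs from the center's get little weight,
        # so AO does not bleed across geometric edges.
        w = smoothstep(0.8, 1.0, dot(n_c, n_n))
        total += ao_n * w
        weight_sum += w
    return total / weight_sum


def composite_forward(rgb, ao, ao_color):
    """RGB = (1 - AO * _AOColor) * original RGB."""
    return tuple(c * (1.0 - ao * k) for c, k in zip(rgb, ao_color))


up = (0.0, 0.0, 1.0)
side = (1.0, 0.0, 0.0)
same_surface = blur_ao((0.0, up), [(1.0, up)])
across_edge = blur_ao((0.0, up), [(1.0, side)])
shaded = composite_forward((1.0, 1.0, 1.0), 0.5, (1.0, 1.0, 1.0))
print(same_surface, across_edge, shaded)
```

With a neighbor on the same surface the AO is averaged (0.5 here), while a neighbor across a 90-degree edge contributes nothing.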

Now let's look at MultiScaleVO's RenderAmbientOnly function:

public void RenderAmbientOnly(PostProcessRenderContext context)

{

var cmd = context.command;

cmd.BeginSample("Ambient Occlusion Render");

SetResources(context.resources);

PreparePropertySheet(context);

// Prepares the material multiScaleAO, whose shader is MultiScaleVO.shader, and sets AOColor

CheckAOTexture(context);

// Creates the RT m_AmbientOnlyAO (no depth, R8, Linear, sized to the camera's width and height)

GenerateAOMap(cmd, context.camera, m_AmbientOnlyAO, null, false, false);

// Creates a series of RTs: LinearDepth (Original, RHalf), LowDepth (L1-L4, RFloat), TiledDepth (L3-L6, RHalf), Occlusion (L1-L4, R8), Combined (L1-L3, R8)

PushDebug(context);

cmd.EndSample("Ambient Occlusion Render");

}

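Optimization options:

- Use ScalableAO rather than MultiScaleVO.

- ScalableAO: the lower the quality, the cheaper. a. Fewer loop iterations (though the loop in this shader is not very expensive). b. Below High, a half-size RT is used.

- ScalableAO: use a fast mode that removes the blur. m_Result is an intermediate RT and its size can be set to 1/4.

- ScalableAO: modify the C# file: a. remove the blur; b. change m_SampleCount, which directly controls the loop count; c. reduce the number of blur samples (5 points each) in passes 2, 3, 4, 5 and 6 of ScalableAO.shader (material scalableAO).

- ScalableAO: turning AmbientOnly on or off makes almost no difference. With AmbientOnly on, the MRT pass just writes one extra RT that serves no purpose; it looks safe to drop, and in my tests the result was indeed the same.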

ScreenSpaceReflections

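SSR only supports deferred rendering. It needs the depth texture and motion vectors, and also requires compute shader support and copyTextureSupport.

SSR does not override SetSettings, so the base-class version is used: it assigns the PostProcessEffectSettings associated in m_Bundles to the PostProcessEffectRenderer.

SSR overrides GetCameraFlags: it needs DepthTextureMode.Depth | DepthTextureMode.MotionVectors.

SSR overrides Release, which destroys the RTs m_Resolve and m_History.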
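Before reading SSR's Render function, it helps to see the target-size arithmetic it performs in isolation: a square power-of-two size derived from the smaller screen dimension, halved or doubled by the resolution setting, and a LOD count chosen so that the smallest level is 8x8. The Python sketch below reproduces that arithmetic. `closest_power_of_two` is my own stand-in for Mathf.ClosestPowerOfTwo, and its tie-breaking toward the larger power is an assumption; `ssr_target` is a hypothetical helper name.

```python
import math


def closest_power_of_two(value):
    """Stand-in for Mathf.ClosestPowerOfTwo (ties go up; an assumption)."""
    nxt = 1
    while nxt < value:
        nxt <<= 1
    prev = nxt >> 1
    return prev if value - prev < nxt - value else nxt


def ssr_target(width, height, resolution="FullSize", max_lods=12):
    size = closest_power_of_two(min(width, height))
    if resolution == "Downsampled":
        size >>= 1
    elif resolution == "Supersampled":
        size <<= 1
    # log2(size) - 3 levels leave a last level of 8x8 for the 8x8 compute blocks.
    lod_count = min(math.floor(math.log2(size) - 3), max_lods)
    return size, lod_count


full = ssr_target(2436, 1125)
down = ssr_target(2436, 1125, "Downsampled")
sup = ssr_target(2436, 1125, "Supersampled")
print(full, down, sup)
```

On the iPhone X resolution quoted earlier this gives a 1024x1024 target with 7 LODs at full size, and 512x512 with 6 LODs when downsampled.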

SSR overrides Render. Let's read the code.

public override void Render(PostProcessRenderContext context)

{

var cmd = context.command;

cmd.BeginSample("Screen-space Reflections");

// Get quality settings

if (settings.preset.value != ScreenSpaceReflectionPreset.Custom)

{

int id = (int)settings.preset.value;

settings.maximumIterationCount.value = m_Presets[id].maximumIterationCount;

// Number of iterations used to search for the reflection point

settings.thickness.value = m_Presets[id].thickness;

settings.resolution.value = m_Presets[id].downsampling;

}

//new QualityPreset { maximumIterationCount = 10, thickness = 32, downsampling = ScreenSpaceReflectionResolution.Downsampled }, // Lower

//new QualityPreset { maximumIterationCount = 16, thickness = 32, downsampling = ScreenSpaceReflectionResolution.Downsampled }, // Low

//new QualityPreset { maximumIterationCount = 32, thickness = 16, downsampling = ScreenSpaceReflectionResolution.Downsampled }, // Medium

//new QualityPreset { maximumIterationCount = 48, thickness = 8, downsampling = ScreenSpaceReflectionResolution.Downsampled }, // High

//new QualityPreset { maximumIterationCount = 16, thickness = 32, downsampling = ScreenSpaceReflectionResolution.FullSize }, // Higher

//new QualityPreset { maximumIterationCount = 48, thickness = 16, downsampling = ScreenSpaceReflectionResolution.FullSize }, // Ultra

//new QualityPreset { maximumIterationCount = 128, thickness = 12, downsampling = ScreenSpaceReflectionResolution.Supersampled }, // Overkill

settings.maximumMarchDistance.value = Mathf.Max(0f, settings.maximumMarchDistance.value);

// Sets the maximum distance a reflection ray is marched

// Square POT target

int size = Mathf.ClosestPowerOfTwo(Mathf.Min(context.width, context.height));

// Picks a suitable POT size from the window width and height

if (settings.resolution.value == ScreenSpaceReflectionResolution.Downsampled)

size >>= 1;

else if (settings.resolution.value == ScreenSpaceReflectionResolution.Supersampled)

size <<= 1;

// The gaussian pyramid compute works in blocks of 8x8 so make sure the last lod has a

// minimum size of 8x8

const int kMaxLods = 12;

int lodCount = Mathf.FloorToInt(Mathf.Log(size, 2f) - 3f);

lodCount = Mathf.Min(lodCount, kMaxLods);

CheckRT(ref m_Resolve, size, size, context.sourceFormat, FilterMode.Trilinear, true);

// Creates the RT m_Resolve, sized after applying Downsampled/FullSize/Supersampled, with context.sourceFormat, FilterMode.Trilinear and HideFlags.HideAndDontSave

var noiseTex = context.resources.blueNoise256[0];

var sheet = context.propertySheets.Get(context.resources.shaders.screenSpaceReflections);

sheet.properties.SetTexture(ShaderIDs.Noise, noiseTex);

var screenSpaceProjectionMatrix = new Matrix4x4();

screenSpaceProjectionMatrix.SetRow(0, new Vector4(size * 0.5f, 0f, 0f, size * 0.5f));

screenSpaceProjectionMatrix.SetRow(1, new Vector4(0f, size * 0.5f, 0f, size * 0.5f));

screenSpaceProjectionMatrix.SetRow(2, new Vector4(0f, 0f, 1f, 0f));

screenSpaceProjectionMatrix.SetRow(3, new Vector4(0f, 0f, 0f, 1f));

var projectionMatrix = GL.GetGPUProjectionMatrix(context.camera.projectionMatrix, false);

screenSpaceProjectionMatrix *= projectionMatrix;

sheet.properties.SetMatrix(ShaderIDs.ViewMatrix, context.camera.worldToCameraMatrix);

sheet.properties.SetMatrix(ShaderIDs.InverseViewMatrix, context.camera.worldToCameraMatrix.inverse);

sheet.properties.SetMatrix(ShaderIDs.InverseProjectionMatrix, projectionMatrix.inverse);

sheet.properties.SetMatrix(ShaderIDs.ScreenSpaceProjectionMatrix, screenSpaceProjectionMatrix);

sheet.properties.SetVector(ShaderIDs.Params, new Vector4((float)settings.vignette.value, settings.distanceFade.value, settings.maximumMarchDistance.value, lodCount));

sheet.properties.SetVector(ShaderIDs.Params2, new Vector4((float)context.width / (float)context.height, (float)size / (float)noiseTex.width, settings.thickness.value, settings.maximumIterationCount.value));

cmd.GetTemporaryRT(ShaderIDs.Test, size, size, 0, FilterMode.Point, context.sourceFormat);

// Create temporary RT ShaderIDs.Test, the same size as m_Resolve, with FilterMode.Point and context.sourceFormat

cmd.BlitFullscreenTriangle(context.source, ShaderIDs.Test, sheet, (int)Pass.Test);

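// Pass 0 (Test) of ScreenSpaceReflections.shader: from the pixel's camera-space position, derive the camera-space view direction, then reflect it about the camera-space normal to get the reflection direction. If the reflection direction's Z points toward the camera, there is no reflection and the pass returns 0. Otherwise it marches along that direction with a given step size and iteration budget until it finds a point on real scene geometry, then records that point's screen-space coordinates and the number of iterations used. The fourth output component is a mask: true if a hit was found, false if not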

if (context.isSceneView)

{

cmd.BlitFullscreenTriangle(context.source, m_Resolve, sheet, (int)Pass.Resolve);

// Pass 1 (Resolve) of ScreenSpaceReflections.shader: pixels with a reflection hit output the processed color of the reflected object; pixels without a hit output their own color

}

else

{

CheckRT(ref m_History, size, size, context.sourceFormat, FilterMode.Bilinear, false);

// Create RT m_History, the same size as m_Resolve, with context.sourceFormat, FilterMode.Bilinear and HideFlags.HideAndDontSave

if (m_ResetHistory)

{

context.command.BlitFullscreenTriangle(context.source, m_History);

m_ResetHistory = false;

}

cmd.GetTemporaryRT(ShaderIDs.SSRResolveTemp, size, size, 0, FilterMode.Bilinear, context.sourceFormat);

// Create temporary RT SSRResolveTemp, the same size as m_Resolve, with context.sourceFormat and FilterMode.Bilinear

cmd.BlitFullscreenTriangle(context.source, ShaderIDs.SSRResolveTemp, sheet, (int)Pass.Resolve);

// Pass 1 (Resolve) of ScreenSpaceReflections.shader: pixels with a reflection hit output the processed color of the reflected object; pixels without a hit output their own color

sheet.properties.SetTexture(ShaderIDs.History, m_History);

cmd.BlitFullscreenTriangle(ShaderIDs.SSRResolveTemp, m_Resolve, sheet, (int)Pass.Reproject);

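// Pass 2 (Reproject) of ScreenSpaceReflections.shader: from the current pixel's UV and CameraMotionVectorsTexture, compute the UV this pixel had in the previous frame, fetch the previous frame's value from m_History, and blend it with the current pixel's value in SSRResolveTemp to produce m_Resolve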

cmd.CopyTexture(m_Resolve, 0, 0, m_History, 0, 0);

// Copy m_Resolve into m_History so the next frame can blend against this frame's colors

cmd.ReleaseTemporaryRT(ShaderIDs.SSRResolveTemp);

}

cmd.ReleaseTemporaryRT(ShaderIDs.Test);

// Pre-cache mipmaps ids

if (m_MipIDs == null || m_MipIDs.Length == 0)

{

m_MipIDs = new int[kMaxLods];

for (int i = 0; i < kMaxLods; i++)

m_MipIDs[i] = Shader.PropertyToID("_SSRGaussianMip" + i);

}

var compute = context.resources.computeShaders.gaussianDownsample;

int kernel = compute.FindKernel("KMain");

var last = new RenderTargetIdentifier(m_Resolve);

for (int i = 0; i < lodCount; i++)

{

size >>= 1;

Assert.IsTrue(size > 0);

cmd.GetTemporaryRT(m_MipIDs[i], size, size, 0, FilterMode.Bilinear, context.sourceFormat, RenderTextureReadWrite.Default, 1, true);

cmd.SetComputeTextureParam(compute, kernel, "_Source", last);

cmd.SetComputeTextureParam(compute, kernel, "_Result", m_MipIDs[i]);

cmd.SetComputeVectorParam(compute, "_Size", new Vector4(size, size, 1f / size, 1f / size));

cmd.DispatchCompute(compute, kernel, size / 8, size / 8, 1);

cmd.CopyTexture(m_MipIDs[i], 0, 0, m_Resolve, 0, i + 1);

last = m_MipIDs[i];

}

// In short, the gaussianDownsample compute shader generates the mip chain of RT m_Resolve

for (int i = 0; i < lodCount; i++)

cmd.ReleaseTemporaryRT(m_MipIDs[i]);

sheet.properties.SetTexture(ShaderIDs.Resolve, m_Resolve);

cmd.BlitFullscreenTriangle(context.source, context.destination, sheet, (int)Pass.Composite);

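// Pass 3 (Composite) of ScreenSpaceReflections.shader: this one is more involved. It first fetches the current pixel's value from m_Resolve. When the pixel's roughness is high, or when the hitPacked position computed earlier is far from the current pixel, it samples a blurrier mip as the reflection image. The sampled value is treated as indirect specular and combined through the BRDF with the pixel's world-space normal, eye vector, gbuffer0 and gbuffer1 to get the reflected color. That color is lerped with the reflection probe, multiplied by AO, and then added to (pre-effect color - reflection probe) to give the final result.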

cmd.EndSample("Screen-space Reflections");

}

Overall, this is similar to Stochastic Screen Space Reflections, but somewhat clearer.
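The screenSpaceProjectionMatrix built in Render is a scale-and-bias matrix multiplied onto the GPU projection matrix, so that after the perspective divide a point lands in pixel coordinates of the size x size target rather than in [-1, 1]. A minimal sketch of that arithmetic for a single axis, in plain C# without Unity types (the class and method names are hypothetical, not part of PPSv2):

```csharp
using System;

// Hypothetical helper: reproduces row 0 (or row 1) of the scale-and-bias
// matrix for one axis, followed by the perspective divide.
public static class SsrScreenSpaceSketch
{
    // clipCoord and clipW are the x (or y) and w components of a point
    // after the ordinary projection matrix has been applied.
    public static double ToPixel(double clipCoord, double clipW, int size)
    {
        // Row 0 of the matrix is (size/2, 0, 0, size/2).
        double scaled = size * 0.5 * clipCoord + size * 0.5 * clipW;
        // Row 3 is (0, 0, 0, 1), so w passes through unchanged.
        return scaled / clipW;
    }
}
```

With size = 512, the left edge (NDC -1) maps to pixel 0, the center to 256 and the right edge to 512, which is why the ray march can step directly in texel units.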

Optimization options:

- Use a preset of High or lower and turn on ReduceRT, so the RT can be 1/4 of the screen size

- Use FastMode, which removes the need for MotionVector, the blit that blends in data from the previous frame, and one CopyTexture.

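- The lower the preset, the better. Apart from the RT size, thickness affects the step size, so within a reasonable range larger is better, while maximumIterationCount should be as small as is reasonable. If performance still falls short, switch to the Custom preset and set the values yourself.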

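- Keep Maximum March Distance as small as possible. When no reflection hit can be found, this reduces the loop count. The downside is that a hit that does exist may be missed because the march stops early, which can slightly degrade the result, so tune it to the actual scene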

- With FastMode, the three blits can in fact be merged into one
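To make the RT-size point concrete, the sizing logic at the top of Render can be replayed outside Unity. This is a sketch only: the enum mirrors ScreenSpaceReflectionResolution, ClosestPowerOfTwo is a stand-in for Mathf.ClosestPowerOfTwo (its tie-breaking here is an assumption), and an integer log2 replaces Mathf.Log(size, 2f), which is equivalent for power-of-two sizes.

```csharp
using System;

// Hypothetical mirror of ScreenSpaceReflectionResolution.
public enum SsrResolution { Downsampled, FullSize, Supersampled }

public static class SsrSizingSketch
{
    const int MaxLods = 12; // kMaxLods in the listing

    // Stand-in for Mathf.ClosestPowerOfTwo; ties go to the larger power (assumption).
    public static int ClosestPowerOfTwo(int value)
    {
        int lower = 1;
        while (lower * 2 <= value) lower *= 2;
        int upper = lower * 2;
        return (value - lower < upper - value) ? lower : upper;
    }

    // Square power-of-two target, halved or doubled by the resolution mode.
    public static int TargetSize(int width, int height, SsrResolution resolution)
    {
        int size = ClosestPowerOfTwo(Math.Min(width, height));
        if (resolution == SsrResolution.Downsampled) size >>= 1;
        else if (resolution == SsrResolution.Supersampled) size <<= 1;
        return size;
    }

    // log2(size) - 3, capped at MaxLods, so the smallest mip is still 8x8.
    public static int LodCount(int size)
    {
        int log2 = 0;
        while ((1 << (log2 + 1)) <= size) log2++;
        return Math.Min(log2 - 3, MaxLods);
    }
}
```

For a 1920x1080 view this gives 512 / 1024 / 2048 texels per side for Downsampled / FullSize / Supersampled, with 6 / 7 / 8 blurred mips respectively, so each step up in resolution quadruples the pixels that every SSR pass touches.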

DepthOfField

First, DepthOfFieldRenderer has a constructor, which creates two sets of RTs, m_CoCHistoryTextures.

DepthOfField does not override SetSettings, so the base-class version is used, which assigns the PostProcessEffectSettings associated in m_Bundles to the PostProcessEffectRenderer.

DepthOfField does not override Init either, but it does override GetCameraFlags, because this effect needs the depth texture.

DepthOfField overrides Render, so let's go straight to the code:

public override void Render(PostProcessRenderContext context)

{

var colorFormat = RenderTextureFormat.DefaultHDR;

var cocFormat = SelectFormat(RenderTextureFormat.R8, RenderTextureFormat.RHalf);

// Avoid using R8 on OSX with Metal. #896121, https://goo.gl/MgKqu6

#if (UNITY_EDITOR_OSX || UNITY_STANDALONE_OSX) && !UNITY_2017_1_OR_NEWER

if (SystemInfo.graphicsDeviceType == UnityEngine.Rendering.GraphicsDeviceType.Metal)

cocFormat = SelectFormat(RenderTextureFormat.RHalf, RenderTextureFormat.Default);

#endif

// Material setup

float scaledFilmHeight = k_FilmHeight * (context.height / 1080f);

var f = settings.focalLength.value / 1000f;

var s1 = Mathf.Max(settings.focusDistance.value, f);

var aspect = (float)context.screenWidth / (float)context.screenHeight;

var coeff = f * f / (settings.aperture.value * (s1 - f) * scaledFilmHeight * 2f);

var maxCoC = CalculateMaxCoCRadius(context.screenHeight);

var sheet = context.propertySheets.Get(context.resources.shaders.depthOfField);

sheet.properties.Clear();

float temp = settings.range.value;

//dll+ focal length [-0.009,0] 2018/10/25

sheet.properties.SetFloat(ShaderIDs.Range, temp);

float temp2 = settings.blurIntensity.value;

//dll+ blur intensity [1,10] 2018/10/25

sheet.properties.SetFloat(ShaderIDs.BlurIntensity, temp2);

// range and blurIntensity were added at the request of our artists

sheet.properties.SetFloat(ShaderIDs.Distance, s1);

sheet.properties.SetFloat(ShaderIDs.LensCoeff, coeff);

sheet.properties.SetFloat(ShaderIDs.MaxCoC, maxCoC);

sheet.properties.SetFloat(ShaderIDs.RcpMaxCoC, 1f / maxCoC);

sheet.properties.SetFloat(ShaderIDs.RcpAspect, 1f / aspect);

var cmd = context.command;

cmd.BeginSample("DepthOfField");

// CoC calculation pass

context.GetScreenSpaceTemporaryRT(cmd, ShaderIDs.CoCTex, 0, cocFormat, RenderTextureReadWrite.Linear);

// Create a single-channel, linear-space RT, CoCTex

cmd.BlitFullscreenTriangle(BuiltinRenderTextureType.None, ShaderIDs.CoCTex, sheet, (int)Pass.CoCCalculation);

// Pass 0 of DepthOfField.shader (material depthOfField): compares the current pixel's depth with focusDistance to derive the CoC value

// CoC temporal filter pass when TAA is enabled

if (context.IsTemporalAntialiasingActive())

{

float motionBlending = context.temporalAntialiasing.motionBlending;

float blend = m_ResetHistory ? 0f : motionBlending; // Handles first frame blending

var jitter = context.temporalAntialiasing.jitter;

sheet.properties.SetVector(ShaderIDs.TaaParams, new Vector3(jitter.x, jitter.y, blend));

int pp = m_HistoryPingPong[context.xrActiveEye];

var historyRead = CheckHistory(context.xrActiveEye, ++pp % 2, context, cocFormat);

var historyWrite = CheckHistory(context.xrActiveEye, ++pp % 2, context, cocFormat);

m_HistoryPingPong[context.xrActiveEye] = ++pp % 2;

cmd.BlitFullscreenTriangle(historyRead, historyWrite, sheet, (int)Pass.CoCTemporalFilter);

cmd.ReleaseTemporaryRT(ShaderIDs.CoCTex);

cmd.SetGlobalTexture(ShaderIDs.CoCTex, historyWrite);

}

// Downsampling and prefiltering pass

context.GetScreenSpaceTemporaryRT(cmd, ShaderIDs.DepthOfFieldTex, 0, colorFormat, RenderTextureReadWrite.Default, FilterMode.Bilinear, context.width / 2, context.height / 2);

// Create an HDR RT, DepthOfFieldTex, at half the screen width and height

cmd.BlitFullscreenTriangle(context.source, ShaderIDs.DepthOfFieldTex, sheet, (int)Pass.DownsampleAndPrefilter);

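// Pass 2 of DepthOfField.shader (material depthOfField): samples color and CoC at the 4 points around the current pixel (4 texture fetches when UNITY_GATHER_SUPPORTED is defined, otherwise 8), computes the CoC-blurred value avg, and outputs avg and coc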

// Bokeh simulation pass

context.GetScreenSpaceTemporaryRT(cmd, ShaderIDs.DepthOfFieldTemp, 0, colorFormat, RenderTextureReadWrite.Default, FilterMode.Bilinear, context.width / 2, context.height / 2);

// Create an HDR RT, DepthOfFieldTemp, at half the screen width and height

cmd.BlitFullscreenTriangle(ShaderIDs.DepthOfFieldTex, ShaderIDs.DepthOfFieldTemp, sheet, (int)Pass.BokehSmallKernel + (int)settings.kernelSize.value);

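// Passes 3-6 of DepthOfField.shader (material depthOfField): sample the DepthOfFieldTex color and CoC at 16, 22, 43 or 71 points around the current pixel (one pass per kernelSize), compute the blurred rgb, and output rgb and coc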

// Postfilter pass

cmd.BlitFullscreenTriangle(ShaderIDs.DepthOfFieldTemp, ShaderIDs.DepthOfFieldTex, sheet, (int)Pass.PostFilter);

// Pass 7 of DepthOfField.shader (material depthOfField): samples color and CoC at 4 points around the current pixel, averages them, and outputs rgb and coc

cmd.ReleaseTemporaryRT(ShaderIDs.DepthOfFieldTemp);

// Debug overlay pass

if (context.IsDebugOverlayEnabled(DebugOverlay.DepthOfField))

context.PushDebugOverlay(cmd, context.source, sheet, (int)Pass.DebugOverlay);

// Combine pass

cmd.BlitFullscreenTriangle(context.source, context.destination, sheet, (int)Pass.Combine);

// Pass 8 of DepthOfField.shader (material depthOfField): computes the final color from the current pixel's DepthOfFieldTex and CoCTex values

cmd.ReleaseTemporaryRT(ShaderIDs.DepthOfFieldTex);

if (!context.IsTemporalAntialiasingActive())

cmd.ReleaseTemporaryRT(ShaderIDs.CoCTex);

cmd.EndSample("DepthOfField");

m_ResetHistory = false;

}
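The "Material setup" block in Render is a thin-lens calculation, and it is easier to follow with numbers plugged in. The sketch below repeats the LensCoeff arithmetic in plain C#. Two things are assumptions rather than facts from the listing: the value 0.024 for k_FilmHeight, and the way SignedCoC consumes the coefficient, which is only my reading of how the CoC pass likely uses _Distance and _LensCoeff (our project's shader also has the custom range and blurIntensity terms, which are left out here).

```csharp
using System;

// Hypothetical helper, not part of PPSv2.
public static class DofLensSketch
{
    // Assumed value of k_FilmHeight: a 24 mm film height expressed in metres.
    const double FilmHeight = 0.024;

    // Same arithmetic as the "Material setup" block in Render.
    public static double LensCoeff(double focalLengthMm, double focusDistance,
                                   double aperture, int screenHeight)
    {
        double scaledFilmHeight = FilmHeight * (screenHeight / 1080.0);
        double f = focalLengthMm / 1000.0;        // millimetres to metres
        double s1 = Math.Max(focusDistance, f);   // focus cannot be closer than f
        return f * f / (aperture * (s1 - f) * scaledFilmHeight * 2.0);
    }

    // Assumed shader-side use: negative in front of the focus plane,
    // zero on it, positive behind it.
    public static double SignedCoC(double depth, double focusDistance, double coeff)
    {
        return (depth - focusDistance) * coeff / Math.Max(depth, 1e-5);
    }
}
```

Halving the f-number (for example 5.6 to 2.8) doubles the coefficient, which matches the intuition that a wider aperture gives a shallower depth of field.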

Optimization options:

- 1. The smaller kernelSize, the better; the corresponding sample counts in the shader are 16, 22, 43 and 71

- 2. Use FastMode to skip the Bokeh and Postfilter steps, and shrink DepthOfFieldTex from 1/2 to 1/4 size
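One idiom in the TAA branch of Render is worth unpacking: the three `++pp % 2` expressions that pick historyRead and historyWrite. The same pattern is used by AutoExposure. A small sketch that replays it (the class and tuple names are hypothetical):

```csharp
using System;

// Hypothetical helper that replays the ping-pong index arithmetic.
public static class PingPongSketch
{
    // pp is the value stored in m_HistoryPingPong for the active eye.
    public static (int read, int write, int next) Step(int pp)
    {
        int read = ++pp % 2;   // index used for the history that is read
        int write = ++pp % 2;  // index used for the history that is written
        int next = ++pp % 2;   // value stored back for the following frame
        return (read, write, next);
    }
}
```

Starting from a stored value of 0, the first frame reads index 1 and writes index 0 and stores 1; the next frame reads 0 and writes 1 and stores 0. The two textures therefore swap roles every frame, so each frame reads what the previous frame wrote.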

AutoExposure

Requires compute shaders (CS); not supported on Android OpenGL.

AutoExposure does not override SetSettings, so the base-class version is used, which assigns the PostProcessEffectSettings associated in m_Bundles to the PostProcessEffectRenderer.

AutoExposure overrides the constructor, which creates m_AutoExposurePool, a two-dimensional array of four RTs.

AutoExposure overrides Release to destroy the RTs in m_AutoExposurePool.

AutoExposure overrides Render, which boils down to producing one RT, m_CurrentAutoExposure, for later stages to use. Now for the code:

public override void Render(PostProcessRenderContext context)

{

var cmd = context.command;

cmd.BeginSample("AutoExposureLookup");

// Create two RTs: 1x1, no depth, RFloat format, read/write enabled.

// Prepare autoExpo texture pool

// context.xrActiveEye: which eye is currently being rendered in VR

CheckTexture(context.xrActiveEye, 0);

CheckTexture(context.xrActiveEye, 1);

// Percentages of the histogram to filter out as too dark or too bright

// Make sure filtering values are correct to avoid apocalyptic consequences

float lowPercent = settings.filtering.value.x;

float highPercent = settings.filtering.value.y;

//0.01

const float kMinDelta = 1e-2f;

// Clamp the dark/bright percentages so that low lies in [1, high - 0.01] and high lies in [1 + 0.01, 99]

highPercent = Mathf.Clamp(highPercent, 1f + kMinDelta, 99f);

lowPercent = Mathf.Clamp(lowPercent, 1f, highPercent - kMinDelta);

// Minimum and maximum average luminance (exposure values)

// Clamp min/max adaptation values as well

float minLum = settings.minLuminance.value;

float maxLum = settings.maxLuminance.value;

// Keep the range ordered (min <= max)

settings.minLuminance.value = Mathf.Min(minLum, maxLum);

settings.maxLuminance.value = Mathf.Max(minLum, maxLum);

// Compute average luminance & auto exposure

// First-frame flag

bool firstFrame = m_ResetHistory || !Application.isPlaying;

string adaptation = null;

if (firstFrame || settings.eyeAdaptation.value == EyeAdaptation.Fixed)

adaptation = "KAutoExposureAvgLuminance_fixed";

else

adaptation = "KAutoExposureAvgLuminance_progressive";

var compute = context.resources.computeShaders.autoExposure;

int kernel = compute.FindKernel(adaptation);

cmd.SetComputeBufferParam(compute, kernel, "_HistogramBuffer", context.logHistogram.data);

cmd.SetComputeVectorParam(compute, "_Params1", new Vector4(lowPercent * 0.01f, highPercent * 0.01f, RuntimeUtilities.Exp2(settings.minLuminance.value), RuntimeUtilities.Exp2(settings.maxLuminance.value)));

cmd.SetComputeVectorParam(compute, "_Params2", new Vector4(settings.speedDown.value, settings.speedUp.value, settings.keyValue.value, Time.deltaTime));

cmd.SetComputeVectorParam(compute, "_ScaleOffsetRes", context.logHistogram.GetHistogramScaleOffsetRes(context));

if (firstFrame)

{

// We don't want eye adaptation when not in play mode because the GameView isn't

// animated, thus making it harder to tweak. Just use the final auto exposure value.

m_CurrentAutoExposure = m_AutoExposurePool[context.xrActiveEye][0];

cmd.SetComputeTextureParam(compute, kernel, "_Destination", m_CurrentAutoExposure);

cmd.DispatchCompute(compute, kernel, 1, 1, 1);

// Copy current exposure to the other pingpong target to avoid adapting from black

RuntimeUtilities.CopyTexture(cmd, m_AutoExposurePool[context.xrActiveEye][0], m_AutoExposurePool[context.xrActiveEye][1]);

m_ResetHistory = false;

}

else

{

int pp = m_AutoExposurePingPong[context.xrActiveEye];

var src = m_AutoExposurePool[context.xrActiveEye][++pp % 2];

var dst = m_AutoExposurePool[context.xrActiveEye][++pp % 2];

// Previous frame's exposure texture

cmd.SetComputeTextureParam(compute, kernel, "_Source", src);

cmd.SetComputeTextureParam(compute, kernel, "_Destination", dst);

cmd.DispatchCompute(compute, kernel, 1, 1, 1);

m_AutoExposurePingPong[context.xrActiveEye] = ++pp % 2;

m_CurrentAutoExposure = dst;

}

// In effect, exposure is adjusted automatically every frame based on the current luminance

cmd.EndSample("AutoExposureLookup");

context.autoExposureTexture = m_CurrentAutoExposure;

context.autoExposure = settings;

}
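The parameter sanitizing at the top of Render is easy to misread, because the order of the two clamps matters: high is clamped first, and low is then clamped against the already-clamped high. A minimal sketch in plain C# (names are hypothetical; Exp2 is a stand-in for RuntimeUtilities.Exp2, assumed to compute 2^x):

```csharp
using System;

// Hypothetical helper that replays the parameter clamping in Render.
public static class AutoExposureParamsSketch
{
    const double MinDelta = 1e-2; // kMinDelta in the listing

    // Returns the sanitized (low, high) histogram percentages.
    public static (double low, double high) ClampFiltering(double lowPercent, double highPercent)
    {
        highPercent = Clamp(highPercent, 1.0 + MinDelta, 99.0);
        lowPercent = Clamp(lowPercent, 1.0, highPercent - MinDelta);
        return (lowPercent, highPercent);
    }

    // Stand-in for RuntimeUtilities.Exp2 (assumed to be 2^x): converts the
    // min/max luminance settings, given in EV, into linear luminance.
    public static double Exp2(double x)
    {
        return Math.Pow(2.0, x);
    }

    static double Clamp(double v, double min, double max)
    {
        return Math.Max(min, Math.Min(max, v));
    }
}
```

If an artist accidentally sets the filtering range to (50, 45), the result is roughly (44.99, 45): the window collapses to the minimum width instead of inverting, which is the "apocalyptic consequence" the upstream comment guards against.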

Bloom

Bloom does not override SetSettings, so the base-class version is used, which assigns the PostProcessEffectSettings associated in m_Bundles to the PostProcessEffectRenderer.

Bloom overrides Init, which only defines an array, m_Pyramid, holding 16 entries that store Shader.PropertyToID("_BloomMipDown" + i) and Shader.PropertyToID("_BloomMipUp" + i). (Patrick: 16 entries is a lot; this could probably be optimized.)

Bloom overrides Render. No amount of prose is as clear as reading the code, so here it is:

public override void Render(PostProcessRenderContext context)

{

var cmd = context.command;

cmd.BeginSample("BloomPyramid");

// Begin a profiler sample

var sheet = context.propertySheets.Get(context.resources.shaders.bloom);

// Using the bloom shader specified in the resources, fetch the Shader and PropertySheet cached in PropertySheetFactory (that is, the material and its MaterialPropertyBlock)

// Apply auto exposure adjustment in the prefiltering pass

sheet.properties.SetTexture(ShaderIDs.AutoExposureTex, context.autoExposureTexture);

// Auto exposure; defaults to a white texture, i.e. 1

// Negative anamorphic ratio values distort vertically - positive is horizontal

float ratio = Mathf.Clamp(settings.anamorphicRatio, -1, 1);

float rw = ratio < 0 ? -ratio : 0f;

float rh = ratio > 0 ? ratio : 0f;

// Do bloom on a half-res buffer, full-res doesn't bring much and kills performances on

// fillrate limited platforms

int tw = Mathf.FloorToInt(context.screenWidth / (2f - rw));

int th = Mathf.FloorToInt(context.screenHeight / (2f - rh));

// Set the RT size according to the anamorphicRatio distortion

// Determine the iteration count

int s = Mathf.Max(tw, th);

float logs = Mathf.Log(s, 2f) + Mathf.Min(settings.diffusion.value, 10f) - 10f;

int logs_i = Mathf.FloorToInt(logs);

int iterations = Mathf.Clamp(logs_i, 1, k_MaxPyramidSize);

// Derive iterations from the RT size (and diffusion)

float sampleScale = 0.5f + logs - logs_i;

sheet.properties.SetFloat(ShaderIDs.SampleScale, sampleScale);

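// Sampling scale: 0.5 plus the fractional part of logs, so it is exactly 1/2 when logs is an integer and larger than 1/2 for other sizes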

// Prefiltering parameters

float lthresh = Mathf.GammaToLinearSpace(settings.threshold.value);

// PPS works in linear space by default, so colors passed to the shader are first converted gamma -> linear

float knee = lthresh * settings.softKnee.value + 1e-5f;

// Fade gradually just below and above the threshold so the transition is not too harsh

var threshold = new Vector4(lthresh, lthresh - knee, knee * 2f, 0.25f / knee);

sheet.properties.SetVector(ShaderIDs.Threshold, threshold);

float lclamp = Mathf.GammaToLinearSpace(settings.clamp.value);

// Clamp the bloom source color to cap its upper bound

sheet.properties.SetVector(ShaderIDs.Params, new Vector4(lclamp, 0f, 0f, 0f));

int qualityOffset = settings.fastMode ? 1 : 0;

// Downsample

var lastDown = context.source;

for (int i = 0; i < iterations; i++)

{

int mipDown = m_Pyramid[i].down;

int mipUp = m_Pyramid[i].up;

int pass = i == 0

? (int)Pass.Prefilter13 + qualityOffset

: (int)Pass.Downsample13 + qualityOffset;

context.GetScreenSpaceTemporaryRT(cmd, mipDown, 0, context.sourceFormat, RenderTextureReadWrite.Default, FilterMode.Bilinear, tw, th);

context.GetScreenSpaceTemporaryRT(cmd, mipUp, 0, context.sourceFormat, RenderTextureReadWrite.Default, FilterMode.Bilinear, tw, th);

// Create two small RTs at the current pyramid size

cmd.BlitFullscreenTriangle(lastDown, mipDown, sheet, pass);

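// Via cmd.DrawMesh: lastDown is bound as MainTex, mipDown is the render target, sheet is the material, and pass number `pass` is used to draw a single 3*3 triangle (it covers the screen but part of it is wasted; is this an optimization, or something that could be optimized?)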

// Reading Hidden/PostProcessing/Bloom: it has 9 passes in total, and every pass uses the same vert, which simply sets position and UV.

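// Prefilter13 (pass 0) frag: first blends 13 points around each _MainTex pixel, laid out in an eight-direction star pattern, by weight into one color. It then multiplies by the auto-exposure color and clamps against lclamp. The result is combined with threshold and knee to produce a threshold-filtered color for the later steps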

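// Prefilter13 + 1 = Prefilter4 (pass 1) frag: a cheaper version of pass 0. It blends the 4 corner points around each _MainTex pixel into one color, then multiplies by the auto-exposure color and clamps against lclamp. The result is combined with threshold and knee to produce a threshold-filtered color for the later steps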

// Downsample13 (pass 2) frag: a subset of pass 0. It only blends the 13 star-pattern points around each _MainTex pixel by weight into one color.

// Downsample13 + 1 = Downsample4 (pass 3) frag: a subset of pass 1. It only blends the 4 corner points around each _MainTex pixel into one color.

// After iterations loops, we have a blurred version of the threshold-filtered image

lastDown = mipDown;

// At the end of each loop, the destination becomes the source for the next loop

tw = Mathf.Max(tw / 2, 1);

th = Mathf.Max(th / 2, 1);

// Each loop halves the RT size

}

// Upsample

int lastUp = m_Pyramid[iterations - 1].down;

for (int i = iterations - 2; i >= 0; i--)

{

int mipDown = m_Pyramid[i].down;

int mipUp = m_Pyramid[i].up;

cmd.SetGlobalTexture(ShaderIDs.BloomTex, mipDown);

cmd.BlitFullscreenTriangle(lastUp, mipUp, sheet, (int)Pass.UpsampleTent + qualityOffset);

// The last downsample level is the initial input, the output of each other level is bound as BloomTex, and the result is written to mipUp

// UpsampleTent (pass 4) frag: blends 9 points around each _MainTex pixel by weight into one color, then adds BloomTex.

// UpsampleTent + 1 = UpsampleBox (pass 5) frag: a cheaper version of pass 4. It blends the 4 corner points around each _MainTex pixel into one color, then adds BloomTex.

// After iterations - 1 loops, we have the blurred, threshold-filtered image back at the top pyramid level

lastUp = mipUp;

}

var linearColor = settings.color.value.linear;

float intensity = RuntimeUtilities.Exp2(settings.intensity.value / 10f) - 1f;

var shaderSettings = new Vector4(sampleScale, intensity, settings.dirtIntensity.value, iterations);

// Debug overlays

if (context.IsDebugOverlayEnabled(DebugOverlay.BloomThreshold))

{

context.PushDebugOverlay(cmd, context.source, sheet, (int)Pass.DebugOverlayThreshold);

}

else if (context.IsDebugOverlayEnabled(DebugOverlay.BloomBuffer))

{

sheet.properties.SetVector(ShaderIDs.ColorIntensity, new Vector4(linearColor.r, linearColor.g, linearColor.b, intensity));

context.PushDebugOverlay(cmd, m_Pyramid[0].up, sheet, (int)Pass.DebugOverlayTent + qualityOffset);

}

// Lens dirtiness

// Keep the aspect ratio correct & center the dirt texture, we don't want it to be

// stretched or squashed

var dirtTexture = settings.dirtTexture.value == null

? RuntimeUtilities.blackTexture

: settings.dirtTexture.value;

var dirtRatio = (float)dirtTexture.width / (float)dirtTexture.height;

var screenRatio = (float)context.screenWidth / (float)context.screenHeight;

var dirtTileOffset = new Vector4(1f, 1f, 0f, 0f);

if (dirtRatio > screenRatio)

{

dirtTileOffset.x = screenRatio / dirtRatio;

dirtTileOffset.z = (1f - dirtTileOffset.x) * 0.5f;

}

else if (screenRatio > dirtRatio)

{

dirtTileOffset.y = dirtRatio / screenRatio;

dirtTileOffset.w = (1f - dirtTileOffset.y) * 0.5f;

}

// Shader properties

var uberSheet = context.uberSheet;

if (settings.fastMode)

uberSheet.EnableKeyword("BLOOM_LOW");

else

uberSheet.EnableKeyword("BLOOM");

uberSheet.properties.SetVector(ShaderIDs.Bloom_DirtTileOffset, dirtTileOffset);

uberSheet.properties.SetVector(ShaderIDs.Bloom_Settings, shaderSettings);

uberSheet.properties.SetColor(ShaderIDs.Bloom_Color, linearColor);

uberSheet.properties.SetTexture(ShaderIDs.Bloom_DirtTex, dirtTexture);

cmd.SetGlobalTexture(ShaderIDs.BloomTex, lastUp);

// Set up the state the uber pass needs

// Cleanup

for (int i = 0; i < iterations; i++)

{

if (m_Pyramid[i].down != lastUp)

cmd.ReleaseTemporaryRT(m_Pyramid[i].down);

if (m_Pyramid[i].up != lastUp)

cmd.ReleaseTemporaryRT(m_Pyramid[i].up);

}

cmd.EndSample("BloomPyramid");

context.bloomBufferNameID = lastUp;

}
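The "Determine the iteration count" block in Render packs several ideas into four lines, so here is the same arithmetic as a standalone sketch with worked numbers. It is plain C# with no Unity types; the class name is hypothetical and the cap of 16 for k_MaxPyramidSize is inferred from the 16 entries that Init allocates.

```csharp
using System;

// Hypothetical helper that replays the pyramid sizing in Bloom.Render.
public static class BloomPyramidSketch
{
    const int MaxPyramidSize = 16; // assumed value of k_MaxPyramidSize

    public static (int tw, int th, int iterations, double sampleScale) Plan(
        int screenWidth, int screenHeight, double anamorphicRatio, double diffusion)
    {
        // Negative ratios stretch vertically, positive ones horizontally.
        double ratio = Math.Max(-1.0, Math.Min(1.0, anamorphicRatio));
        double rw = ratio < 0 ? -ratio : 0.0;
        double rh = ratio > 0 ? ratio : 0.0;

        // Half resolution by default; the stretched axis keeps more pixels.
        int tw = (int)Math.Floor(screenWidth / (2.0 - rw));
        int th = (int)Math.Floor(screenHeight / (2.0 - rh));

        // log2 of the larger side, shifted by how far diffusion is below 10.
        int s = Math.Max(tw, th);
        double logs = Math.Log(s, 2.0) + Math.Min(diffusion, 10.0) - 10.0;
        int logsI = (int)Math.Floor(logs);
        int iterations = Math.Max(1, Math.Min(MaxPyramidSize, logsI));

        // The fractional part of logs is carried over into the sample scale.
        double sampleScale = 0.5 + logs - logsI;
        return (tw, th, iterations, sampleScale);
    }
}
```

At 1920x1080 with anamorphicRatio 0 and diffusion 7, the first level is 960x540, there are 6 downsample iterations, and sampleScale is about 1.41. Lowering diffusion by 1 removes one level, which in turn removes one downsample blit and one upsample blit.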

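As you can see, Bloom itself only produces lastUp, a texture holding the blurred above-threshold pixels, plus a handful of parameters. The rest of the work happens in uberSheet: Bloom turns on either the BLOOM_LOW or the BLOOM keyword on it. So next we need to look at what uberSheet does.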
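What counts as "above threshold" is governed by the four values Render packs into ShaderIDs.Threshold: (lthresh, lthresh - knee, knee * 2, 0.25 / knee). The sketch below packs the same vector and applies a quadratic soft-knee curve to it. The packing is taken from the listing; the Response function is an assumption about how the prefilter pass consumes the vector, written from the shape of those four values rather than copied from the shader.

```csharp
using System;

// Hypothetical helper illustrating the soft-knee bloom threshold.
public static class BloomThresholdSketch
{
    // Same packing as the Vector4 built in Render.
    public static double[] PackThreshold(double linearThreshold, double softKnee)
    {
        double knee = linearThreshold * softKnee + 1e-5;
        return new[] { linearThreshold, linearThreshold - knee, knee * 2.0, 0.25 / knee };
    }

    // Assumed shader-side curve: returns the factor a pixel's color is
    // multiplied by, given its brightness (max of r, g, b).
    public static double Response(double brightness, double[] t)
    {
        // Quadratic ramp inside [threshold - knee, threshold + knee].
        double rq = Math.Max(0.0, Math.Min(t[2], brightness - t[1]));
        rq = t[3] * rq * rq;
        // Above the knee the plain (brightness - threshold) term takes over.
        return Math.Max(rq, brightness - t[0]) / Math.Max(brightness, 1e-4);
    }
}
```

With threshold 1 and softKnee 0.5, a pixel of brightness 0.4 is removed entirely, one at exactly 1.0 keeps about 12.5% of its color, and one at 2.0 keeps 50%. With softKnee close to 0 the ramp disappears and the cut becomes hard.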

uberSheet is used in RenderBuiltins, so it runs at least once, and if needsFinalPass is set it runs once more in RenderFinalPass. Both calls go through cmd.BlitFullscreenTriangle(context.source, context.destination, uberSheet, 0);. Roughly speaking, context.source is the result rendered so far and context.destination is cameraTarget. Now let's look closely at the shader side of uberSheet.

The vert stage is simple: it just computes position and UV.

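The frag stage first samples _MainTex (that is, context.source) and multiplies it by _AutoExposureTex to get col. It then filters _BloomTex once more with UpsampleTent/UpsampleBox to get bloom, the blurred above-threshold value, and samples _Bloom_DirtTex to get dirt. Finally, bloom * intensity * _Bloom_Color * dirt * settings.dirtIntensity is added to col to give the final color. That is the end of Bloom.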
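The dirt texture read in the frag stage uses the tile/offset vector computed in the "Lens dirtiness" block of Render, which keeps the texture centered and unstretched when its aspect ratio differs from the screen's. A standalone sketch of that computation (plain C#, hypothetical class name, returning the values in the same x, y, z, w order as dirtTileOffset):

```csharp
using System;

// Hypothetical helper that replays the dirtTileOffset computation.
public static class DirtTileOffsetSketch
{
    // Returns { scaleX, scaleY, offsetX, offsetY }.
    public static double[] Compute(double dirtWidth, double dirtHeight,
                                   double screenWidth, double screenHeight)
    {
        double dirtRatio = dirtWidth / dirtHeight;
        double screenRatio = screenWidth / screenHeight;
        var result = new[] { 1.0, 1.0, 0.0, 0.0 };

        if (dirtRatio > screenRatio)
        {
            // Texture is wider than the screen: use only its central columns.
            result[0] = screenRatio / dirtRatio;
            result[2] = (1.0 - result[0]) * 0.5;
        }
        else if (screenRatio > dirtRatio)
        {
            // Texture is taller than the screen: use only its central rows.
            result[1] = dirtRatio / screenRatio;
            result[3] = (1.0 - result[1]) * 0.5;
        }
        return result;
    }
}
```

A 2048x1024 dirt texture on a square screen yields a scale of 0.5 and an offset of 0.25 on x, so only the middle half of the texture is shown; this is a "cover" style fit, where the texture is cropped rather than squashed.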

Optimization options:

- 1. Use fastMode, so each blur samples only 4 points instead of 13. The intermediate RTs can also be smaller, going from 1/2 to 1/4

- 2. The fewer iterations (the lower diffusion), the fewer blur passes.

Vignette

Vignette does not override SetSettings, so the base-class version is used, which assigns the PostProcessEffectSettings associated in m_Bundles to the PostProcessEffectRenderer.

Vignette overrides Render, but Render does no drawing of its own; it only sets parameters, which are consumed later in Uber.

public override void Render(PostProcessRenderContext context)

{

var sheet = context.uberSheet;

sheet.EnableKeyword("VIGNETTE");

sheet.properties.SetColor(ShaderIDs.Vignette_Color, settings.color.value);

if (settings.mode == VignetteMode.Classic)

{

sheet.properties.SetFloat(ShaderIDs.Vignette_Mode, 0f);

sheet.properties.SetVector(ShaderIDs.Vignette_Center, settings.center.value);

float roundness = (1f - settings.roundness.value) * 6f + settings.roundness.value;

sheet.properties.SetVector(ShaderIDs.Vignette_Settings, new Vector4(settings.intensity.value * 3f, settings.smoothness.value * 5f, roundness, settings.rounded.value ? 1f : 0f));

}

else // Masked

{

sheet.properties.SetFloat(ShaderIDs.Vignette_Mode, 1f);

sheet.properties.SetTexture(ShaderIDs.Vignette_Mask, settings.mask.value);

sheet.properties.SetFloat(ShaderIDs.Vignette_Opacity, Mathf.Clamp01(settings.opacity.value));

}

}
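Since Render only packs parameters, it helps to see how they could shape the falloff in Classic mode. The sketch below is an assumption about the Uber-side math, reconstructed from the way Vignette_Settings is packed (intensity * 3, smoothness * 5, remapped roundness, rounded flag); only RemapRoundness is taken directly from the listing, and all names are hypothetical.

```csharp
using System;

// Hypothetical helper illustrating a classic vignette falloff.
public static class VignetteSketch
{
    // From Render: roundness 1 maps to exponent 1, roundness 0 to exponent 6.
    public static double RemapRoundness(double roundness)
    {
        return (1.0 - roundness) * 6.0 + roundness;
    }

    // Assumed falloff: 1 leaves the pixel untouched, 0 replaces it with the
    // vignette color. aspect is screen width / height.
    public static double Factor(double u, double v, double centerX, double centerY,
                                double intensity, double smoothness, double roundness,
                                bool rounded, double aspect)
    {
        double dx = Math.Abs(u - centerX) * intensity * 3.0;
        double dy = Math.Abs(v - centerY) * intensity * 3.0;
        if (rounded) dx *= aspect; // keep the vignette circular on wide screens

        double exponent = RemapRoundness(roundness);
        dx = Math.Pow(Saturate(dx), exponent);
        dy = Math.Pow(Saturate(dy), exponent);

        return Math.Pow(Saturate(1.0 - (dx * dx + dy * dy)), smoothness * 5.0);
    }

    static double Saturate(double x)
    {
        return Math.Max(0.0, Math.Min(1.0, x));
    }
}
```

Under these assumptions the factor is 1 at the vignette center and falls to 0 in the screen corners at full intensity, with smoothness controlling how quickly it gets there.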

ColorGrading

ColorGrading does not override SetSettings, so the base-class version is used, which assigns the PostProcessEffectSettings associated in m_Bundles to the PostProcessEffectRenderer.

ColorGrading overrides Release to release the RTs it holds.

ColorGrading overrides Render, which boils down to generating one RT, m_InternalLdrLut, that Uber uses as _Lut2D.

Original technical article; writing it took real effort, so please credit the source when reposting: 电子设备中的画家|王烁, published May 29, 2018, original link (http://geekfaner.com/unity/blog11_PostProcessStackV2.html)