- Unity 2018.1 New Features Roundup

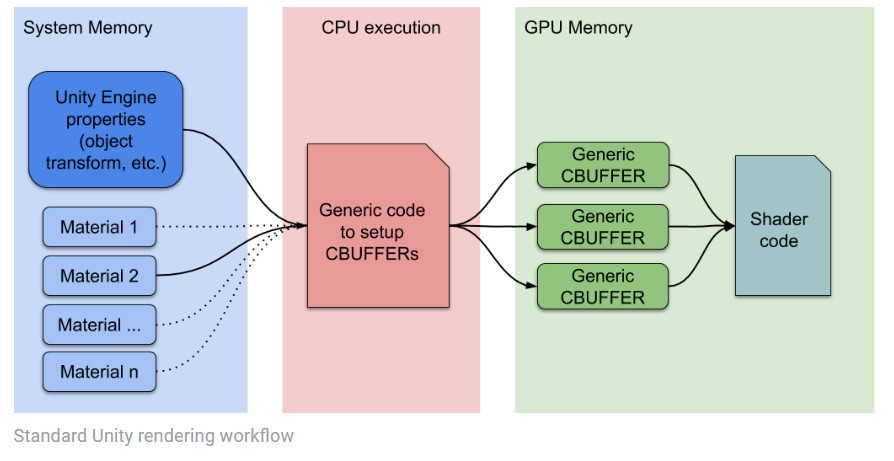

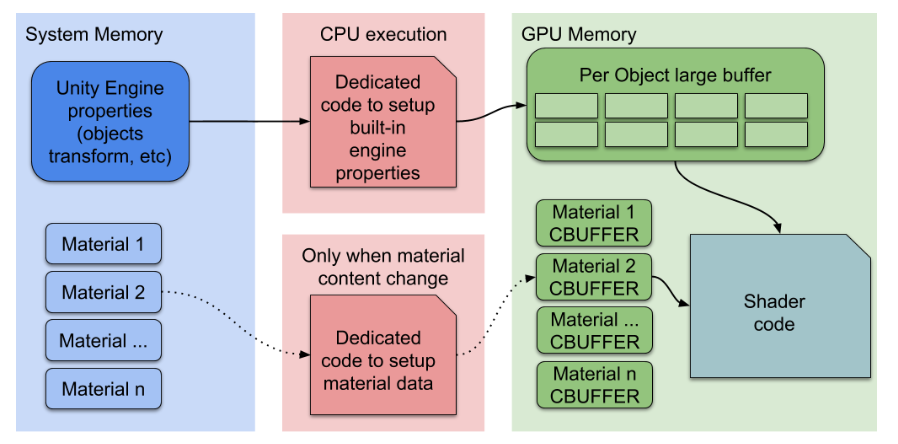

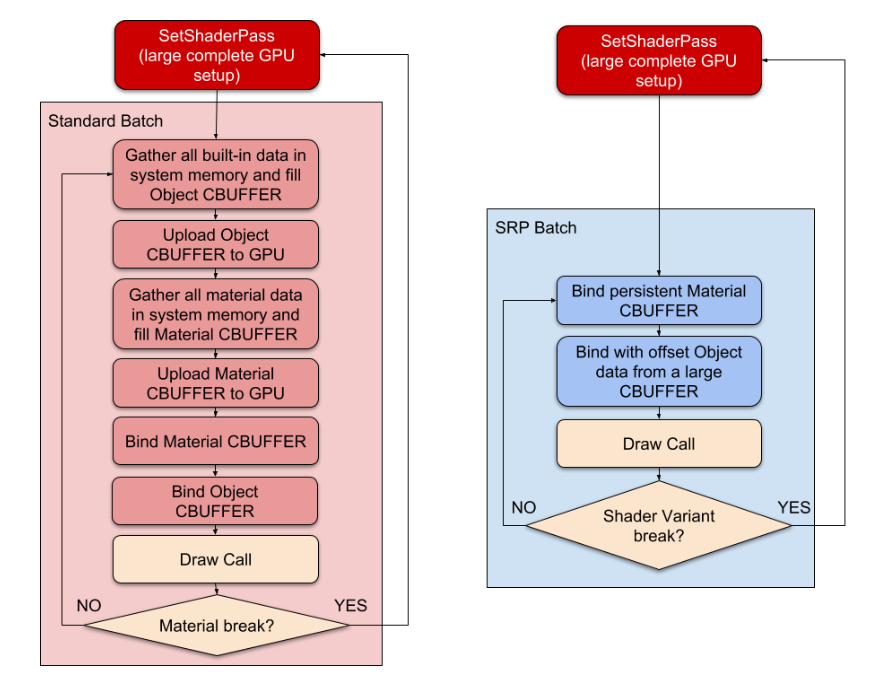

- Unity Built-in Render Pipeline

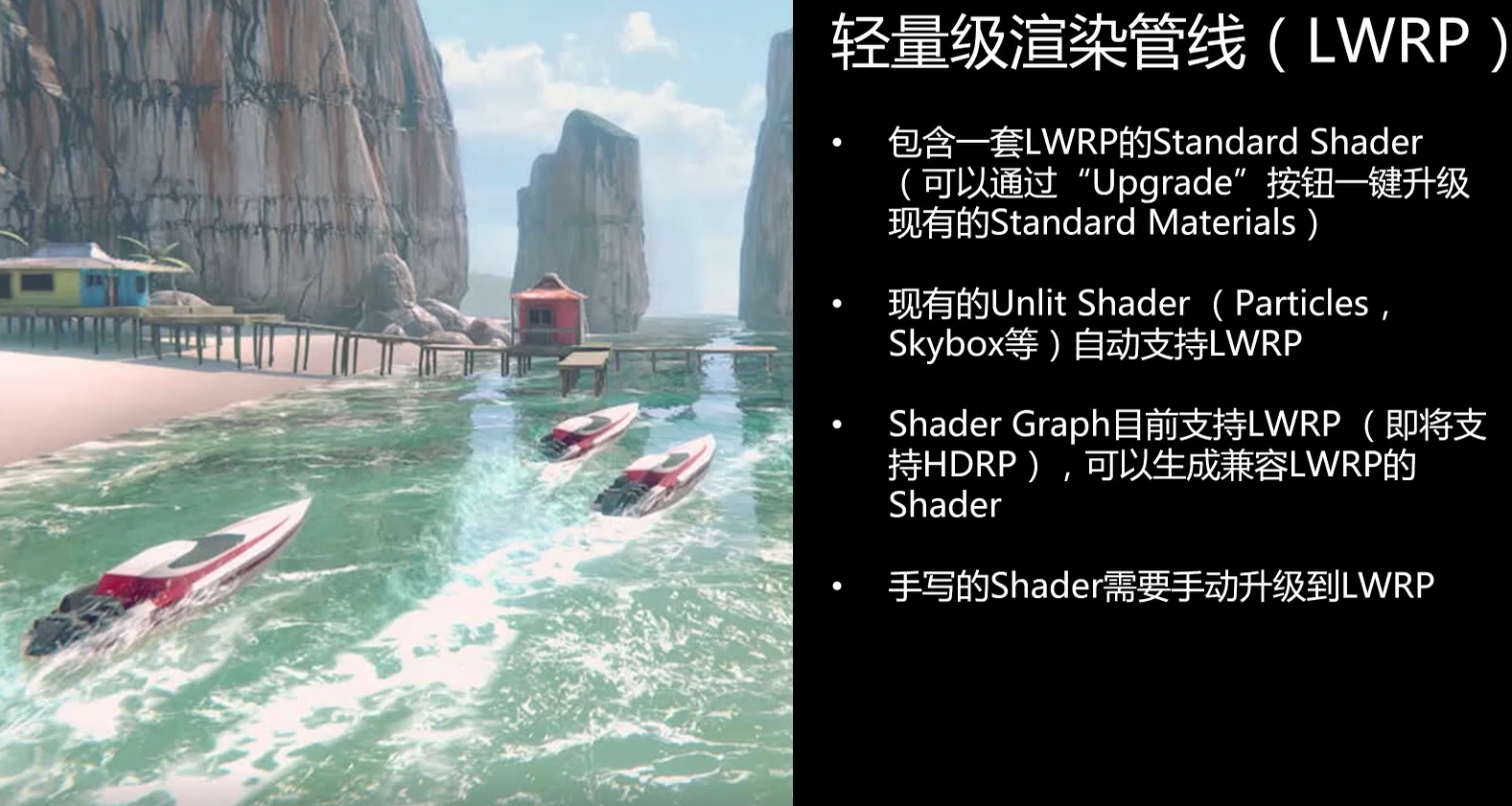

- LWRP

- LWRP Release(2019.1.0f2)

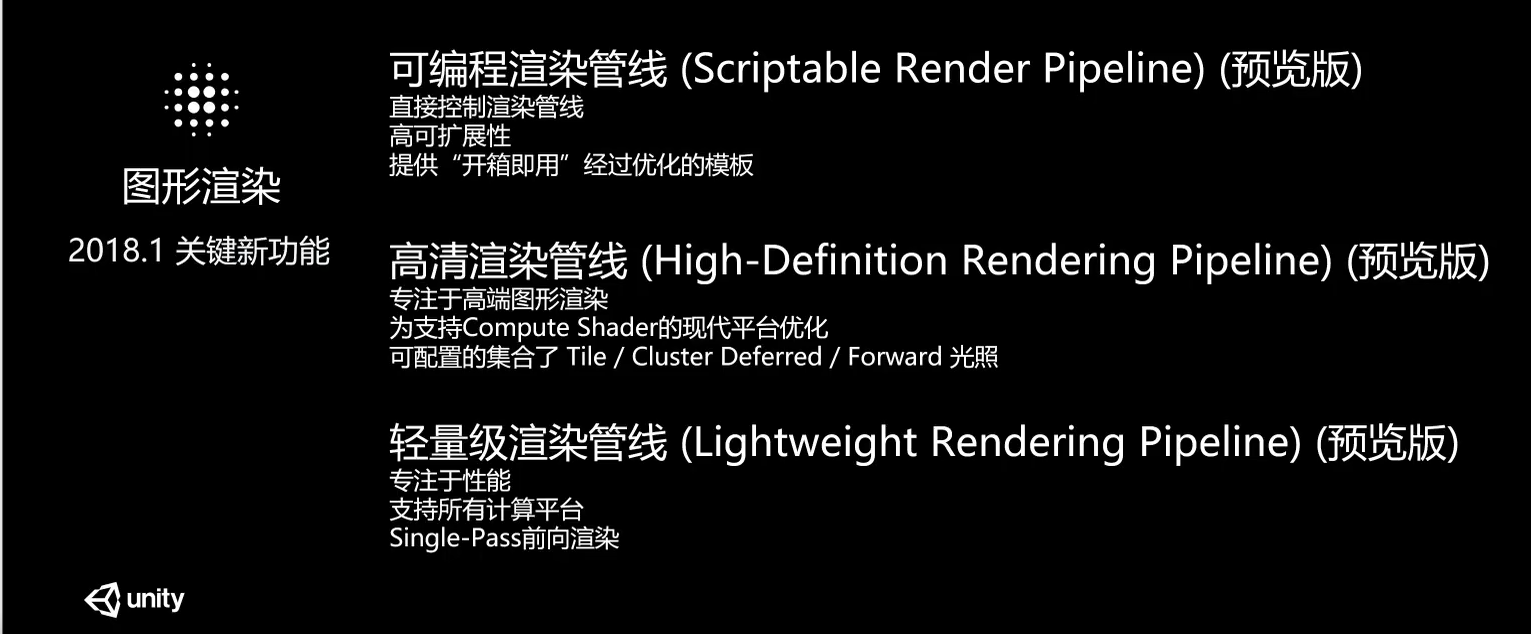

- Scriptable Render Pipeline Overview

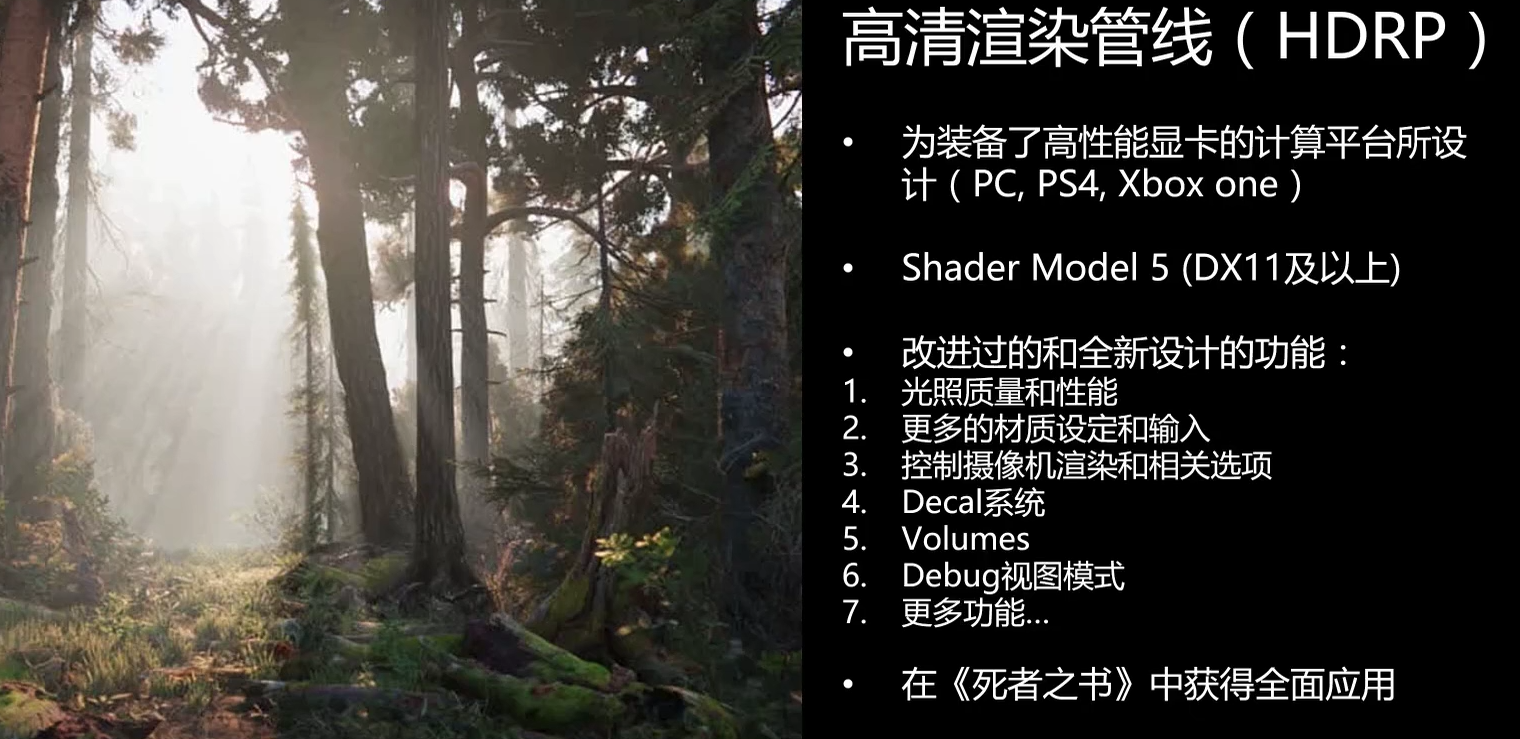

- HDRP

- DIY-PatrickDeferredRP from HDRP

- Advantage Of SRP

I mentioned SRP earlier when discussing PostProcessStackV2, but at that point I only covered CommandBuffer in detail, and SRP is far more complex than a Command Buffer.

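For example, suppose 10 objects need to be drawn. Unity decides the draw order by its own rules. In forward rendering it first draws opaque objects front to back by Z, then alpha-tested objects, then the skybox, then transparent objects back to front by Z, and finally overlay objects. Deferred rendering follows a different fixed set of rules. A Command Buffer cannot reorder any of this; it can only inject commands at certain points in the middle of the forward or deferred path. SRP, in contrast, can ignore Unity's built-in forward and deferred rules entirely and define its own rules in C#. According to people at Unity, SRP will be the main direction of development and matters to Unity's future, while the traditional forward and deferred paths will gradually receive less attention (in some cases the traditional pipeline even runs into performance problems; a tailored pipeline generally beats a general-purpose one). Unity's future graphics tooling will no longer support the traditional built-in pipeline: Shader Graph, for instance, works only with SRP (LWRP from 2018.1, HDRP from 2018.2), and the same goes for decals in HDRP. SRP does have a learning curve, though, so the traditional forward and deferred paths will not be dropped yet. As before, I will open today's topic with the same line: SRP is what separates the rendering engineers of in-house engines from ordinary rendering engineers.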
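The forward draw order described above can be sketched as a sort key. This is a plain Python model, not Unity API: the `Item` class, the queue labels and the sample scene are all hypothetical names made up for illustration.

```python
from dataclasses import dataclass

# Queue buckets in the order the text lists them; the labels are illustrative.
QUEUE_ORDER = ["opaque", "alpha_test", "skybox", "transparent", "overlay"]


@dataclass
class Item:
    name: str
    queue: str  # one of QUEUE_ORDER
    z: float    # distance from the camera (larger means farther away)


def forward_draw_order(items):
    """Return item names in the forward-path order described in the text."""
    def key(item):
        bucket = QUEUE_ORDER.index(item.queue)
        # Transparent objects are drawn back to front, everything else front to back.
        depth = -item.z if item.queue == "transparent" else item.z
        return (bucket, depth)
    return [item.name for item in sorted(items, key=key)]


scene = [
    Item("glass_far", "transparent", 9.0),
    Item("rock_far", "opaque", 8.0),
    Item("sky", "skybox", 0.0),
    Item("glass_near", "transparent", 2.0),
    Item("rock_near", "opaque", 1.0),
    Item("fence", "alpha_test", 3.0),
    Item("hud", "overlay", 0.0),
]
print(forward_draw_order(scene))
# ['rock_near', 'rock_far', 'fence', 'sky', 'glass_far', 'glass_near', 'hud']
```

A Command Buffer can only hook in between these buckets, whereas an SRP is free to replace the key function altogether.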

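First, a summary of an official Unity livestream titled "Unity 2018.1新功能解析大串烧" (a roundup of the new features in Unity 2018.1): livestream link, recording link.

Unity provides two examples: LWRP (targets mobile and VR/AR/MR, with hardware performance and battery life in mind) and HDRP (targets PC, Xbox One and PS4; supports subsurface scattering for skin, volumetric lighting, clearcoat and iridescence, plus layered materials, which work like detail maps and are used to show fine detail; hair and fluids are planned for 2018.3).

LWRP or HDRP can be installed through the Package Manager. Afterwards the project contains the LWRP/HDRP csproj, and the corresponding DLLs appear under Library/ScriptAssemblies.

Create your own SRP asset via Assets->Create->Rendering, then choose which SRP asset to use under Graphics in Project Settings.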

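Unity Built-in Render Pipeline

Before getting into SRP, a few words on forward and deferred rendering. What follows is a translation of an article (link to the original).

In forward rendering every object is drawn individually, and each object has to account for its interaction with the lights in the scene. If the scene has 10 objects and 5 lights, and every object is lit by all 5 lights, the work amounts to 10*5=50 passes. Culling can reduce the number of objects and lights, but that treats the symptom rather than the cause, so most engines cap the number of pixel lights and vertex lights. The traditional fixed-function pipeline allows at most 8 dynamic lights. Even on today's graphics hardware the number of dynamic lights does not exceed about 100.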

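In deferred rendering all objects are drawn into a set of 2D images that store the geometric information needed for lighting, to be used in the lighting passes that follow. This information includes screen-space depth, normal, diffuse, specular color and specular power. These images are called the G-Buffer (Geometric Buffer; the contents of RT0-3 can be inspected in Unity's Scene View and in the Frame Debugger). Anything else the lighting pass needs can also be stored in the G-Buffer, but at HD (1080p) a single 32-bit-per-pixel G-Buffer texture already takes about 8.29 MB. Once the G-Buffer has been created, each light is computed once, in one lighting pass.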
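The 8.29 MB figure can be checked with a little arithmetic. The sketch below is plain Python; the count of four targets is an assumption taken from the RT0-3 remark, not a statement about any particular G-Buffer layout.

```python
def render_target_bytes(width, height, bits_per_pixel):
    """Size in bytes of one full-screen render target."""
    return width * height * bits_per_pixel // 8


one_target = render_target_bytes(1920, 1080, 32)
print(one_target / 1e6)        # 8.2944 (decimal MB for one 32-bit target)

four_targets = 4 * one_target  # assumption: four such targets (RT0-3)
print(four_targets / 1e6)      # 33.1776
```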

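The first advantage of deferred rendering is that it removes the lighting cost problem of forward rendering: each additional dynamic light adds only one lighting pass, which is fine as long as the lights do not cast shadows. On today's graphics hardware an HD (1080p) target can handle roughly 2500 dynamic lights when only opaque objects are drawn. The second advantage is that screen-space shadow maps and many post effects need a depth texture, and deferred rendering already produces one while building the G-Buffer, so no extra work is required. The third advantage is that reflection probes are applied per pixel, so reflections do not spill past object boundaries.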
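A rough cost model makes the contrast concrete. This is a simplified Python sketch that follows only the counting used in the text (one pass per object per light for forward; one geometry draw per object plus one lighting pass per light for deferred). It ignores culling, shadows and bandwidth, and the function names are made up for illustration.

```python
def forward_passes(objects, lights):
    # Worst case from the text: every object is lit by every light.
    return objects * lights


def deferred_passes(objects, lights):
    # One G-Buffer draw per object, then one lighting pass per light.
    return objects + lights


print(forward_passes(10, 5))   # 50
print(deferred_passes(10, 5))  # 15
# Adding one more light costs 10 extra passes in forward, but only 1 in deferred.
print(forward_passes(10, 6) - forward_passes(10, 5))    # 10
print(deferred_passes(10, 6) - deferred_passes(10, 5))  # 1
```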

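Deferred rendering has drawbacks too. First, the G-Buffer consumes a lot of memory and bandwidth. Second, only opaque objects can be rendered into the G-Buffer: a single screen pixel may be covered by several transparent objects, but the G-Buffer can store only one value per pixel, so transparent objects cannot take part in the lighting pass and have to be drawn with standard forward rendering. Third, only one lighting model can be used, because only one shader can be bound while the lighting pass is drawn. (In Unity, directional lights use the Internal-DeferredShading shader, which reads the geometry data from the buffers, includes UnityDeferredLibrary to configure the light, and then computes lighting much like a forward shader. For a spot light, only the part of its cone that is lit, visible and unoccluded gets rendered, and the lighting calculation runs for each fragment of the cone that is rendered. To avoid rendering unnecessary fragments, the cone is first rendered with the Internal-StencilWrite shader; this pass writes to the stencil buffer, which is later used to mask which fragments need to be rendered. The only case where this technique cannot be used is when the volume lit by the spot light intersects the camera's near plane. Point lights use the same approach, except that the lit volume is a sphere instead of a cone.) If your rendering uses multiple shaders that combine several lighting models, deferred rendering cannot be used. (Patrick: I had not noticed this before. So even with multiple dynamic lights, you have to pick either Blinn-Phong or PBR?) Fourth, MSAA is not supported, so anti-aliasing has to be done in post-processing. Fifth, if some opaque objects only support forward rendering, a depth pass is drawn before those objects in order to compute their shadows; and because the depth of those objects never enters the G-Buffer, they cannot take part in post effects either.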

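Other differences between deferred and forward rendering: 1. in non-HDR mode, deferred rendering needs an extra exp2 + log2 computation; 2. reflection probes cover a different range (this can be worked around by setting Deferred Reflections to No Support in deferred rendering, in which case reflections are computed per object and written into the emission term).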

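Besides forward and deferred rendering there is also forward+ (tiled forward shading). Forward+ combines the forward pipeline with tiled light culling, which reduces the number of lights the shader has to evaluate. It has two stages: 1. light culling, 2. forward rendering. The first pass uses a screen-space grid of tiles to sort the lights into per-tile lists. The second pass draws every object in the scene with standard forward rendering, but instead of iterating over all dynamic lights in the scene, it uses the current pixel's screen-space coordinates to look up the light list of its tile, as computed in the first pass. Light culling therefore lets per-pixel lighting skip lights that certainly cannot contribute, which improves performance. (Patrick: in Unity the pixel light count stays below 4, though. I do not know whether Unity already does something like this, or whether it still iterates over every light for each object and trims the list afterwards.) Like forward, forward+ handles both opaque and transparent objects and can use multiple lighting models. Anything that uses the forward pipeline can be moved to forward+. Reportedly, on today's hardware an HD (1080p) target using forward+ can handle 5000-6000 dynamic lights.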
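The two stages of forward+ can be sketched on the CPU. This is a minimal Python model under stated assumptions: lights are reduced to a screen-space centre and radius, the overlap test uses the light's bounding square rather than a per-tile frustum test, and `TILE`, `ScreenLight`, `build_tile_lists` and `lights_for_pixel` are hypothetical names. Real implementations typically run this on the GPU.

```python
from dataclasses import dataclass

TILE = 16  # tile edge in pixels; an assumed value for illustration


@dataclass
class ScreenLight:
    name: str
    x: float       # screen-space centre in pixels
    y: float
    radius: float  # screen-space radius of influence in pixels


def build_tile_lists(lights, width, height, tile=TILE):
    """Stage 1 (light culling): list the lights whose bounds overlap each tile."""
    cols = (width + tile - 1) // tile
    rows = (height + tile - 1) // tile
    grid = {(c, r): [] for c in range(cols) for r in range(rows)}
    for light in lights:
        c0 = max(int((light.x - light.radius) // tile), 0)
        c1 = min(int((light.x + light.radius) // tile), cols - 1)
        r0 = max(int((light.y - light.radius) // tile), 0)
        r1 = min(int((light.y + light.radius) // tile), rows - 1)
        for c in range(c0, c1 + 1):
            for r in range(r0, r1 + 1):
                grid[(c, r)].append(light.name)
    return grid


def lights_for_pixel(grid, px, py, tile=TILE):
    """Stage 2 (forward shading): only the pixel's own tile list is visited."""
    return grid[(px // tile, py // tile)]


lights = [ScreenLight("a", 8, 8, 4), ScreenLight("b", 40, 40, 10)]
grid = build_tile_lists(lights, 64, 64)
print(lights_for_pixel(grid, 5, 5))    # ['a']
print(lights_for_pixel(grid, 35, 35))  # ['b']
print(lights_for_pixel(grid, 20, 5))   # []
```

The pixel at (20, 5) evaluates no lights at all, which is where the saving over a plain forward loop comes from.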

LWRP

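- Yellow: will never be supported; blue: in development

- Supports HDR, MSAA, Dynamic Resolution and Depth

- Depth+Normal Texture and Motion Vectors not supported

- Single-pass forward. Supports 1 directional light, 4 lights per object, 16 per camera

- SH (spherical harmonics) lights not supported

- Real-time shadows from point lights not supported

- Supports static batching, dynamic batching and GPU instancing

- Dynamic batching for shadows not supported

- Supports linear and gamma color spaces

- Supports Enlighten and Progressive CPU/GPU; real-time Enlighten not supported

- Supports Mixed Lighting Subtractive; Baked Indirect and Shadowmask not supported

- Supports Baked AO; Realtime AO not supported

- Supports Linear, Exponential and Exponential Squared fog

- Supports Billboard Renderer and Trail Renderer

- Halo, Lens Flare and Projector not supported

- Supports Shader Graph; Surface Shaders not supported

- Supports part of PPS; AO, Motion Blur and SSR not supported

- Supports RBS for terrain; SpeedTree not supported

- UI: supports Screen Space - Overlay, World Space and TextMesh Pro; Screen Space - Camera not supported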

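Right, down to business. Let's look at the two templates Unity provides, starting with LWRP. LWRP has a single forward rendering pass and performs light culling per object; the advantage is that all lighting is computed in one pass. Compared with Unity's built-in forward rendering (one pass per light), LWRP issues fewer draw calls at the cost of a slightly more complex shader. LWRP supports up to 8 lights per object (all of them can be pixel lights), but its features are only a subset of the default render pipeline; see the link for details. If you would rather not read English there is also a Chinese version, but it is an article and is not updated regularly; most of it still appears to hold, though a few parts are already out of date. There is also an LWRP demo here.

The SRP source can be downloaded from GitHub and includes LWRP's shaders and C# files. Let's first read through the shaders LWRP provides.

LWRP needs its own shaders. The SubShader Tags must set RenderPipeline to LightweightPipeline, but once that is set the SubShader no longer works with Unity's built-in pipeline; built-in support then has to come from another SubShader or from a fallback. If the shader needs lighting, the pass tags must set LightMode to LightweightForward, and this name has to match LightweightPipeline.cs. If no lighting is needed, the tag can be left unset.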

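The LightweightPipeline/Standard (Physically Based) shader differs little from an ordinary PBR shader. Broadly there is a single difference: LWRP computes its multiple pixel lights in one pass. Both support normal maps, alpha test, premultiplied alpha, emission, the metallic workflow, the specular workflow, roughness/smoothness, AO, shadows, lightmaps, vertex lights, fog and instancing. The VS inputs are vertex position, normal, tangent, uv, uv2 (lightmap UV) and instance ID. The VS outputs are uv, lightmap UV or SH data, world-space position, the three vectors needed for normal mapping, view direction, fog, vertex lighting, shadow coordinates, clip-space position and instance ID. The PS differs in one place: after the usual GI and direct lighting, a loop computes direct lighting for the remaining pixel lights and accumulates it into the final color. Then the diffuse term from the vertex lights computed in the VS, multiplied by the material diffuse, is added, emission is added, and finally Unity's built-in fog is applied to give the final color.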
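The accumulation order in the pixel shader can be modeled in a few lines. This is a deliberately simplified Python sketch, not the LWRP shader: it uses a diffuse-only Lambert term in place of the PBR BRDF, leaves out fog, and every name in it is hypothetical.

```python
def lambert(normal, light_dir):
    """Clamped N.L for two unit vectors."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


def shade_single_pass(albedo, normal, main_light, additional_lights,
                      gi, vertex_light, emission):
    """Accumulate every pixel light inside one shading call.

    Each light is a (direction, color, attenuation) tuple.
    """
    color = [gi[i] * albedo[i] for i in range(3)]  # indirect (GI) term
    # Main light first, then the loop over the remaining pixel lights.
    for direction, light_color, atten in [main_light] + list(additional_lights):
        n_dot_l = lambert(normal, direction)
        for i in range(3):
            color[i] += albedo[i] * light_color[i] * n_dot_l * atten
    # Vertex-light diffuse times material diffuse, then emission.
    for i in range(3):
        color[i] += vertex_light[i] * albedo[i] + emission[i]
    return color


up = (0.0, 1.0, 0.0)
result = shade_single_pass(
    albedo=(1.0, 1.0, 1.0),
    normal=up,
    main_light=(up, (1.0, 0.0, 0.0), 1.0),
    additional_lights=[(up, (0.0, 0.5, 0.0), 1.0)],
    gi=(0.1, 0.1, 0.1),
    vertex_light=(0.0, 0.0, 0.2),
    emission=(0.0, 0.0, 0.0),
)
print([round(c, 3) for c in result])  # [1.1, 0.6, 0.3]
```

In the built-in forward path each additional light would instead trigger another pass over the object, which is the draw-call cost LWRP trades for a slightly heavier shader.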

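LightweightPipeline/Standard (Simple Lighting) is largely the same as LightweightPipeline/Standard (Physically Based), with two differences: 1. the former only has the specular workflow; 2. the former uses Blinn-Phong.

LightweightPipeline/Standard Unlit is simpler still, though interestingly the Unlit version can still opt into GI (lightmap, SH) and a normal map (used for the GI calculation).

LWRP also has particle and terrain shaders, but they are much the same, so I will skip them and move on to the core of LWRP: the render pipeline written in C#. The LWRP version I am reading is AppData\Local\Unity\cache\packages\packages.unity.com\com.unity.render-pipelines.lightweight@1.1.11-preview. This is where the code the project currently uses lives, and it can be updated along with Unity releases. The two C# files that stand out at first glance are LightweightPipeline.cs and LightweightPipelineAsset.cs. LightweightPipelineAsset.cs has little of interest: it is just a serialized asset that stores the developer's configuration. If you only want to use LWRP, create your own LightweightPipelineAsset and set a few parameters. The code that actually acts on those parameters is in LightweightPipeline.cs. Start with its constructor, which takes a LightweightPipelineAsset as its parameter: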

public LightweightPipeline(LightweightPipelineAsset asset)

{

m_Asset = asset;

BuildShadowSettings();

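//Set up the shadow settings. Based on the graphics API (OpenGL ES2 uses non-screen-space shadows, everything else uses screen-space) and on the user's asset parameters, this sets the cascade count (directionalLightCascadeCount), the distance of each cascade (directionalLightCascades), the shadow map size (shadowAtlasWidth, shadowAtlasHeight), the shadow distance (maxShadowDistance), the shadow map format (shadowmapTextureFormat) and the screen-space shadow map format (screenspaceShadowmapTextureFormat).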

SetRenderingFeatures();

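//The documentation above already lists the differences between LWRP and the built-in pipeline, but let's confirm them in code. LightweightPipeline.cs shows that motion vectors are not supported (so true motion blur is not possible) and that lightmap support is incomplete (which lightmap modes are missing needs further checking).

//Scene View support is incomplete too; display modes such as alpha channel are not supported.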

PerFrameBuffer._GlossyEnvironmentColor = Shader.PropertyToID("_GlossyEnvironmentColor");

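//The shader shows that indirect diffuse comes from lightmaps and SH, and indirect specular from reflection probes. If GLOSSY_REFLECTIONS is turned off, i.e. specular does not use reflection probes, the indirect specular term is the constant _GlossyEnvironmentColor multiplied by AO.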

PerFrameBuffer._SubtractiveShadowColor = Shader.PropertyToID("_SubtractiveShadowColor");

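//With _MIXED_LIGHTING_SUBTRACTIVE, the shadow color is bakedGI - main light Lambert * (1 - atten), where atten is 1 when the main light is not shadowed. The smaller this value, the darker the shadow; at 0 the shadow is black. To keep shadows from getting too dark, set _SubtractiveShadowColor: the computed shadow color is compared with it and the larger value is used.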

// Lights are culled per-camera. Therefore we need to reset light buffers on each camera render

PerCameraBuffer._MainLightPosition = Shader.PropertyToID("_MainLightPosition");

PerCameraBuffer._MainLightColor = Shader.PropertyToID("_MainLightColor");

PerCameraBuffer._MainLightDistanceAttenuation = Shader.PropertyToID("_MainLightDistanceAttenuation");

PerCameraBuffer._MainLightSpotDir = Shader.PropertyToID("_MainLightSpotDir");

PerCameraBuffer._MainLightSpotAttenuation = Shader.PropertyToID("_MainLightSpotAttenuation");

PerCameraBuffer._MainLightCookie = Shader.PropertyToID("_MainLightCookie");

PerCameraBuffer._WorldToLight = Shader.PropertyToID("_WorldToLight");

PerCameraBuffer._AdditionalLightCount = Shader.PropertyToID("_AdditionalLightCount");

PerCameraBuffer._AdditionalLightPosition = Shader.PropertyToID("_AdditionalLightPosition");

PerCameraBuffer._AdditionalLightColor = Shader.PropertyToID("_AdditionalLightColor");

PerCameraBuffer._AdditionalLightDistanceAttenuation = Shader.PropertyToID("_AdditionalLightDistanceAttenuation");

PerCameraBuffer._AdditionalLightSpotDir = Shader.PropertyToID("_AdditionalLightSpotDir");

PerCameraBuffer._AdditionalLightSpotAttenuation = Shader.PropertyToID("_AdditionalLightSpotAttenuation");

PerCameraBuffer._ScaledScreenParams = Shader.PropertyToID("_ScaledScreenParams");

ShadowConstantBuffer._WorldToShadow = Shader.PropertyToID("_WorldToShadow");

ShadowConstantBuffer._ShadowData = Shader.PropertyToID("_ShadowData");

ShadowConstantBuffer._DirShadowSplitSpheres = Shader.PropertyToID("_DirShadowSplitSpheres");

ShadowConstantBuffer._DirShadowSplitSphereRadii = Shader.PropertyToID("_DirShadowSplitSphereRadii");

ShadowConstantBuffer._ShadowOffset0 = Shader.PropertyToID("_ShadowOffset0");

ShadowConstantBuffer._ShadowOffset1 = Shader.PropertyToID("_ShadowOffset1");

ShadowConstantBuffer._ShadowOffset2 = Shader.PropertyToID("_ShadowOffset2");

ShadowConstantBuffer._ShadowOffset3 = Shader.PropertyToID("_ShadowOffset3");

ShadowConstantBuffer._ShadowmapSize = Shader.PropertyToID("_ShadowmapSize");

m_ShadowMapRTID = Shader.PropertyToID("_ShadowMap");

m_ScreenSpaceShadowMapRTID = Shader.PropertyToID("_ScreenSpaceShadowMap");

CameraRenderTargetID.color = Shader.PropertyToID("_CameraColorRT");

CameraRenderTargetID.copyColor = Shader.PropertyToID("_CameraCopyColorRT");

CameraRenderTargetID.depth = Shader.PropertyToID("_CameraDepthTexture");

CameraRenderTargetID.depthCopy = Shader.PropertyToID("_CameraCopyDepthTexture");

m_ScreenSpaceShadowMapRT = new RenderTargetIdentifier(m_ScreenSpaceShadowMapRTID);

//Render targets. Several RTs are created, for ScreenSpaceShadowmap, Color, CopyColor, DepthTexture and CopyDepthTexture.

m_ColorRT = new RenderTargetIdentifier(CameraRenderTargetID.color);

m_CopyColorRT = new RenderTargetIdentifier(CameraRenderTargetID.copyColor);

m_DepthRT = new RenderTargetIdentifier(CameraRenderTargetID.depth);

m_CopyDepth = new RenderTargetIdentifier(CameraRenderTargetID.depthCopy);

m_PostProcessRenderContext = new PostProcessRenderContext();

//SRP works together with PPS (the post-processing stack), so a PPS context is created.

m_CopyTextureSupport = SystemInfo.copyTextureSupport;

for (int i = 0; i < kMaxCascades; ++i)

m_DirectionalShadowSplitDistances[i] = new Vector4(0.0f, 0.0f, 0.0f, 0.0f);

m_DirectionalShadowSplitRadii = new Vector4(0.0f, 0.0f, 0.0f, 0.0f);

// Let engine know we have MSAA on for cases where we support MSAA backbuffer

if (QualitySettings.antiAliasing != m_Asset.MSAASampleCount)

QualitySettings.antiAliasing = m_Asset.MSAASampleCount;

Shader.globalRenderPipeline = "LightweightPipeline";

m_BlitQuad = LightweightUtils.CreateQuadMesh(false);

//Create a four-vertex mesh (a quad).

m_BlitMaterial = CoreUtils.CreateEngineMaterial(m_Asset.BlitShader);

//blitShader: blits the input texture to dest, with ZTest Always and ZWrite Off.

m_CopyDepthMaterial = CoreUtils.CreateEngineMaterial(m_Asset.CopyDepthShader);

//copyDepthShader: writes the depth blitted from DepthAttachment into dest, with ColorMask 0, ZTest Always and ZWrite On.

m_ScreenSpaceShadowsMaterial = CoreUtils.CreateEngineMaterial(m_Asset.ScreenSpaceShadowShader);

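//screenSpaceShadowShader: reads the depth of a point from _CameraDepthTexture, converts it to view space, then to world space, then to shadow space (i.e. light space) to obtain a 3D coordinate. The XY of that coordinate is used to sample the 2D texture _DirectionalShadowmapTexture for the shadow information at that point, i.e. the stored depth, which is compared with the coordinate's z to yield 0 (in shadow) or 1 (not in shadow). For soft shadows on mobile, the four surrounding samples are fetched and averaged. If the 3D coordinate lies beyond the far clip plane, 1 (not in shadow) is returned.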

m_ErrorMaterial = CoreUtils.CreateEngineMaterial("Hidden/InternalErrorShader");

}
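The subtractive shadow rule annotated in the constructor above (subtract the shadowed part of the main light from the baked GI, then clamp against `_SubtractiveShadowColor`) can be written out per channel. This Python sketch covers only the two steps the annotation names; the function and argument names are hypothetical and the real shader may apply further adjustments.

```python
def subtractive_shadow(baked_gi, main_light_lambert, atten, shadow_floor):
    """Per channel: max(bakedGI - lambert * (1 - atten), shadow floor color)."""
    return tuple(
        max(gi - lam * (1.0 - atten), floor)
        for gi, lam, floor in zip(baked_gi, main_light_lambert, shadow_floor)
    )


baked = (0.8, 0.8, 0.8)
lam = (0.6, 0.6, 0.6)
floor = (0.3, 0.3, 0.3)  # stands in for _SubtractiveShadowColor

# atten = 1: the main light is not shadowed, so the baked GI is left untouched.
print(subtractive_shadow(baked, lam, 1.0, floor))  # (0.8, 0.8, 0.8)
# atten = 0: fully shadowed; 0.8 - 0.6 would drop below the floor, so it clamps.
print(subtractive_shadow(baked, lam, 0.0, floor))  # (0.3, 0.3, 0.3)
```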

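The constructor is still just preparing the environment: it sets some properties, then creates the materials and RTs. Next up is the Render function (Defines custom rendering for this RenderPipeline.)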

public override void Render(ScriptableRenderContext context, Camera[] cameras)

{

base.Render(context, cameras);

RenderPipeline.BeginFrameRendering(cameras);

//Call the delegate used during SRP rendering before a render begins.

GraphicsSettings.lightsUseLinearIntensity = true;

//Light intensities are applied in linear space

SetupPerFrameShaderConstants();

//Set the per-frame shader constants. _GlossyEnvironmentColor is set from RenderSettings.ambientProbe (Custom or skybox ambient lighting data.) and RenderSettings.reflectionIntensity (How much the skybox / custom cubemap reflection affects the scene.). _SubtractiveShadowColor is set from RenderSettings.subtractiveShadowColor (The color used for the sun shadows in the Subtractive lightmode.).

// Sort cameras array by camera depth

Array.Sort(cameras, m_CameraComparer);

//Sort the cameras by their depth

foreach (Camera camera in cameras)

{

m_CurrCamera = camera;

bool sceneViewCamera = m_CurrCamera.cameraType == CameraType.SceneView;

bool stereoEnabled = IsStereoEnabled(m_CurrCamera);

// Disregard variations around kRenderScaleThreshold.

m_RenderScale = (Mathf.Abs(1.0f - m_Asset.RenderScale) < kRenderScaleThreshold) ? 1.0f : m_Asset.RenderScale;

//Changing RenderScale changes the size of the RT the game is rendered into, so it can differ from the native RT size. UI is not affected by this parameter.

// XR has it's own scaling mechanism.

m_RenderScale = (m_CurrCamera.cameraType == CameraType.Game && !stereoEnabled) ? m_RenderScale : 1.0f;

m_IsOffscreenCamera = m_CurrCamera.targetTexture != null && m_CurrCamera.cameraType != CameraType.SceneView;

//A non-null targetTexture means the camera does not draw directly to the framebuffer but to an FBO, so it is an offscreen camera.

SetupPerCameraShaderConstants();

//Set the per-camera shader constants: the viewport after RenderScale is applied, _ScaledScreenParams

RenderPipeline.BeginCameraRendering(m_CurrCamera);

//Call the delegate used during SRP rendering before a single camera starts rendering.

ScriptableCullingParameters cullingParameters;

if (!CullResults.GetCullingParameters(m_CurrCamera, stereoEnabled, out cullingParameters))

continue;

var cmd = CommandBufferPool.Get("");

cullingParameters.shadowDistance = Mathf.Min(m_ShadowSettings.maxShadowDistance,

m_CurrCamera.farClipPlane);

#if UNITY_EDITOR

// Emit scene view UI

if (sceneViewCamera)

ScriptableRenderContext.EmitWorldGeometryForSceneView(camera);

#endif

CullResults.Cull(ref cullingParameters, context, ref m_CullResults);

//Culling is just this one call; how it actually culls is in the Unity source. Culling results (visible objects, lights, reflection probes).

List<VisibleLight> visibleLights = m_CullResults.visibleLights;

LightData lightData;

InitializeLightData(visibleLights, out lightData);

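//Gather light data from the lights that survived culling. Lights are sorted by LightRenderMode.ForcePixel, LightType.Directional, LightShadows, cookie, intensity and SquaredDistanceToCamera to decide which one is the main light. Then pixelAdditionalLightsCount and totalAdditionalLightsCount are determined.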

bool shadows = ShadowPass(visibleLights, ref context, ref lightData);

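//Draw the shadows. Only the following is visible at this level; the core of it is in the Unity source.

//1. Get the actual shadow map size based on the cascades.

//2. Create an RT as the output of the shadow pass, i.e. _ShadowMap.

//3. Use cullResults.ComputeDirectionalShadowMatricesAndCullingPrimitives to compute the V and P matrices, and from V and P compute worldToShadow, which is used to sample the shadow when screen-space shadow maps are off, or to compute the screen-space shadow map when they are on.

//4. Set _ShadowBias and _LightDirection, set the viewport and scissor, set the view and projection matrices from V and P (cmd.SetViewProjectionMatrices(view, proj);), then draw the shadows with context.DrawShadows(ref settings); to get m_ShadowMapRT, i.e. _ShadowMap (in light space).

//5. After drawing, record the shadow map and related parameters for the later shadow calculations.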

FrameRenderingConfiguration frameRenderingConfiguration;

SetupFrameRenderingConfiguration(out frameRenderingConfiguration, shadows, stereoEnabled, sceneViewCamera);

//Set up the render configuration for the current frame: Stereo, Msaa, BeforeTransparentPostProcess, PostProcess, DepthPrePass, DepthCopy, DefaultViewport, IntermediateTexture

SetupIntermediateResources(frameRenderingConfiguration, shadows, ref context);

//Set up the RTs according to the configuration (IntermediateTexture/m_RequireDepthTexture/DepthCopy/screenSpace/m_RequireCopyColor/m_IsOffscreenCamera/Stereo)

// SetupCameraProperties does the following:

// Setup Camera RenderTarget and Viewport

// VR Camera Setup and SINGLE_PASS_STEREO props

// Setup camera view, proj and their inv matrices.

// Setup properties: _WorldSpaceCameraPos, _ProjectionParams, _ScreenParams, _ZBufferParams, unity_OrthoParams

// Setup camera world clip planes props

// setup HDR keyword

// Setup global time properties (_Time, _SinTime, _CosTime)

context.SetupCameraProperties(m_CurrCamera, stereoEnabled);

if (LightweightUtils.HasFlag(frameRenderingConfiguration, FrameRenderingConfiguration.DepthPrePass))

DepthPass(ref context, frameRenderingConfiguration);

//Draw the depth

//1. Set m_DepthRT as the RT via cmd.SetRenderTarget(colorBuffer, depthBuffer, miplevel, cubemapFace, depthSlice); and cmd.ClearRenderTarget((clearFlag & ClearFlag.Depth) != 0, (clearFlag & ClearFlag.Color) != 0, clearColor); see the Unity source for the implementation.

//2. Set the current camera and the shader pass to use, m_DepthPrepass (DepthOnly).

//3. Set the sort order to SortFlags.CommonOpaque and the filter to RenderQueueRange.opaque (opaque objects only).

//4. If stereo is not enabled, call context.DrawRenderers(m_CullResults.visibleRenderers, ref opaqueDrawSettings, opaqueFilterSettings); to draw; see the Unity source for the implementation.

if (shadows && m_ShadowSettings.screenSpace)

ShadowCollectPass(visibleLights, ref context, ref lightData, frameRenderingConfiguration);

//If screen-space shadow maps are enabled, this pass generates the screen-space shadow map

//1. Set the shader keywords _SHADOWS_SOFT and _SHADOWS_CASCADE.

//2. Set m_ScreenSpaceShadowMapRT as the RT.

//3. Use the m_ScreenSpaceShadowsMaterial (ScreenSpaceShadows) shader, which reads the _CameraDepthTexture produced by DepthPass and the _ShadowMap produced by ShadowPass.

//4. Draw the screen-space shadow map with cmd.Blit(m_ScreenSpaceShadowMapRT, m_ScreenSpaceShadowMapRT, m_ScreenSpaceShadowsMaterial); the source argument is not actually used.

ForwardPass(visibleLights, frameRenderingConfiguration, ref context, ref lightData, stereoEnabled);

//Now the final result is actually drawn

//1. Set the light parameters (the main light's cookie texture, the main light's world-to-light matrix, and for all lights the position, color, attenuation, spot direction and spot attenuation).

//2. Set the shader variants: _MAIN_LIGHT_DIRECTIONAL, _MAIN_LIGHT_SPOT, _SHADOWS_ENABLED, _MAIN_LIGHT_COOKIE, _ADDITIONAL_LIGHTS, _MIXED_LIGHTING_SUBTRACTIVE, _VERTEX_LIGHTS, SOFTPARTICLES_ON, FOG_LINEAR, FOG_EXP2

//3. Set the renderer configuration: RendererConfiguration.PerObjectReflectionProbes, PerObjectLightmaps, PerObjectLightProbe, PerObjectLightIndices8

//4. BeginForwardRendering: set the RTs. When an intermediateTexture is required and the camera is not m_IsOffscreenCamera, the color RT is an FBO rather than the framebuffer; when an intermediateTexture is required and m_RequireDepthTexture is set, the depth RT is an FBO.

//5. Set clearFlag to ClearFlag.Depth/Color according to the camera settings.

//6. If rendering to an RT, set the viewport again.

//7. RenderOpaques: set the current camera and the pass names to use, m_LitPassName (LightweightForward) and m_UnlitPassName (SRPDefaultUnlit. Renders all shaders without a lightmode tag)

//8. Set the sort order to SortFlags.CommonOpaque and the filter to RenderQueueRange.opaque (opaque objects only).

//9. Call context.DrawRenderers(m_CullResults.visibleRenderers, ref opaqueDrawSettings, opaqueFilterSettings); to draw; see the Unity source for the implementation.

//10. Use the m_ErrorMaterial (Hidden/InternalErrorShader) material to draw opaque objects that use legacy shaders (Always, ForwardBase, PrepassBase, Vertex, VertexLMRGBM, VertexLM).

//11. Draw the skybox with context.DrawSkybox(m_CurrCamera);

//12. AfterOpaque: set the depth RT to the result of the earlier DepthPass.

//13. If BeforeTransparentPostProcess is set, manually call the PPSV2 API m_CameraPostProcessLayer.RenderOpaqueOnly(m_PostProcessRenderContext); to run post-processing, with m_CurrCameraColorRT as the final dest, then set m_CurrCameraColorRT as the RT.

//14. If FrameRenderingConfiguration.DepthCopy is set, copy m_DepthRT to m_CopyDepth via cmd.CopyTexture(sourceRT, destRT); or cmd.Blit(sourceRT, destRT, copyMaterial); where copyMaterial is the m_CopyDepthMaterial (CopyDepth) material, then set m_CopyDepth as the current depth RT.

//15. RenderTransparents: same as RenderOpaques. Set the current camera and the pass names to use, m_LitPassName (LightweightForward) and m_UnlitPassName (SRPDefaultUnlit. Renders all shaders without a lightmode tag)

//16. Set the sort order to SortFlags.CommonTransparent and the filter to RenderQueueRange.transparent (transparent objects only).

//17. Call context.DrawRenderers(m_CullResults.visibleRenderers, ref transparentSettings, transparentFilterSettings); to draw; see the Unity source for the implementation.

//18. Use the m_ErrorMaterial (Hidden/InternalErrorShader) material to draw transparent objects that use legacy shaders (Always, ForwardBase, PrepassBase, Vertex, VertexLMRGBM, VertexLM).

//19. AfterTransparent: if PostProcess is set, manually call the PPSV2 API m_CameraPostProcessLayer.Render(m_PostProcessRenderContext); to run post-processing, with BuiltinRenderTextureType.CameraTarget as dest, i.e. rendering to the screen.

//20. EndForwardRendering: in cases such as PostProcess being off. With DefaultViewport, m_CurrCameraColorRT is blitted to BuiltinRenderTextureType.CameraTarget with the blitMaterial (Blit) material via cmd.Blit(sourceRT, destRT, material);. Without DefaultViewport, the four-vertex mesh m_BlitQuad created earlier is used: set BuiltinRenderTextureType.CameraTarget as the RT, set identity matrices, set the viewport to m_CurrCamera.pixelRect, then draw m_CurrCameraColorRT to BuiltinRenderTextureType.CameraTarget with the blitMaterial (Blit) material via cmd.DrawMesh(m_BlitQuad, Matrix4x4.identity, material);.

//21. Finally, set BuiltinRenderTextureType.CameraTarget back as the RT.

cmd.name = "After Camera Render";

#if UNITY_EDITOR

if (sceneViewCamera)

CopyTexture(cmd, CameraRenderTargetID.depth, BuiltinRenderTextureType.CameraTarget, m_CopyDepthMaterial, true);

#endif

cmd.ReleaseTemporaryRT(m_ScreenSpaceShadowMapRTID);

cmd.ReleaseTemporaryRT(CameraRenderTargetID.depthCopy);

cmd.ReleaseTemporaryRT(CameraRenderTargetID.depth);

cmd.ReleaseTemporaryRT(CameraRenderTargetID.color);

cmd.ReleaseTemporaryRT(CameraRenderTargetID.copyColor);

context.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

//Finally, release the RTs

context.Submit();

//And last of all, call context.Submit();

if (m_ShadowMapRT)

{

RenderTexture.ReleaseTemporary(m_ShadowMapRT);

m_ShadowMapRT = null;

}

}

}
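The two `m_RenderScale` assignments in the loop above are easy to misread, so here is the same decision as a small Python function. The threshold value 0.05 is an assumption made for illustration (the listing only names `kRenderScaleThreshold`), and the function names are hypothetical.

```python
RENDER_SCALE_THRESHOLD = 0.05  # assumed value; the listing only names kRenderScaleThreshold


def effective_render_scale(asset_scale, is_game_camera, stereo_enabled,
                           threshold=RENDER_SCALE_THRESHOLD):
    """Snap scales close to 1.0, and only scale non-stereo game cameras."""
    scale = 1.0 if abs(1.0 - asset_scale) < threshold else asset_scale
    # XR has its own scaling mechanism, and non-game cameras are never scaled.
    return scale if (is_game_camera and not stereo_enabled) else 1.0


def scaled_size(width, height, scale):
    """Size of the RT the scene is rendered into before the final blit."""
    return int(width * scale), int(height * scale)


print(effective_render_scale(0.98, True, False))  # 1.0 (within the threshold)
print(effective_render_scale(0.5, True, False))   # 0.5
print(effective_render_scale(0.5, True, True))    # 1.0 (stereo)
print(effective_render_scale(0.5, False, False))  # 1.0 (not a game camera)
print(scaled_size(1920, 1080, 0.5))               # (960, 540)
```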

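Right after finishing this I hit an awkward snag: upgrading Unity to 2018.2 reveals that LWRP has already moved to version 3.0, with substantial changes. So, back to the LightweightPipeline.cs constructor, whose parameter is still a LightweightPipelineAsset: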

public LightweightPipeline(LightweightPipelineAsset asset)

{

pipelineAsset = asset;

SetSupportedRenderingFeatures();

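//The documentation above already lists the differences between LWRP and the built-in pipeline, but let's confirm them in code. LightweightPipeline.cs shows that motion vectors are not supported (so true motion blur is not possible) and that lightmap support is incomplete (which lightmap modes are missing needs further checking).

//Scene View support is incomplete too; display modes such as alpha channel are not supported.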

SetPipelineCapabilities(asset);

//Based on the SRP asset settings, enable or disable AdditionalLights, VertexLights, DirectionalShadows, LocalShadows and SoftShadows.

PerFrameBuffer._GlossyEnvironmentColor = Shader.PropertyToID("_GlossyEnvironmentColor");

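//The shader shows that indirect diffuse comes from lightmaps and SH, and indirect specular from reflection probes. If GLOSSY_REFLECTIONS is turned off, i.e. specular does not use reflection probes, the indirect specular term is the constant _GlossyEnvironmentColor multiplied by AO.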

PerFrameBuffer._SubtractiveShadowColor = Shader.PropertyToID("_SubtractiveShadowColor");

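//With _MIXED_LIGHTING_SUBTRACTIVE, the shadow color is bakedGI - main light Lambert * (1 - atten), where atten is 1 when the main light is not shadowed. The smaller this value, the darker the shadow; at 0 the shadow is black. To keep shadows from getting too dark, set _SubtractiveShadowColor: the computed shadow color is compared with it and the larger value is used.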

SetupLightweightConstanstPass.PerCameraBuffer._ScaledScreenParams = Shader.PropertyToID("_ScaledScreenParams");

//Set the viewport size.

m_Renderer = new LightweightForwardRenderer(asset);

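//This is the big one and one of the cores of SRP: creating a renderer. It contains:

//1. Materials.

//I. copyDepthShader: writes the depth blitted from DepthAttachment into dest, with ColorMask 0, ZTest Always and ZWrite On.

//II. samplingShader: blits the input texture to dest with downsampling; the downsample ratio is configurable; ZTest Always and ZWrite Off.

//III. blitShader: like samplingShader but without downsampling; blits straight to dest; ZTest Always and ZWrite Off.

//IV. screenSpaceShadowShader: reads the depth of a point from _CameraDepthTexture, converts it to view space, then to world space, then to shadow space (i.e. light space) to obtain a 3D coordinate. The XY of that coordinate is used to sample the 2D texture _DirectionalShadowmapTexture for the shadow information at that point, i.e. the stored depth, which is compared with the coordinate's z to yield 0 (in shadow) or 1 (not in shadow). For soft shadows on mobile, the four surrounding samples are fetched and averaged. If the 3D coordinate lies beyond the far clip plane, 1 (not in shadow) is returned.

//2. PostProcessRenderContext. SRP works together with PPS, so a PPS context is created.

//3. FilterRenderersSettings. Filter settings for opaque and transparent objects.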

// Let engine know we have MSAA on for cases where we support MSAA backbuffer

if (QualitySettings.antiAliasing != pipelineAsset.msaaSampleCount)

QualitySettings.antiAliasing = pipelineAsset.msaaSampleCount;

Shader.globalRenderPipeline = "LightweightPipeline";

m_IsCameraRendering = false;

}

Next, on to the Render function again (Defines custom rendering for this RenderPipeline.)

public override void Render(ScriptableRenderContext context, Camera[] cameras)

{

if (m_IsCameraRendering)

{

Debug.LogWarning("Nested camera rendering is forbidden. If you are calling camera.Render inside OnWillRenderObject callback, use BeginCameraRender callback instead.");

return;

}

pipelineAsset.savedXRGraphicsConfig.renderScale = pipelineAsset.renderScale;

pipelineAsset.savedXRGraphicsConfig.viewportScale = 1.0f; // Placeholder until viewportScale is all hooked up

// Apply any changes to XRGConfig prior to this point

pipelineAsset.savedXRGraphicsConfig.SetConfig();

base.Render(context, cameras);

BeginFrameRendering(cameras);

//Call the delegate used during SRP rendering before a render begins.

GraphicsSettings.lightsUseLinearIntensity = true;

//Light intensities are applied in linear space

SetupPerFrameShaderConstants();

//Set the per-frame shader constants. _GlossyEnvironmentColor is set from RenderSettings.ambientProbe (Custom or skybox ambient lighting data.) and RenderSettings.reflectionIntensity (How much the skybox / custom cubemap reflection affects the scene.). _SubtractiveShadowColor is set from RenderSettings.subtractiveShadowColor (The color used for the sun shadows in the Subtractive lightmode.).

// Sort cameras array by camera depth

Array.Sort(cameras, m_CameraComparer);

//Sort the cameras by their depth

foreach (Camera camera in cameras)

{

BeginCameraRendering(camera);

//Call the delegate used during SRP rendering before a single camera starts rendering.

string renderCameraTag = "Render " + camera.name;

CommandBuffer cmd = CommandBufferPool.Get(renderCameraTag);

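//A using block defines a scope and releases the resource when the scope ends. Precondition: the object must implement the IDisposable interface. It is equivalent to try{} finally{}.

//This indirectly calls BeginSample and EndSample so the profiler can measure the time spent under renderCameraTag.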

using (new ProfilingSample(cmd, renderCameraTag))

{

CameraData cameraData;

InitializeCameraData(camera, out cameraData);

//Sets the current camera, MSAA, isSceneViewCamera, isOffscreenRender, isHdrEnabled, postProcessLayer, postProcessEnabled, isDefaultViewport, renderScale, requiresDepthTexture (a game camera without post effects does not need this unless explicitly configured), requiresSoftParticles, requiresOpaqueTexture (a game camera does not need this unless explicitly configured), opaqueTextureDownsampling, maxShadowDistance

SetupPerCameraShaderConstants(cameraData);

//Set the viewport after RenderScale is applied: _ScaledScreenParams

ScriptableCullingParameters cullingParameters;

if (!CullResults.GetCullingParameters(camera, cameraData.isStereoEnabled, out cullingParameters))

{

CommandBufferPool.Release(cmd);

continue;

}

cullingParameters.shadowDistance = Mathf.Min(cameraData.maxShadowDistance, camera.farClipPlane);

context.ExecuteCommandBuffer(cmd);

cmd.Clear();

#if UNITY_EDITOR

try

#endif

{

m_IsCameraRendering = true;

#if UNITY_EDITOR

// Emit scene view UI

if (cameraData.isSceneViewCamera)

ScriptableRenderContext.EmitWorldGeometryForSceneView(camera);

#endif

CullResults.Cull(ref cullingParameters, context, ref m_CullResults);

//Culling is just this one call; how it actually culls is in the Unity source. Culling results (visible objects, lights, reflection probes).

List<VisibleLight> visibleLights = m_CullResults.visibleLights;

RenderingData renderingData;

InitializeRenderingData(ref cameraData, visibleLights,

m_Renderer.maxSupportedLocalLightsPerPass, m_Renderer.maxSupportedVertexLights,

out renderingData);

//Prepare the data used for rendering:

//1. Based on light types and shadows, set hasDirectionalShadowCastingLight, hasLocalShadowCastingLight and m_LocalLightIndices.

//2. Gather light data from the lights that survived culling. The first directional light is the main light. Then determine pixelAdditionalLightsCount, totalAdditionalLightsCount, visibleLights and visibleLocalLightIndices.

//3. Set the shadow data. Compared with before, shadows now have a "local" concept; shadows from multiple lights, perhaps? renderDirectionalShadows, directionalLightCascadeCount, directionalShadowAtlasWidth, directionalShadowAtlasHeight, directionalLightCascades, renderLocalShadows, localShadowAtlasWidth, supportsSoftShadows, bufferBitCount, renderedDirectionalShadowQuality, renderedLocalShadowQuality

//4. supportsDynamicBatching

var setup = cameraData.camera.GetComponent<IRendererSetup>();

//This is powerful: it means each camera can use a different render pipeline setup.

if (setup == null)

setup = defaultRendererSetup;

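//This is the core of LWRP 3.0.0. The renderer created earlier now only sets up materials; the passes and RTs are set up here, and so is the pass execution order. There is a default version, and developers can also define their own to suit their needs, including the passes, the RTs and the pass execution order.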

setup.Setup(m_Renderer, ref context, ref m_CullResults, ref renderingData);

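//Set up the passes, the RTs and the pass execution order

//1. Passes. In Unity's built-in forward pipeline, drawing goes object by object: each object's shadowcaster pass (for building the shadow map), meta pass (for lightmaps), and forward-base and forward-add passes (which produce the object's final color and are drawn layer by layer onto the framebuffer; once everything is drawn, the framebuffer holds the final image). In Unity's built-in deferred pipeline, the deferred pass is first drawn per object (to fill the G-Buffer), and then the lighting shader and the G-Buffer are used to compute an image containing the visible parts of all opaque objects on screen. In the built-in pipelines these passes can be customized through shaders, but their order cannot be changed. SRP needs many passes as well, but here the execution order of those passes can also be set, and that is the core appeal of SRP.

//I. DepthOnlyPass, LightMode DepthOnly. Every object has it; it draws the depth (ZWrite On, ColorMask 0, default ZTest).

//II. DirectionalShadowsPass, LightMode ShadowCaster. Every object has it; it draws the shadow map _DirectionalShadowmapTexture. The accompanying parameters, used when sampling _DirectionalShadowmapTexture, are the per-cascade world-to-shadow matrices, the centres of the cascade shadow regions, the shadow offsets, and the shadow map size and format.

//III. LocalShadowsPass shares LightMode ShadowCaster with DirectionalShadowsPass but computes the real-time shadow map _LocalShadowmapTexture. The accompanying parameters, used when sampling _LocalShadowmapTexture, are one world-to-shadow matrix per visible light, the shadow strength, the shadow offsets and the shadow map size. There are no cascades here, so no cascade region centres are needed.

//IV. SetupForwardRenderingPass. This pass is really a single statement that sets up the camera. The shadow passes draw from the light's point of view while the passes below draw from the camera's, so the camera's data is needed to set the matrices and so on. Similar to context.SetupCameraProperties(m_CurrCamera, stereoEnabled); in 1.11.

//V. ScreenSpaceShadowResolvePass uses the screenSpaceShadowShader material. It appears to take the _DirectionalShadowmapTexture produced by DirectionalShadowsPass and the _CameraDepthTexture produced by DepthOnlyPass and compute the screen-space shadow map from them.

//VI. CreateLightweightRenderTexturesPass, the pass that creates the RTs. Corresponds to SetupIntermediateResources(frameRenderingConfiguration, shadows, ref context); in 1.11.

//VII. BeginXRRenderingPass, XR related. Similar to StartStereoRendering(ref context, frameRenderingConfiguration); in 1.11.

//VIII. SetupLightweightConstanstPass sets the main light's cookie, the position, color and other data of all lights, the world-to-light matrix, light attenuation, spot direction and each object's _LightIndexBuffer. Similar to SetupShaderLightConstants(cmd, visibleLights, ref lightData); in the 1.11 ForwardPass.

//IX. RenderOpaqueForwardPass draws the opaque objects. Corresponds to RenderOpaques(ref context, rendererSettings); in the 1.11 ForwardPass.

//X. OpaquePostProcessPass runs post-processing for opaque objects. Corresponds to AfterOpaque(ref context, frameRenderingConfiguration); in the 1.11 ForwardPass.

//XI. DrawSkyboxPass draws the skybox. Corresponds to RenderOpaques(ref context, rendererSettings); in the 1.11 ForwardPass.

//XII. CopyDepthPass stores a copy of the depth. Corresponds to AfterOpaque(ref context, frameRenderingConfiguration); in the 1.11 ForwardPass.

//XIII. CopyColorPass stores a copy of the color, optionally downsampled.

//XIV. RenderTransparentForwardPass draws the transparent objects. Similar to RenderTransparents(ref context, rendererSettings); in the 1.11 ForwardPass.

//XV. TransparentPostProcessPass runs post-processing for all objects. Similar to AfterTransparent(ref context, frameRenderingConfiguration); in the 1.11 ForwardPass.

//XVI. FinalBlitPass finally blits/copies the data to the framebuffer. Corresponds to EndForwardRendering(ref context, frameRenderingConfiguration); in the 1.11 ForwardPass.

//XVII. EndXRRenderingPass, XR related. Corresponds to EndForwardRendering(ref context, frameRenderingConfiguration); in the 1.11 ForwardPass.

//XVIII. SceneViewDepthCopyPass: under UNITY_EDITOR, writes DepthTexture into BuiltinRenderTextureType.CameraTarget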

//2. RTs. Several RTs are configured (not yet created), for Color, DepthAttachment, DepthTexture, OpaqueColor, DirectionalShadowmap, LocalShadowmap and ScreenSpaceShadowmap

//3. Clear the pass list m_ActiveRenderPassQueue

//4. Set up the light indices

//5. Set the pass order

//I. If renderDirectionalShadows is set, assign the just-configured DirectionalShadowmap RT to DirectionalShadowsPass as dest, then add DirectionalShadowsPass to m_ActiveRenderPassQueue

//II. If renderLocalShadows is set, assign the just-configured LocalShadowmap RT to LocalShadowsPass as dest, then add LocalShadowsPass to m_ActiveRenderPassQueue

//III. Add SetupForwardRenderingPass to m_ActiveRenderPassQueue

//IV. If a DepthPrepass is needed, assign the just-configured DepthTexture RT to DepthOnlyPass, with colorFormat RenderTextureFormat.Depth, depthBufferBits 32 and MSAA off (sample = 1), then add DepthOnlyPass to m_ActiveRenderPassQueue

//V. If requiresScreenSpaceShadowResolve is set, assign the just-configured ScreenSpaceShadowmap RT to ScreenSpaceShadowResolvePass, with colorFormat RenderTextureFormat.R8/ARGB32 and depthBufferBits 0, then add ScreenSpaceShadowResolvePass to m_ActiveRenderPassQueue

//VI. If requiresDepthAttachment/requiresColorAttachment is set, pass the just-configured DepthAttachment/Color RTs and the MSAA setting to CreateLightweightRenderTexturesPass, then add CreateLightweightRenderTexturesPass to m_ActiveRenderPassQueue

//VII. If isStereoEnabled is set, add BeginXRRenderingPass to m_ActiveRenderPassQueue

//VIII. Add SetupLightweightConstanstPass to m_ActiveRenderPassQueue

//IX. First set the renderer configuration: RendererConfiguration.PerObjectReflectionProbes, PerObjectLightmaps, PerObjectLightProbe, PerObjectLightIndices8/ProvideLightIndices. Then pass colorHandle (RenderTargetHandle.CameraTarget/Color), depthHandle (RenderTargetHandle.CameraTarget/Color), the camera's clear flags, the camera's background color, the renderer configuration just set and dynamicBatching to RenderOpaqueForwardPass, then add RenderOpaqueForwardPass to m_ActiveRenderPassQueue

//X. If post effects for opaque objects are configured, give colorHandle (RenderTargetHandle.CameraTarget/Color) to OpaquePostProcessPass as both source and dest, then add OpaquePostProcessPass to m_ActiveRenderPassQueue

//XI. If the camera's clearFlag is skybox, add DrawSkyboxPass to m_ActiveRenderPassQueue

//XII. If depthHandle is not RenderTargetHandle.CameraTarget, give CopyDepthPass depthHandle as src and DepthTexture as dest, then add CopyDepthPass to m_ActiveRenderPassQueue (Patrick: I do not follow this one. Copy whenever the depth RT is not the framebuffer? What is the point of copying an extra depth texture?)

//XIII. If requiresOpaqueTexture is set, give CopyColorPass colorHandle as src and OpaqueColor as dest, then add CopyColorPass to m_ActiveRenderPassQueue

//XIV. Pass colorHandle (RenderTargetHandle.CameraTarget/Color), depthHandle (RenderTargetHandle.CameraTarget/Color), the camera's background color, the renderer configuration set in IX and dynamicBatching to RenderTransparentForwardPass, then add RenderTransparentForwardPass to m_ActiveRenderPassQueue

//XV. If post-processing is enabled, give TransparentPostProcessPass colorHandle (RenderTargetHandle.CameraTarget/Color) as source and BuiltinRenderTextureType.CameraTarget as dest, then add TransparentPostProcessPass to m_ActiveRenderPassQueue

//XVI. If post-processing is off, isOffscreenRender is off (i.e. the game camera's dest is not an RT) and colorHandle is not RenderTargetHandle.CameraTarget, give FinalBlitPass colorHandle as source and BuiltinRenderTextureType.CameraTarget as dest, then add FinalBlitPass to m_ActiveRenderPassQueue

//XVII. If isStereoEnabled is set, add EndXRRenderingPass to m_ActiveRenderPassQueue

//XVIII. Under UNITY_EDITOR with a scene view camera, give SceneViewDepthCopyPass DepthTexture as src and BuiltinRenderTextureType.CameraTarget as dest for the depth copy, then add SceneViewDepthCopyPass to m_ActiveRenderPassQueue (Patrick: I do not follow this one either. Why copy the depth across at the very end? It feels like a small Unity trick.)

m_Renderer.Execute(ref context, ref m_CullResults, ref renderingData);

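// Execute the passes in m_ActiveRenderPassQueue order; once all have executed, Dispose them in the same order

I. DirectionalShadowsPass. Same as bool shadows = ShadowPass(visibleLights, ref context, ref lightData); in 1.11, except that here the main light is assumed to be a directional light and spot lights are not considered.

// Execute: draws the shadows. Only the steps below are visible at this level; the core of it lives in the Unity source

1. Reset to empty: m_DirectionalShadowmapTexture (the RT), m_DirectionalShadowMatrices (the matrix of each cascade), m_CascadeSplitDistances (the split distance of each cascade) and m_CascadeSlices (per-cascade slice info: matrix, offset, resolution).

2. Confirm that the main light is a directional light.

3. Open a profiler scope with using to measure the cost of this pass.

4. Derive the actual shadowmap size from the cascade count.

5. Create RT DirectionalShadowmap according to the configuration, as the output of DirectionalShadowsPass. This is _DirectionalShadowmapTexture.

6. For each cascade, compute the V and P matrices via cullResults.ComputeDirectionalShadowMatricesAndCullingPrimitives and derive worldToShadow from them. worldToShadow is used either to sample the shadow directly when the screen-space shadowmap is off, or while computing the screen-space shadowmap. Also fill in each cascade's shadowSliceData (for example its offset in the texture and its matrix).

7. For each cascade, set _ShadowBias and _LightDirection, set Viewport and Scissor, set the view and projection matrices from that cascade's V and P (cmd.SetViewProjectionMatrices(view, proj);), then draw the shadows via context.DrawShadows(ref settings);

8. Once every cascade has been drawn, the result is a complete m_DirectionalShadowmapTexture, i.e. _DirectionalShadowmapTexture (the main light's light-space shadow map).

9. Set soft/hard shadows and record the shadowmap parameters for the later shadow computation.

// Dispose: releases m_DirectionalShadowmapTexture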

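II. LocalShadowsPass. Broadly similar to DirectionalShadowsPass. The difference is that DirectionalShadowsPass builds the shadow map per cascade for the main (directional) light only, while LocalShadowsPass builds shadow maps for all other visible lights. Those lights must be spot lights (point lights are still on the to-do list), and currently at most 4 visible lights are supported per pass.

// Execute: draws the shadows. Only the steps below are visible at this level; the core of it lives in the Unity source

1. Reset to empty: m_LocalShadowmapTexture (the RT), m_LocalShadowMatrices (the matrix of each visible light), m_LocalLightSlices (per-light slice info: matrix, offset, resolution) and m_LocalShadowStrength (the shadow strength of each visible light).

2. Count the shadow-casting spot lights among the visible lights (shadowCastingLightsCount); shadows are generated for these lights.

3. Open a profiler scope with using to measure the cost of this pass.

4. Derive the actual shadowmap size from shadowCastingLightsCount.

5. Create RT LocalShadowmap according to the configuration, as the output of LocalShadowsPass. This is _LocalShadowmapTexture.

6. For each light, compute the V and P matrices via cullResults.ComputeSpotShadowMatricesAndCullingPrimitives and derive shadowMatrix from them. This is where the limits are enforced: no more than 4 visible lights, spot lights only. Also fill in each light's shadowSliceData (for example its offset in the texture, its matrix and its shadow strength).

7. For each light, set _ShadowBias and _LightDirection, set Viewport and Scissor, set the view and projection matrices from that light's V and P (cmd.SetViewProjectionMatrices(view, proj);), then draw the shadows via context.DrawShadows(ref settings);

8. Once the shadowmaps of all visible lights have been drawn, the result is a complete m_LocalShadowmapTexture, i.e. _LocalShadowmapTexture (the light-space shadow map of each visible light).

9. Set soft/hard shadows and record the shadowmap parameters for the later shadow computation.

// Dispose: releases m_LocalShadowmapTexture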

III.SetupForwardRenderingPass

IV. DepthOnlyPass.

// Execute: draws depth into RT DepthTexture

1. Create RT DepthTexture according to the configuration and make it the current RT via cmd.SetRenderTarget(colorBuffer, depthBuffer, miplevel, cubemapFace, depthSlice); and cmd.ClearRenderTarget((clearFlag & ClearFlag.Depth) != 0, (clearFlag & ClearFlag.Color) != 0, clearColor);. See the Unity source for the implementation.

2. Set the current camera and select the DepthOnly shader pass.

3. Set the sort order to SortFlags.CommonOpaque, use the opaqueFilterSettings filter (i.e. draw opaque objects only), and read the renderingData.supportsDynamicBatching setting.

4. Draw with context.DrawRenderers(cullResults.visibleRenderers, ref drawSettings, renderer.opaqueFilterSettings);. See the Unity source for the implementation.

// Dispose: releases DepthTexture and resets depthAttachmentHandle to RenderTargetHandle.CameraTarget

V. ScreenSpaceShadowResolvePass.

// Execute: creates the screen-space shadowmap. Note that the SSM only involves the main light's shadows from DirectionalShadowsPass; shadows of other lights are applied in the passes that draw the objects.

1. Create RT ScreenSpaceShadowmap according to the configuration.

2. Set the shader properties _SHADOWS_SOFT and _SHADOWS_CASCADE.

3. Make ScreenSpaceShadowmap the current RT.

4. Draw screenSpaceOcclusionTexture with cmd.Blit(screenSpaceOcclusionTexture, screenSpaceOcclusionTexture, m_ScreenSpaceShadowsMaterial);, where the source is not actually used. The shader is the one on m_ScreenSpaceShadowsMaterial (ScreenSpaceShadows), which reads _CameraDepthTexture produced by DepthOnlyPass and _DirectionalShadowmapTexture produced by DirectionalShadowsPass.

// Dispose: releases ScreenSpaceShadowmap and resets depthAttachmentHandle to RenderTargetHandle.CameraTarget

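VI. CreateLightweightRenderTexturesPass.

// Execute: creates the RTs Color and DepthAttachment according to the configuration

// Dispose: releases Color and DepthAttachment, and resets colorAttachmentHandle and depthAttachmentHandle to RenderTargetHandle.CameraTarget

VII. BeginXRRenderingPass: context.StartMultiEye(camera);

VIII. SetupLightweightConstanstPass.

// Execute: sets the lighting parameters and shader variants

1. Set the main light cookie; the position, color and world-to-light matrix of every light; the number of additional lights; _LightIndexBuffer; light attenuation; spot light direction; spot light atten; and so on.

2. Based on these lighting parameters, select the shader variants (AdditionalLights, MixedLightingSubtractive, VertexLights, DirectionalShadows, LocalShadows, SoftShadows, CascadeShadows, SOFTPARTICLES_ON).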

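IX. RenderOpaqueForwardPass.

// Execute: this is where opaque objects are actually drawn

1. Open a profiler scope with using to measure the cost of this pass.

2. Set the current RT to the framebuffer or an FBO, depending on the configuration.

3. Draw opaque objects with the shader passes LightweightForward and SRPDefaultUnlit in SortFlags.CommonOpaque order, with instancing and dynamic batching enabled. The draw command is: context.DrawRenderers(cullResults.visibleRenderers, ref drawSettings, renderer.opaqueFilterSettings);

4. Draw opaque objects that use legacy shader passes (Always, ForwardBase, PrepassBase, Vertex, VertexLMRGBM, VertexLM) with the m_ErrorMaterial (Hidden/InternalErrorShader) material.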

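X. OpaquePostProcessPass.

// Execute: if opaque-only post-processing is configured, calls the PPSV2 API cameraData.postProcessLayer.RenderOpaqueOnly(context); by hand to run it. Depending on the configuration, source and dest are both Color or both RenderTargetHandle.CameraTarget

XI. DrawSkyboxPass.

// Execute: draws the skybox. This is one difference between 1.11 and 3.0: in 3.0 the skybox is not affected by opaque-only post-processing

XII. CopyDepthPass.

// Execute: if depthHandle is not RenderTargetHandle.CameraTarget, blits depthHandle to DepthTexture with the DepthCopy material; the blit handles MSAA in the shader

// Dispose: releases DepthTexture

XIII. CopyColorPass.

// Execute: if requiresOpaqueTexture is set, blits colorHandle to OpaqueColor with the Sampling material. When Downsampling is enabled, _2xBilinear/_4xBilinear blit straight into a smaller RT, while _4xBox does its own sampling in the shader

// Dispose: releases OpaqueColor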

XIV. RenderTransparentForwardPass.

// Execute: this is where transparent objects are actually drawn

1. Open a profiler scope with using to measure the cost of this pass.

2. Set the current RT to the framebuffer or an FBO, depending on the configuration.

3. Draw transparent objects with the shader passes LightweightForward and SRPDefaultUnlit in SortFlags.CommonTransparent order, with instancing and dynamic batching enabled. The draw command is: context.DrawRenderers(cullResults.visibleRenderers, ref drawSettings, renderer.transparentFilterSettings);

4. Draw transparent objects that use legacy shader passes (Always, ForwardBase, PrepassBase, Vertex, VertexLMRGBM, VertexLM) with the m_ErrorMaterial (Hidden/InternalErrorShader) material.

XV. TransparentPostProcessPass.

// Execute: if post-processing is enabled, calls the PPSV2 API cameraData.postProcessLayer.Render(context); by hand to run it. The source is Color or RenderTargetHandle.CameraTarget depending on the configuration; the dest is BuiltinRenderTextureType.CameraTarget

XVI. FinalBlitPass.

// Execute: takes the RT as src and draws its contents to the framebuffer. If renderingData.cameraData.isDefaultViewport is true, this is a blit with the Blit material. Otherwise the matrices and viewport are set first and the draw goes through commandBuffer.DrawMesh(fullscreenMesh, Matrix4x4.identity, material, 0, shaderPassId, properties);

XVII. EndXRRenderingPass: context.StopMultiEye(camera); context.StereoEndRender(camera);

XVIII. SceneViewDepthCopyPass. In the UNITY_EDITOR scene view, blits DepthTexture as src to BuiltinRenderTextureType.CameraTarget with the DepthCopy material.

}

#if UNITY_EDITOR

catch (Exception)

{

CommandBufferPool.Release(cmd);

throw;

}

finally

#endif

{

m_IsCameraRendering = false;

}

}

context.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

context.Submit();

}

}

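Overall, 3.0.0 improves on 1.11 in four ways: 1. It introduces local shadows, and each visible light can set its own shadow strength, although the additional lights must be spot lights (point lights will come later). 2. Opaque-only post-processing no longer includes the skybox. 3. The overall design is clearer, especially around RTs. 4. Passes are set up per camera, so each camera can configure its own render pipeline.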
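The setup/execute/dispose flow walked through above can be modelled without Unity. The following is a minimal sketch in plain C#, not the LWRP implementation: `SketchPass`, `NamedPass` and `SketchRenderer` are hypothetical stand-ins, only a few of the eighteen passes are included, and each pass just records its name in a log instead of issuing GPU commands.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for an LWRP render pass; this is not the Unity type.
public abstract class SketchPass
{
    public abstract string Name { get; }

    // A real pass would record commands here; the sketch only logs.
    public virtual void Execute(List<string> log) { log.Add("Execute " + Name); }

    // A real pass would release its temporary RTs here.
    public virtual void Dispose(List<string> log) { log.Add("Dispose " + Name); }
}

public sealed class NamedPass : SketchPass
{
    private readonly string m_Name;
    public NamedPass(string name) { m_Name = name; }
    public override string Name { get { return m_Name; } }
}

public sealed class SketchRenderer
{
    private readonly List<SketchPass> m_ActiveRenderPassQueue = new List<SketchPass>();

    // Setup: clear the queue, then enqueue passes conditionally in a fixed order.
    public void Setup(bool directionalShadows, bool depthPrepass, bool skybox, bool postProcess)
    {
        m_ActiveRenderPassQueue.Clear();
        if (directionalShadows) m_ActiveRenderPassQueue.Add(new NamedPass("DirectionalShadowsPass"));
        if (depthPrepass) m_ActiveRenderPassQueue.Add(new NamedPass("DepthOnlyPass"));
        m_ActiveRenderPassQueue.Add(new NamedPass("RenderOpaqueForwardPass"));
        if (skybox) m_ActiveRenderPassQueue.Add(new NamedPass("DrawSkyboxPass"));
        m_ActiveRenderPassQueue.Add(new NamedPass("RenderTransparentForwardPass"));
        // Post-processing writes to the camera target itself, so the final blit is only needed without it.
        m_ActiveRenderPassQueue.Add(new NamedPass(postProcess ? "TransparentPostProcessPass" : "FinalBlitPass"));
    }

    // Execute every queued pass in order, then dispose them in the same order.
    public List<string> Execute()
    {
        var log = new List<string>();
        foreach (var pass in m_ActiveRenderPassQueue) pass.Execute(log);
        foreach (var pass in m_ActiveRenderPassQueue) pass.Dispose(log);
        return log;
    }
}
```

Calling `Setup(false, false, true, false)` followed by `Execute()` yields four Execute entries followed by four Dispose entries. The point of the design is that the order is decided once in setup, and execution is a plain walk over the queue.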

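Having read through LWRP, I have a few takeaways about SRP. Rendering in the games industry has three levels to master. Level one: GPU APIs and drivers. The game ultimately runs on hardware, so we need to understand the hardware, the driver architecture of different hardware, the interfaces it exposes (OpenGL(ES)/DX/Vulkan/Metal), why those interfaces exist, which ones are still missing, and how to use them to complete a full DC. Level two: concrete graphics algorithms such as BRDF, Lambert, Phong, lightmass, screen-space shadowmaps, SSS, bloom, motion blur and so on. There are far too many of them, with new ones every year from SIGGRAPH to GDC, and the more you know, the easier it is for quantity to turn into quality. Level three: the render pipeline. This is not the level-one pipeline, which exists only to complete a single DC and get one triangle drawn. Here the pipeline decides when culling runs, in what order multiple objects are drawn, when post-processing happens, and so on. This third level is SRP. Previously Unity only had the forward and deferred pipelines; now we can write our own SRP, for example tile/cluster based, and control the pipeline as far as our ability allows.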

Scriptable Render Pipeline Overview

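To sum up, here is a translation of the Unity blog post titled Scriptable Render Pipeline Overview. Only after finishing it did I notice that an official translation already exists. Awkward.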

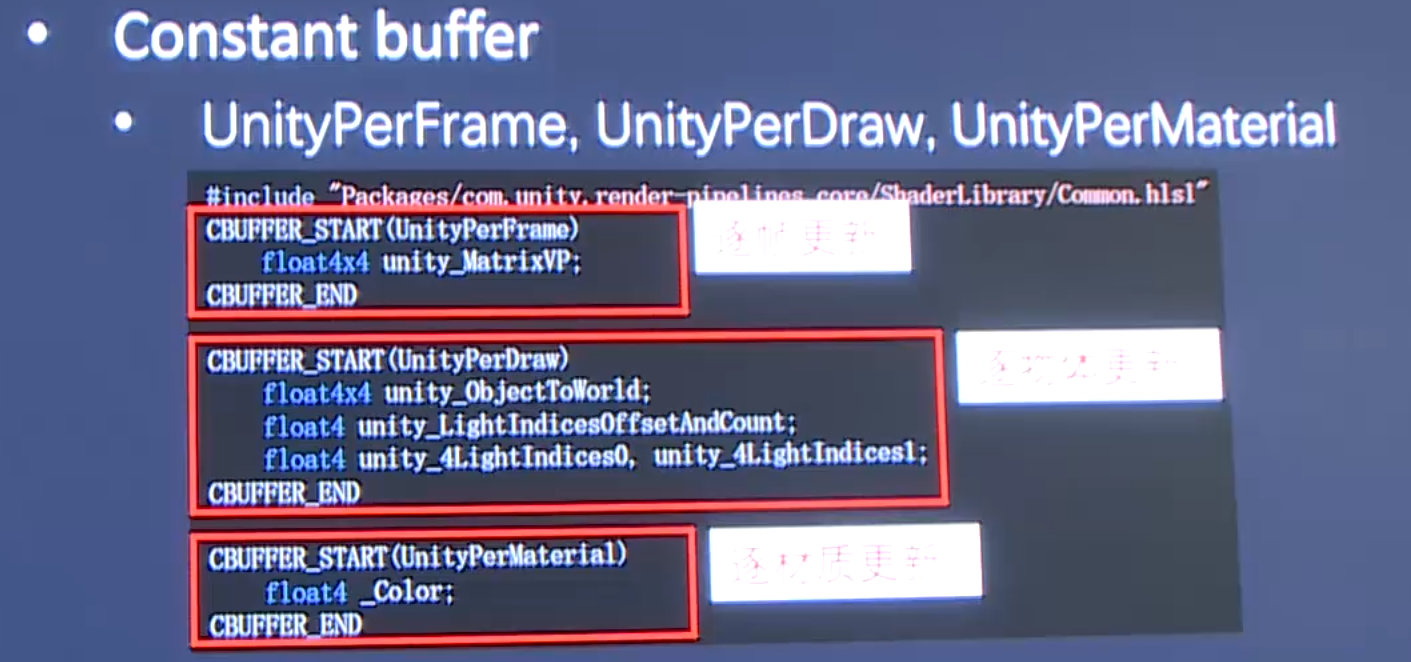

"渲染管线"是一系列用于将物件绘制到屏幕上的技术点的涵盖性术语,总的来说包含了比如如下这些关键点:culling、rendering objects、后处理。这些关键点也可以根据开发者的兴趣去用多种方式实现。比如rendering object可以通过multi-pass rendering(一个pass一盏灯)、single-pass(一个pass一个物件)、deferred(先将所有物件的表面属性绘制到G-Buffer,然后使用屏幕空间光照)。当写SRP的时候,需要决定使用哪种方式去实现。毕竟每种方式都有优缺点。下面开始手把手做一个属于自己的SRP

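First create a class that derives from RenderPipeline and override its entry point, the Render function. Render takes two parameters: the render context and the list of cameras to render.

SRP uses deferred execution: commands are prepared first and executed later. Commands are attached to the render context and then executed all at once through Submit. For example, use a command buffer to clear an RT and execute it through the render context. The code looks like this: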

base.Render(renderContext, cameras);

var cmd = new CommandBuffer();

cmd.ClearRenderTarget(true, true, m_ClearColor);

renderContext.ExecuteCommandBuffer(cmd);

cmd.Release();

renderContext.Submit();

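In addition, you need a class that derives from RenderPipelineAsset and overrides InternalCreatePipeline, which ties it to the RenderPipeline subclass created above. RenderPipelineAsset is essentially a configuration file: you can create several of them in Unity, and at run time it is still the actual RenderPipeline that does the work. Assign the created asset as the pipeline asset under Scriptable Render Pipeline Settings in the Graphics Settings window, or set GraphicsSettings.renderPipelineAsset from script. You can also store several quality settings in different instances of the same pipeline type. The pipeline asset controls global rendering quality and is responsible for creating the pipeline instance. The pipeline instance holds the intermediate resources and the render loop implementation.

That completes the simplest possible SRP. Nothing exciting yet.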
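Putting the asset and the pipeline instance together might look like the sketch below. It assumes the experimental SRP API of Unity 2018.x (`UnityEngine.Experimental.Rendering`), the same API generation as the fragments above; later Unity versions renamed or moved these types. The class names `BasicPipelineAsset` and `BasicPipelineInstance` and the menu path are made up for illustration, and the code only compiles inside a Unity project.

```csharp
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Experimental.Rendering;

// Hypothetical asset: acts as the configuration file and creates the pipeline instance.
[CreateAssetMenu(menuName = "Rendering/Sketch Basic Pipeline Asset")]
public class BasicPipelineAsset : RenderPipelineAsset
{
    public Color clearColor = Color.green;

    protected override IRenderPipeline InternalCreatePipeline()
    {
        return new BasicPipelineInstance(clearColor);
    }
}

// Hypothetical pipeline instance: holds the render loop.
public class BasicPipelineInstance : RenderPipeline
{
    private readonly Color m_ClearColor;

    public BasicPipelineInstance(Color clearColor)
    {
        m_ClearColor = clearColor;
    }

    public override void Render(ScriptableRenderContext renderContext, Camera[] cameras)
    {
        base.Render(renderContext, cameras);

        // Record a clear, hand it to the context, then submit everything at once.
        var cmd = new CommandBuffer();
        cmd.ClearRenderTarget(true, true, m_ClearColor);
        renderContext.ExecuteCommandBuffer(cmd);
        cmd.Release();

        renderContext.Submit();
    }
}
```

With an asset created from this class and assigned in Graphics Settings, every view should simply be cleared to the configured color.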

Culling

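Culling determines which objects will be drawn to the screen. Unity has two kinds of culling: 1. Frustum culling: find the objects inside the camera's view volume (fov, near and far planes). 2. Occlusion culling: find the objects hidden behind other objects and exclude them from rendering.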
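To make the frustum test concrete, here is a small plain C# sketch that tests bounding spheres against a set of inward-facing planes. It is an illustration only, not Unity's culling code: `Vec3`, `CullPlane`, `BoundingSphere3` and `FrustumCullSketch` are hypothetical types, and occlusion culling is not modelled.

```csharp
using System.Collections.Generic;

// Minimal 3D vector; a stand-in for UnityEngine.Vector3 so the sketch runs without Unity.
public struct Vec3
{
    public float X, Y, Z;
    public Vec3(float x, float y, float z) { X = x; Y = y; Z = z; }
    public static float Dot(Vec3 a, Vec3 b) { return a.X * b.X + a.Y * b.Y + a.Z * b.Z; }
}

// Plane in the form dot(Normal, p) + Distance = 0; the normal points into the frustum.
public struct CullPlane
{
    public Vec3 Normal;
    public float Distance;
    public CullPlane(Vec3 normal, float distance) { Normal = normal; Distance = distance; }
    public float SignedDistance(Vec3 p) { return Vec3.Dot(Normal, p) + Distance; }
}

public struct BoundingSphere3
{
    public Vec3 Center;
    public float Radius;
    public BoundingSphere3(Vec3 center, float radius) { Center = center; Radius = radius; }
}

public static class FrustumCullSketch
{
    // A sphere is culled as soon as it lies entirely behind any one plane.
    public static bool IsVisible(CullPlane[] planes, BoundingSphere3 sphere)
    {
        foreach (var plane in planes)
        {
            if (plane.SignedDistance(sphere.Center) < -sphere.Radius)
                return false;
        }
        return true;
    }

    // Returns the indices of the bounds that survive the frustum test.
    public static List<int> Cull(CullPlane[] planes, IList<BoundingSphere3> bounds)
    {
        var visible = new List<int>();
        for (int i = 0; i < bounds.Count; i++)
        {
            if (IsVisible(planes, bounds[i])) visible.Add(i);
        }
        return visible;
    }
}
```

A real frustum has six planes (left, right, top, bottom, near, far); the test is the same with any number of planes. A sphere that straddles a plane is kept, which is conservative but never drops a visible object.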

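The first step of rendering is to use culling to work out which objects and lights need to be drawn, based on the current camera's settings. The resulting objects and lights are used in the later stages of the pipeline.

SRP provides a set of APIs for culling. The code looks like this: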

// Create a structure to hold the culling parameters

ScriptableCullingParameters cullingParams;

// Populate the culling parameters from the camera

if (!CullResults.GetCullingParameters(camera, stereoEnabled, out cullingParams))

continue;

// If you like, you can modify the culling parameters here

cullingParams.isOrthographic = true;

// Create a structure to hold the cull results

CullResults cullResults = new CullResults();

// Perform the culling operation

CullResults.Cull(ref cullingParams, context, ref cullResults);

The culling results are used later when the objects are rendered.

Drawing

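We now know which objects need to be drawn, but there are many ways to draw them, so we first have to settle on an approach based on several factors: 1. The target hardware. 2. The look and feel we want to achieve. 3. The type of game being made. A mobile 2D side-scroller (where foreground and background scroll horizontally, common in 2D shooters) and a high-end PC FPS will clearly not use the same render pipeline. These games have very different constraints, so they need different pipelines. Some concrete technical choices also have to be made: 1. HDR vs LDR. 2. Linear vs Gamma. 3. MSAA vs post-process AA. 4. PBR materials vs simple materials. 5. Lighting vs no lighting. 6. Lighting technique. 7. Shadowing technique. Making these choices when writing an SRP also fixes the corresponding constraints.

Here we start with an SRP with no lighting that draws a few opaque objects.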

Filtering: Render Buckets and Layers

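Unity classifies objects by queue. Each object falls into opaque, transparent or another bucket according to the queue set on its material. When drawing with SRP, you first decide which bucket to draw.

Besides queues, layers can also be used for filtering. The following code shows how a filter determines which objects will be drawn: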

// Get the opaque rendering filter settings

var opaqueRange = new FilterRenderersSettings();

//Set the range to be the opaque queues

opaqueRange.renderQueueRange = new RenderQueueRange()

{

min = 0,

max = (int)UnityEngine.Rendering.RenderQueue.GeometryLast,

};

//Include all layers

opaqueRange.layerMask = ~0;
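What such a filter decides per renderer can be written out in a few lines of plain C#. This is a sketch of the idea only, not how Unity evaluates `FilterRenderersSettings` internally; `FilterSketch` is a hypothetical helper. The constants mirror the values of `UnityEngine.Rendering.RenderQueue` (Geometry 2000, AlphaTest 2450, GeometryLast 2500, Transparent 3000).

```csharp
public static class FilterSketch
{
    // Values of UnityEngine.Rendering.RenderQueue, repeated here so the sketch runs without Unity.
    public const int Geometry = 2000;
    public const int AlphaTest = 2450;
    public const int GeometryLast = 2500;
    public const int Transparent = 3000;

    // A renderer passes when its material queue is inside [minQueue, maxQueue]
    // and the bit for its layer is set in layerMask.
    public static bool Passes(int renderQueue, int layer, int minQueue, int maxQueue, int layerMask)
    {
        bool inQueueRange = renderQueue >= minQueue && renderQueue <= maxQueue;
        bool inLayerMask = (layerMask & (1 << layer)) != 0;
        return inQueueRange && inLayerMask;
    }
}
```

With the opaque range above (0 to GeometryLast) and `layerMask = ~0`, a Geometry or AlphaTest material on any layer passes, while a Transparent one does not. Restricting `layerMask` to a single bit additionally drops every object on another layer.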

Draw Settings: How things should be drawn

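Culling and filtering determine which objects to draw; how they are drawn is decided by a set of settings. DrawRendererSettings is the struct SRP uses to hold them. It covers: 1. Sorting (the order in which objects are drawn, for example front to back or back to front; one option is OptimizeStateChanges, "Sort objects to reduce draw state changes", which sounds impressive). 2. Per-renderer flags (which data each object's shader can get from Unity, such as per-object light probes and per-object lightmaps). 3. Shader pass (which shader pass to draw with). Here is an example: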

// Create the draw render settings

// note that it takes a shader pass name

var drs = new DrawRendererSettings(Camera.current, new ShaderPassName("Opaque"));

// enable instancing for the draw call

drs.flags = DrawRendererFlags.EnableInstancing;

// pass light probe and lightmap data to each renderer

drs.rendererConfiguration = RendererConfiguration.PerObjectLightProbe | RendererConfiguration.PerObjectLightmaps;

// sort the objects like normal opaque objects

drs.sorting.flags = SortFlags.CommonOpaque;
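The effect of the sorting choice can be illustrated with a small plain C# sketch. It is a simplification: as far as I know, the real `SortFlags.CommonOpaque` and `SortFlags.CommonTransparent` combine more criteria (sorting layer, quantized distance, state-change optimization), whereas this sketch keeps only render queue and camera distance. `DrawItem` and `SortSketch` are hypothetical names.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical per-object record: just what the sketch needs to order draws.
public struct DrawItem
{
    public string Name;
    public int RenderQueue;
    public float DistanceToCamera;

    public DrawItem(string name, int renderQueue, float distanceToCamera)
    {
        Name = name;
        RenderQueue = renderQueue;
        DistanceToCamera = distanceToCamera;
    }
}

public static class SortSketch
{
    // Opaque: lower queue first, then front to back so early depth tests reject hidden pixels.
    public static List<string> SortOpaque(IEnumerable<DrawItem> items)
    {
        return items.OrderBy(i => i.RenderQueue)
                    .ThenBy(i => i.DistanceToCamera)
                    .Select(i => i.Name)
                    .ToList();
    }

    // Transparent: lower queue first, then back to front so blending composites correctly.
    public static List<string> SortTransparent(IEnumerable<DrawItem> items)
    {
        return items.OrderBy(i => i.RenderQueue)
                    .ThenByDescending(i => i.DistanceToCamera)
                    .Select(i => i.Name)
                    .ToList();
    }
}
```

The two orders exist for different reasons: front to back saves shading work for opaque geometry, while back to front is a correctness requirement for alpha blending.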

Drawing

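We now have everything a DC needs: the cull results, the filtering rules and the drawing rules.

With that we can issue the DC. As with everything else in SRP, a DC is a call on the context (context.DrawRenderers(cullResults.visibleRenderers, ref drs, opaqueRange); // draw all of the renderers). In SRP you do not draw object by object; you draw a whole batch of objects in one go. If the CPU allows fast jobified execution, script execution overhead goes down. (Patrick: what does "in one go" mean? From what I saw it is still one DC per object. And what is "fast jobified execution"? I do not get it. The official translation reads: issue one call that renders a large number of meshes in one go. This not only reduces script execution overhead, it also lets the execution on the CPU be jobified quickly.)

This draws the objects to the RT, and you can switch RTs with commands.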
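The culling, filtering and draw-settings fragments above can be assembled into one render loop. The sketch below assumes the Unity 2018.x experimental API used throughout this section and only compiles inside a Unity project. `OpaqueSketchPipeline` is a made-up class name, and "BasicPass" is a placeholder pass name: objects are only drawn if their shader has a pass whose LightMode tag matches it.

```csharp
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Experimental.Rendering;

// Hypothetical unlit pipeline: cull, clear, draw opaque, skybox, draw transparent.
public class OpaqueSketchPipeline : RenderPipeline
{
    public override void Render(ScriptableRenderContext context, Camera[] cameras)
    {
        base.Render(context, cameras);

        foreach (var camera in cameras)
        {
            // Culling: derive the parameters from the camera, then cull.
            ScriptableCullingParameters cullingParams;
            if (!CullResults.GetCullingParameters(camera, out cullingParams))
                continue;
            CullResults cullResults = new CullResults();
            CullResults.Cull(ref cullingParams, context, ref cullResults);

            // Bind the camera's render target and set its view/projection shader variables.
            context.SetupCameraProperties(camera);

            // Clear depth only; the skybox drawn below fills the color buffer.
            var cmd = new CommandBuffer();
            cmd.ClearRenderTarget(true, false, Color.black);
            context.ExecuteCommandBuffer(cmd);
            cmd.Release();

            // Opaque objects, sorted like normal opaque geometry.
            var drs = new DrawRendererSettings(camera, new ShaderPassName("BasicPass"));
            drs.sorting.flags = SortFlags.CommonOpaque;
            var filter = new FilterRenderersSettings(true) { renderQueueRange = RenderQueueRange.opaque };
            context.DrawRenderers(cullResults.visibleRenderers, ref drs, filter);

            context.DrawSkybox(camera);

            // Transparent objects, sorted like normal transparent geometry.
            drs.sorting.flags = SortFlags.CommonTransparent;
            filter.renderQueueRange = RenderQueueRange.transparent;
            context.DrawRenderers(cullResults.visibleRenderers, ref drs, filter);

            // Nothing reaches the GPU until the context is submitted.
            context.Submit();
        }
    }
}
```

The order opaque, skybox, transparent follows the same convention the built-in forward path uses; since the order is now plain C#, it can be rearranged freely.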

All in all this post is fairly simple: it walks through writing an SRP step by step, along with the reasoning behind each step.

HDRP

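OK, now for something bigger: HDRP, which includes a deferred pipeline. Games keep demanding more from lighting and phones keep getting faster, which makes deferred rendering feasible (previously deferred rendering was held back by bandwidth). Here is Unity's own description of HDRP: HDRP is a modern render pipeline designed with PBR, linear lighting and HDR lighting in mind. It is built on a configurable hybrid Tile / Cluster deferred / Forward lighting architecture. HDRP adds features on top of Unity's built-in functionality and improves the options for lighting, reflection probes and standard materials. It provides advanced materials such as Anisotropy, Subsurface scattering and Clear Coating, as well as support for advanced lighting such as Area lights. Book of the Dead is said to have been made with HDRP.

HDRP also needs its own shaders: the SubShader Tags must set RenderPipeline to HDRenderPipeline. Once that is set, the SubShader no longer works with Unity's built-in render pipeline; the built-in pipeline can only be supported through another SubShader or a fallback.

Now to the core of HDRP, the part where the render pipeline is written in C#. The HDRP version I am reading is AppData\Local\Unity\cache\packages\packages.unity.com\com.unity.render-pipelines.high-definition@3.0.0-preview (com.unity.render-pipelines.high-definition@3.3.0-preview). This location shows the code the project is currently using, and it can be updated whenever Unity publishes a new version. The two CS files that stand out at first glance are HDRenderPipeline.cs and HDRenderPipelineAsset.cs. HDRenderPipelineAsset.cs is not very interesting: it is just a serialized file that stores the developer's configuration. If you only want to use HDRP, create your own HDRenderPipelineAsset and set a few parameters. The code that actually acts on those parameters is in HDRenderPipeline.cs. Let us start with its constructor, which takes the HDRenderPipelineAsset as its argument: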

public HDRenderPipeline(HDRenderPipelineAsset asset)

{

DebugManager.instance.RefreshEditor();

m_ValidAPI = true;

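// Sets lightsUseLinearIntensity and lightsUseColorTemperature to true

// Supported rendering features, HDRP vs LWRP:

// reflectionProbeSupportFlags: HDRP supported; LWRP not supported

// defaultMixedLightingMode: HDRP IndirectOnly (1); LWRP Subtractive (2)

// supportedMixedLightingModes: HDRP IndirectOnly (1) | Shadowmask (4); LWRP Subtractive (2)

// supportedLightmapBakeTypes: HDRP Mixed (1) | Baked (2) | Realtime (4); LWRP Mixed (1) | Baked (2)

// supportedLightmapsModes: HDRP NonDirectional (0) | CombinedDirectional (1); LWRP NonDirectional (0) | CombinedDirectional (1)

// SupportsLightProbeProxyVolumes: HDRP supported; LWRP not supported

// SupportsMotionVectors: HDRP supported; LWRP not supported

// SupportsReceiveShadows: HDRP not supported (??); LWRP supported

// SupportsReflectionProbes: HDRP supported; LWRP supported

// Lightmapping.SetDelegate(GlobalIlluminationUtils.hdLightsDelegate); (??)

// As with LWRP, the SceneView does not support everything either; display modes such as alpha channel are not supported.

// Gamma is not supported; unsupported devices are filtered out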

if (!SetRenderingFeatures())

{

m_ValidAPI = false;

return;

}

m_Asset = asset;

// Initial state of the RTHandle system.

// Tells the system that we will require MSAA or not so that we can avoid wasteful render texture allocation.

// TODO: Might want to initialize to at least the window resolution to avoid un-necessary re-alloc in the player

RTHandles.Initialize(1, 1, m_Asset.renderPipelineSettings.supportMSAA, m_Asset.renderPipelineSettings.msaaSampleCount);

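// Sets the parameters used to create RTs. By default MSAA is off and sampleCount is 0; deferred rendering does not use MSAA by default because of its performance cost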

m_GPUCopy = new GPUCopy(asset.renderPipelineResources.copyChannelCS);

// Uses a CS to copy individual channels (CS requires at least ES3.1, and the thread count varies across Android devices)

var bufferPyramidProcessor = new BufferPyramidProcessor(

asset.renderPipelineResources.colorPyramidCS,

asset.renderPipelineResources.depthPyramidCS,

m_GPUCopy,

new TexturePadding(asset.renderPipelineResources.texturePaddingCS)

);

// Again sets up a batch of CS files and gets their kernels

m_BufferPyramid = new BufferPyramid(bufferPyramidProcessor);

EncodeBC6H.DefaultInstance = EncodeBC6H.DefaultInstance ?? new EncodeBC6H(asset.renderPipelineResources.encodeBC6HCS);

// Yet another CS file

m_ReflectionProbeCullResults = new ReflectionProbeCullResults(asset.reflectionSystemParameters);

ReflectionSystem.SetParameters(asset.reflectionSystemParameters);

// Sets the ReflectionProbe properties

// Scan material list and assign it

m_MaterialList = HDUtils.GetRenderPipelineMaterialList();

// Gets every subclass of RenderPipelineMaterial in the project; there are only three, Lit, StackLit and Unlit (although HDRP has many more HDRP-specific shaders)

// Find first material that have non 0 Gbuffer count and assign it as deferredMaterial

m_DeferredMaterial = null;

foreach (var material in m_MaterialList)

{

if (material.GetMaterialGBufferCount() > 0)

m_DeferredMaterial = material;

// What gets picked here is Lit.cs, which has 4 GBuffers, not counting the velocity buffer (why would it be counted??)

}

// TODO: Handle the case of no Gbuffer material

// TODO: I comment the assert here because m_DeferredMaterial for whatever reasons contain the correct class but with a "null" in the name instead of the real name and then trigger the assert

// whereas it work. Don't know what is happening, DebugDisplay use the same code and name is correct there.

// Debug.Assert(m_DeferredMaterial != null);

m_GbufferManager = new GBufferManager(m_DeferredMaterial, m_Asset.renderPipelineSettings.supportShadowMask);

// Creates 4 new RenderTargetIdentifier instances

m_DbufferManager = new DBufferManager();

// What is the DBuffer? It looks like some kind of GBuffer too (??)

m_SSSBufferManager.Build(asset);

// Based on the parameters, sets up the materials SSS needs, using one CS and three regular shaders

m_NormalBufferManager.Build(asset);

// Empty

// Initialize various compute shader resources

m_applyDistortionKernel = m_applyDistortionCS.FindKernel("KMain");

// Yet another CS file

// General material

m_CopyStencilForNoLighting = CoreUtils.CreateEngineMaterial(asset.renderPipelineResources.copyStencilBuffer);

m_CopyStencilForNoLighting.SetInt(HDShaderIDs._StencilRef, (int)StencilLightingUsage.NoLighting);

m_CopyStencilForNoLighting.SetInt(HDShaderIDs._StencilMask, (int)StencilBitMask.LightingMask);

m_CameraMotionVectorsMaterial = CoreUtils.CreateEngineMaterial(asset.renderPipelineResources.cameraMotionVectors);

// Yet another material

m_CopyDepth = CoreUtils.CreateEngineMaterial(asset.renderPipelineResources.copyDepthBuffer);

// Yet another material

InitializeDebugMaterials();

// Creates the debug materials and the blit material

m_MaterialList.ForEach(material => material.Build(asset));

// Calls Build on the Lit, StackLit and Unlit classes. This mainly goes through PreIntegratedFGD to create the preIntegratedFGD_GGXDisneyDiffuse material and its RT (Lit, StackLit); Lit also creates a 3-slice Texture2DArray used as a LUT texture

m_IBLFilterGGX = new IBLFilterGGX(asset.renderPipelineResources, bufferPyramidProcessor);

m_LightLoop.Build(asset, m_ShadowSettings, m_IBLFilterGGX);

m_SkyManager.Build(asset, m_IBLFilterGGX);

m_VolumetricLightingSystem.Build(asset);

m_DebugDisplaySettings.RegisterDebug();

#if UNITY_EDITOR

// We don't need the debug of Scene View at runtime (each camera have its own debug settings)

FrameSettings.RegisterDebug("Scene View", m_Asset.GetFrameSettings());

#endif

m_DebugScreenSpaceTracingData = new ComputeBuffer(1, System.Runtime.InteropServices.Marshal.SizeOf(typeof(ScreenSpaceTracingDebug)));

InitializeRenderTextures();

// For debugging

MousePositionDebug.instance.Build();

InitializeRenderStateBlocks();

}

The constructor is mostly preparation. Next we need to look at the Render function (Defines custom rendering for this RenderPipeline.)

public override void Render(ScriptableRenderContext renderContext, Camera[] cameras)

{

if (!m_ValidAPI)

return;

base.Render(renderContext, cameras);

RenderPipeline.BeginFrameRendering(cameras);

// SRP assumes Render may be called more than once per frame (??), so it does some checks to detect the first call of the current frame

{

// SRP.Render() can be called several times per frame.

// Also, most Time variables do not consistently update in the Scene View.

// This makes reliable detection of the start of the new frame VERY hard.

// One of the exceptions is 'Time.realtimeSinceStartup'.

// Therefore, outside of the Play Mode we update the time at 60 fps,

// and in the Play Mode we rely on 'Time.frameCount'.

float t = Time.realtimeSinceStartup;

uint c = (uint)Time.frameCount;

bool newFrame;

if (Application.isPlaying)

{

newFrame = m_FrameCount != c;

m_FrameCount = c;

}

else

{

newFrame = (t - m_Time) > 0.0166f;

if (newFrame) m_FrameCount++;

}

if (newFrame)

{

HDCamera.CleanUnused();

// Runs once a new frame has been detected; cleans up the RTs tied to cameras that were unused in previous frames

// Make sure both are never 0.

m_LastTime = (m_Time > 0) ? m_Time : t;

m_Time = t;

}

}

// TODO: Render only visible probes

var isReflection = cameras.Any(c => c.cameraType == CameraType.Reflection);

if (!isReflection)

ReflectionSystem.RenderAllRealtimeProbes(ReflectionProbeType.PlanarReflection);

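// Invokes the PlanarReflection camera (creating it if missing), starts its render, and attaches an HDAdditionalCameraData component (used to pin properties of that camera, e.g. allowMSAA = false, allowHDR = false, and so on)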

// We first update the state of asset frame settings as they can be use by various camera

// but we keep the dirty state to correctly reset other camera that use RenderingPath.Default.

bool assetFrameSettingsIsDirty = m_Asset.frameSettingsIsDirty;

m_Asset.UpdateDirtyFrameSettings();

// When frameSettingsIsDirty is true, copies m_FrameSettings to m_FrameSettingsRuntime (FrameSettings contains enableShadow, enableSSR and similar flags)

// (?? the cameras are not sorted by camera depth first ??)

foreach (var camera in cameras)

{

if (camera == null)

continue;

RenderPipeline.BeginCameraRendering(camera);

// First, get aggregate of frame settings base on global settings, camera frame settings and debug settings

// Note: the SceneView camera will never have additionalCameraData

var additionalCameraData = camera.GetComponent<HDAdditionalCameraData>();

// Init effective frame settings of each camera

// Each camera have its own debug frame settings control from the debug windows

// debug frame settings can't be aggregate with frame settings (i.e we can't aggregate forward only control for example)

// so debug settings (when use) are the effective frame settings

// To be able to have this behavior we init effective frame settings with serialized frame settings and copy

// debug settings change on top of it. Each time frame settings are change in the editor, we reset all debug settings

// to stay in sync. The loop below allow to update all frame settings correctly and is required because

// camera can rely on default frame settings from the HDRendeRPipelineAsset

FrameSettings srcFrameSettings;

if (additionalCameraData)

{

additionalCameraData.UpdateDirtyFrameSettings(assetFrameSettingsIsDirty, m_Asset.GetFrameSettings());

srcFrameSettings = additionalCameraData.GetFrameSettings();

}

else

{

srcFrameSettings = m_Asset.GetFrameSettings();

}

// Each camera can have its own settings. With RenderingPath.Default it uses the global FrameSettings; otherwise it uses its own FrameSettings

FrameSettings currentFrameSettings = new FrameSettings();

// Get the effective frame settings for this camera taking into account the global setting and camera type

FrameSettings.InitializeFrameSettings(camera, m_Asset.GetRenderPipelineSettings(), srcFrameSettings, ref currentFrameSettings);

// Combines the camera, m_Asset's RenderPipelineSettings and the FrameSettings chosen in the previous step into the final FrameSettings (including lightLoopSettings)

// This is the main command buffer used for the frame.

var cmd = CommandBufferPool.Get("");

// Specific pass to simply display the content of the camera buffer if users have fill it themselves (like video player)

if (additionalCameraData && additionalCameraData.renderingPath == HDAdditionalCameraData.RenderingPath.FullscreenPassthrough)

{

renderContext.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

renderContext.Submit();

continue;

}

// Don't render reflection in Preview, it prevent them to display

if (camera.cameraType != CameraType.Reflection && camera.cameraType != CameraType.Preview

// Planar probes rendering is not currently supported for orthographic camera

// Avoid rendering to prevent error log spamming

&& !camera.orthographic)

// TODO: Render only visible probes

ReflectionSystem.RenderAllRealtimeViewerDependentProbesFor(ReflectionProbeType.PlanarReflection, camera);

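// Invokes the PlanarReflection camera again (creating it if missing), starts its render, and attaches an HDAdditionalCameraData component (used to pin properties of that camera, e.g. allowMSAA = false, allowHDR = false, and so on). The difference from the earlier call is that, although the same PlanarReflection may be involved, this time the camera is passed in, so parameters such as fov may differ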

// Init material if needed

// TODO: this should be move outside of the camera loop but we have no command buffer, ask details to Tim or Julien to do this

if (!m_IBLFilterGGX.IsInitialized())

m_IBLFilterGGX.Initialize(cmd);

// Gets the IBL resources (CS, material, RT), computes GGX with the CS m_ComputeGgxIblSampleData and writes the result to the RT m_GgxIblSampleData

foreach (var material in m_MaterialList)

material.RenderInit(cmd);

// Calls RenderInit on the Lit, StackLit and Unlit classes. Lit and StackLit draw through PreIntegratedFGD, using the material FGD_GGXAndDisneyDiffuse (preIntegratedFGD_GGXDisneyDiffuse.shader) and the API commandBuffer.DrawProcedural(Matrix4x4.identity, material, shaderPassId, MeshTopology.Triangles, 3, 1, properties); to write the result into the RT m_PreIntegratedFGD created by Build above

using (new ProfilingSample(cmd, "HDRenderPipeline::Render", CustomSamplerId.HDRenderPipelineRender.GetSampler()))

{

// Do anything we need to do upon a new frame.

m_LightLoop.NewFrame(currentFrameSettings);

// New frame: sets up the environment, creates the RTs for m_ReflectionProbeCache and m_ReflectionPlanarProbeCache, and so on.

// If we render a reflection view or a preview we should not display any debug information

// This need to be call before ApplyDebugDisplaySettings()

if (camera.cameraType == CameraType.Reflection || camera.cameraType == CameraType.Preview)

{

// Neutral allow to disable all debug settings

m_CurrentDebugDisplaySettings = s_NeutralDebugDisplaySettings;

}

else

{

m_CurrentDebugDisplaySettings = m_DebugDisplaySettings;

}

using (new ProfilingSample(cmd, "Volume Update", CustomSamplerId.VolumeUpdate.GetSampler()))

{

LayerMask layerMask = -1;

if (additionalCameraData != null)

{

layerMask = additionalCameraData.volumeLayerMask;

}

else

{

// Temporary hack:

// For scene view, by default, we use the "main" camera volume layer mask if it exists

// Otherwise we just remove the lighting override layers in the current sky to avoid conflicts

// This is arbitrary and should be editable in the scene view somehow.

if (camera.cameraType == CameraType.SceneView)

{

var mainCamera = Camera.main;

bool needFallback = true;

if (mainCamera != null)

{

var mainCamAdditionalData = mainCamera.GetComponent<HDAdditionalCameraData>();

if (mainCamAdditionalData != null)

{

layerMask = mainCamAdditionalData.volumeLayerMask;

needFallback = false;

}

}

if (needFallback)

{

// If the override layer is "Everything", we fall-back to "Everything" for the current layer mask to avoid issues by having no current layer

// In practice we should never have "Everything" as an override mask as it does not make sense (a warning is issued in the UI)

if (m_Asset.renderPipelineSettings.lightLoopSettings.skyLightingOverrideLayerMask == -1)

layerMask = -1;

else

layerMask = (-1 & ~m_Asset.renderPipelineSettings.lightLoopSettings.skyLightingOverrideLayerMask);

}

}

}

VolumeManager.instance.Update(camera.transform, layerMask);

// Somewhat like PPS: selects volumes by layerMask, then works out which volumes are effective from each volume's settings (global, or trigger distance, etc.). This covers every VolumeComponent subclass: ScreenSpaceLighting, VolumetricLightingController, ContactShadows, HDShadowSettings, AtmosphericScattering, SkySettings, VisualEnvironment.

}

var postProcessLayer = camera.GetComponent<PostProcessLayer>();

// Starts preparing the environment for post-processing

// Disable post process if we enable debug mode or if the post process layer is disabled

if (m_CurrentDebugDisplaySettings.IsDebugDisplayRemovePostprocess() || !HDUtils.IsPostProcessingActive(postProcessLayer))

{

currentFrameSettings.enablePostprocess = false;

}

var hdCamera = HDCamera.Get(camera);

if (hdCamera == null)

{

hdCamera = HDCamera.Create(camera, m_VolumetricLightingSystem);

// HDCamera seems to exist to store the camera's HDRP-specific properties, with volumetric lighting attached

}

// From this point, we should only use frame settings from the camera

hdCamera.Update(currentFrameSettings, postProcessLayer, m_VolumetricLightingSystem);

// Updates the properties

Resize(hdCamera);

ApplyDebugDisplaySettings(hdCamera, cmd);

UpdateShadowSettings(hdCamera);

// Updates m_ShadowSettings from the volume's HDShadowSettings

m_SkyManager.UpdateCurrentSkySettings(hdCamera);

// Assigns the volume's VisualEnvironment to m_LightingOverrideSky.skySettings

ScriptableCullingParameters cullingParams;

if (!CullResults.GetCullingParameters(camera, hdCamera.frameSettings.enableStereo, out cullingParams))

{

renderContext.Submit();

continue;

}

m_LightLoop.UpdateCullingParameters(ref cullingParams);

hdCamera.UpdateStereoDependentState(ref cullingParams);

#if UNITY_EDITOR

// emit scene view UI

if (camera.cameraType == CameraType.SceneView)

{

ScriptableRenderContext.EmitWorldGeometryForSceneView(camera);

}

#endif

if (hdCamera.frameSettings.enableDBuffer)

{

// decal system needs to be updated with current camera, it needs it to set up culling and light list generation parameters

DecalSystem.instance.CurrentCamera = camera;

DecalSystem.instance.BeginCull();

}

ReflectionSystem.PrepareCull(camera, m_ReflectionProbeCullResults);

using (new ProfilingSample(cmd, "CullResults.Cull", CustomSamplerId.CullResultsCull.GetSampler()))

{

CullResults.Cull(ref cullingParams, renderContext, ref m_CullResults);

// Culling is just this one call; how it actually culls is in the Unity source. Culling results (visibleLights, visibleOffscreenVertexLights, visibleReflectionProbes, visibleRenderers)

}

m_ReflectionProbeCullResults.Cull();

m_DbufferManager.EnableDBUffer = false;

using (new ProfilingSample(cmd, "DBufferPrepareDrawData", CustomSamplerId.DBufferPrepareDrawData.GetSampler()))

{

if (hdCamera.frameSettings.enableDBuffer)

{

DecalSystem.instance.EndCull();

m_DbufferManager.EnableDBUffer = true; // mesh decals are renderers managed by c++ runtime and we have no way to query if any are visible, so set to true

DecalSystem.instance.UpdateCachedMaterialData(); // textures, alpha or fade distances could've changed

DecalSystem.instance.CreateDrawData(); // prepare data is separate from draw

DecalSystem.instance.UpdateTextureAtlas(cmd); // as this is only used for transparent pass, would've been nice not to have to do this if no transparent renderers are visible, needs to happen after CreateDrawData

}

}

renderContext.SetupCameraProperties(camera, hdCamera.frameSettings.enableStereo);

PushGlobalParams(hdCamera, cmd, diffusionProfileSettings);

// Sets some parameters for m_SSSBufferManager, m_DbufferManager, m_VolumetricLightingSystem, etc.

// TODO: Find a correct place to bind these material textures

// We have to bind the material specific global parameters in this mode

m_MaterialList.ForEach(material => material.Bind());

// Calls Bind on the Lit, StackLit and Unlit classes. The bind goes through PreIntegratedFGD and sets the material parameter _PreIntegratedFGD_GGXDisneyDiffuse (Lit, StackLit)

// Frustum cull density volumes on the CPU. Can be performed as soon as the camera is set up.

DensityVolumeList densityVolumes = m_VolumetricLightingSystem.PrepareVisibleDensityVolumeList(hdCamera, cmd, m_Time);

// Note: Legacy Unity behave like this for ShadowMask

// When you select ShadowMask in Lighting panel it recompile shaders on the fly with the SHADOW_MASK keyword.

// However there is no C# function that we can query to know what mode have been select in Lighting Panel and it will be wrong anyway. Lighting Panel setup what will be the next bake mode. But until light is bake, it is wrong.

// Currently to know if you need shadow mask you need to go through all visible lights (of CullResult), check the LightBakingOutput struct and look at lightmapBakeType/mixedLightingMode. If one light have shadow mask bake mode, then you need shadow mask features (i.e extra Gbuffer).

// It mean that when we build a standalone player, if we detect a light with bake shadow mask, we generate all shader variant (with and without shadow mask) and at runtime, when a bake shadow mask light is visible, we dynamically allocate an extra GBuffer and switch the shader.

// So the first thing to do is to go through all the light: PrepareLightsForGPU

bool enableBakeShadowMask;

using (new ProfilingSample(cmd, "TP_PrepareLightsForGPU", CustomSamplerId.TPPrepareLightsForGPU.GetSampler()))

{

enableBakeShadowMask = m_LightLoop.PrepareLightsForGPU(cmd, hdCamera, m_ShadowSettings, m_CullResults, m_ReflectionProbeCullResults, densityVolumes);

}

ConfigureForShadowMask(enableBakeShadowMask, cmd);
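//A minimal, engine-free sketch of the check described in the comments above: walk the visible lights and report whether any of them was baked in shadow mask mode. BakeType, MixedMode and BakingOutput are hypothetical stand-ins for Unity's LightmapBakeType, MixedLightingMode and LightBakingOutput, and this is not the actual body of PrepareLightsForGPU.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for Unity's LightmapBakeType / MixedLightingMode enums.
enum BakeType { Realtime, Baked, Mixed }
enum MixedMode { IndirectOnly, Subtractive, Shadowmask }

// Hypothetical stand-in for the per-light baking output mentioned in the comments.
struct BakingOutput
{
    public BakeType lightmapBakeType;
    public MixedMode mixedLightingMode;
}

static class ShadowMaskSketch
{
    // Returns true as soon as one visible light was baked in shadow mask mode,
    // which is the condition for allocating the extra GBuffer target.
    public static bool NeedsShadowMask(IEnumerable<BakingOutput> visibleLights)
    {
        foreach (var light in visibleLights)
        {
            if (light.lightmapBakeType == BakeType.Mixed &&
                light.mixedLightingMode == MixedMode.Shadowmask)
            {
                return true;
            }
        }
        return false;
    }

    static void Main()
    {
        var lights = new List<BakingOutput>
        {
            new BakingOutput { lightmapBakeType = BakeType.Realtime },
            new BakingOutput { lightmapBakeType = BakeType.Mixed, mixedLightingMode = MixedMode.Shadowmask },
        };
        Console.WriteLine(NeedsShadowMask(lights));
    }
}
```

//The result plays the role of enableBakeShadowMask: it decides whether the shadow mask shader variant and the extra GBuffer target are used for this frame.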

StartStereoRendering(renderContext, hdCamera);

//VR-related; skipped here.

ClearBuffers(hdCamera, cmd);

//Prepares the render targets (RTs): m_CameraColorBuffer, m_CameraDepthStencilBuffer, m_CameraSssDiffuseLightingBuffer and m_GbufferManager.GetBuffersRTI().

// TODO: Add stereo occlusion mask

RenderDepthPrepass(m_CullResults, hdCamera, renderContext, cmd);

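//Now the real work begins: the depth prepass. There are three cases:

//1. enableForwardRenderingOnly: WRITE_NORMAL_BUFFER is enabled. After NormalBuffer is bound as the color RT and m_CameraDepthStencilBuffer as the depth RT, the call renderContext.DrawRenderers(cull.visibleRenderers, ref drawSettings, filterSettings, stateBlock.Value); takes cull.visibleRenderers, keeps the objects in HDRenderQueue.k_RenderQueue_AllOpaque (render queue 2000-2500), sorts them with SortFlags.CommonOpaque, and draws them with the "DepthOnly" and "DepthForwardOnly" passes of their materials.

//Interestingly, besides the three settings above, RendererConfiguration can request per-object data such as PerObjectLightProbe (I have not yet worked out how this is meant to be used), the SetOverrideMaterial API can replace the material and pass used for drawing, and a RenderStateBlock can override blendState, stencilState and so on.

//The "DepthOnly" and "DepthForwardOnly" passes come up again and again later, so let's look at what they do in Lit.shader and StackLit.shader. Start with "DepthOnly": its VS and PS live in ShaderPassDepthOnly.hlsl. The VS input struct AttributesMesh is defined in VaryingMesh.hlsl and holds the usual vertex attributes. The VS output type PackedVaryingsType is defined in VertMesh.hlsl; the vertex function does routine work but finishes by calling PackVaryingsMeshToPS to pack the VS outputs, which reduces the amount of data and work going through rasterization, a nice trick. The PS is more involved, but in short: when WRITE_NORMAL_BUFFER is enabled, it writes packNormalWS and perceptualRoughness to NormalBuffer and depth to m_CameraDepthStencilBuffer; when WRITE_NORMAL_BUFFER is not enabled, it writes float4(0.0, 0.0, 0.0, 0.0) to the RT. "DepthForwardOnly" is essentially the same as "DepthOnly", except that it always enables WRITE_NORMAL_BUFFER.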

//2. enableDepthPrepassWithDeferredRendering || enableDBuffer: WRITE_NORMAL_BUFFER is disabled. First, with m_CameraDepthStencilBuffer as the RT, the objects of cull.visibleRenderers in HDRenderQueue.k_RenderQueue_AllOpaque (render queue 2000-2500) are sorted with SortFlags.CommonOpaque and drawn with the "DepthOnly" pass of their materials, which writes depth. Then, with NormalBuffer as the color RT and m_CameraDepthStencilBuffer as the depth RT, the objects of cull.visibleRenderers in HDRenderQueue.k_RenderQueue_AllOpaque are drawn with the "DepthForwardOnly" pass of their materials.

//Compared with case 1, objects that have a "DepthOnly" pass but no "DepthForwardOnly" pass no longer write their normals to NormalBuffer.

//3. Otherwise: WRITE_NORMAL_BUFFER is disabled. First, with m_CameraDepthStencilBuffer as the RT, the objects of cull.visibleRenderers in the range min = (int)RenderQueue.AlphaTest, max = (int)RenderQueue.GeometryLast - 1 are sorted with SortFlags.CommonOpaque and drawn with the "DepthOnly" pass of their materials, which writes depth. Then, with NormalBuffer as the color RT and m_CameraDepthStencilBuffer as the depth RT, the objects of cull.visibleRenderers in HDRenderQueue.k_RenderQueue_AllOpaque are drawn with the "DepthForwardOnly" pass of their materials.

//Compared with case 2, objects that only have a "DepthOnly" pass and sit in render queue 2000-2449 (below RenderQueue.AlphaTest, which is 2450) no longer write their depth to the depth RT m_CameraDepthStencilBuffer.

//If enableTransparentPrepass is on, the objects of cull.visibleRenderers in HDRenderQueue.k_RenderQueue_AllTransparent (render queue 2650-3100) are sorted with SortFlags.CommonTransparent and drawn with the "TransparentDepthPrepass" pass of their materials.

//The difference between "TransparentDepthPrepass" and "DepthOnly" is that "TransparentDepthPrepass" uses ColorMask 0.
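//To make the difference between cases 2 and 3 concrete, here is an engine-free sketch of the selection step only: keep the renderers whose queue falls in a range and whose material has the requested pass. RendererInfo and Select are hypothetical names for illustration; in HDRP this filtering is done by renderContext.DrawRenderers from the filter and draw settings, and sorting (SortFlags.CommonOpaque) is left out here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of a visible renderer: its render queue and the pass names of its material.
sealed class RendererInfo
{
    public readonly string Name;
    public readonly int Queue;
    public readonly string[] Passes;

    public RendererInfo(string name, int queue, params string[] passes)
    {
        Name = name;
        Queue = queue;
        Passes = passes;
    }
}

static class DepthPrepassSketch
{
    // Values of Unity's RenderQueue.Geometry, AlphaTest and GeometryLast.
    public const int Geometry = 2000, AlphaTest = 2450, GeometryLast = 2500;

    // Names of the renderers in [min, max] (inclusive) whose material contains the given pass.
    public static List<string> Select(IEnumerable<RendererInfo> visible, int min, int max, string pass)
    {
        return visible
            .Where(r => r.Queue >= min && r.Queue <= max && Array.IndexOf(r.Passes, pass) >= 0)
            .Select(r => r.Name)
            .ToList();
    }

    static void Main()
    {
        var visible = new[]
        {
            new RendererInfo("Rock", 2000, "DepthOnly", "GBuffer"),
            new RendererInfo("Fence", 2450, "DepthOnly", "GBuffer"),
            new RendererInfo("Hair", 2000, "DepthForwardOnly"),
        };

        // Case 2: the whole opaque range gets a "DepthOnly" prepass -> Rock, Fence
        Console.WriteLine(string.Join(", ", Select(visible, Geometry, GeometryLast, "DepthOnly")));

        // Case 3: only alpha-tested queues get a "DepthOnly" prepass -> Fence
        Console.WriteLine(string.Join(", ", Select(visible, AlphaTest, GeometryLast - 1, "DepthOnly")));

        // Both cases: "DepthForwardOnly" is drawn over the whole opaque range -> Hair
        Console.WriteLine(string.Join(", ", Select(visible, Geometry, GeometryLast, "DepthForwardOnly")));
    }
}
```

//In case 3 "Rock" gets no depth prepass at all; under this reading its depth is written later, when its "GBuffer" pass runs.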

// This will bind the depth buffer if needed for DBuffer

RenderDBuffer(hdCamera, cmd, renderContext, m_CullResults);

//First the depth buffer m_CameraDepthStencilBuffer is copied to m_CameraDepthBufferCopy (not needed on consoles, possibly because a console can read and write the same RT at once?). Then the color RTs are set to the three RTs of m_DbufferManager created earlier (they are cleared here), and the depth RT to m_CameraDepthStencilBuffer. After that, the objects of cull.visibleRenderers in HDRenderQueue.k_RenderQueue_AllOpaque (render queue 2000-2500) are sorted with SortFlags.CommonOpaque and drawn with the "DBufferMesh" pass of their materials.

//DBufferMesh writes baseColor, normalWS and mask to the three RTs of m_DbufferManager, with ZWrite Off, ZTest LEqual, Blend SrcAlpha OneMinusSrcAlpha, Zero OneMinusSrcAlpha.

RenderGBuffer(m_CullResults, hdCamera, enableBakeShadowMask, renderContext, cmd);

//This is the main event: the GBuffer. The color RTs are set to the four RTs of m_GbufferManager created earlier (five if enableShadowMask), and the depth RT to m_CameraDepthStencilBuffer. Then the objects of cull.visibleRenderers in HDRenderQueue.k_RenderQueue_AllOpaque (render queue 2000-2500) are sorted with SortFlags.CommonOpaque and drawn with the "GBuffer" pass of their materials, with rendererConfiguration set to: RendererConfiguration.PerObjectLightProbe | RendererConfiguration.PerObjectLightmaps | RendererConfiguration.PerObjectLightProbeProxyVolume.

//outGBuffer0 : baseColor, specularOcclusion / float4(sssData.diffuseColor, PackFloatInt8bit(sssData.subsurfaceMask, sssData.diffusionProfile, 16))

//outGBuffer1 : float4(packNormalWS, normalData.perceptualRoughness)

//outGBuffer2 : float3(surfaceData.specularOcclusion, surfaceData.thickness, outGBuffer0.a) / float3(surfaceData.anisotropy * 0.5 + 0.5, sinOrCos, PackFloatInt8bit(surfaceData.metallic, storeSin | quadrant, 8)) / float3(surfaceData.iridescenceMask, surfaceData.iridescenceThickness, PackFloatInt8bit(surfaceData.metallic, 0, 8)) / FastLinearToSRGB(fresnel0), PackFloatInt8bit(coatMask, materialFeatureId, 8)

//outGBuffer3 : float4(bakeDiffuseLighting, 0.0)
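//Several GBuffer channels above go through PackFloatInt8bit, which stores a [0, 1] float and a small integer together in one 8-bit channel. The sketch below illustrates that idea with a simplified scheme (integer in the high bits, quantized float in the low bits). It is an assumption made for illustration, not HDRP's exact formula; Pack and Unpack are hypothetical names, and maxi is assumed to be a power of two that divides 256.

```csharp
using System;

static class PackSketch
{
    // Packs f in [0, 1] and i in [0, maxi) into one value in [0, 1] that survives 8-bit storage.
    public static float Pack(float f, int i, int maxi)
    {
        int levels = 256 / maxi;                                   // quantization steps left for the float
        int q = (int)Math.Round(Math.Clamp(f, 0f, 1f) * (levels - 1));
        int packed = i * levels + q;                               // 0..255
        return packed / 255f;
    }

    // Recovers the quantized float and the integer from a packed channel value.
    public static void Unpack(float value, int maxi, out float f, out int i)
    {
        int levels = 256 / maxi;
        int packed = (int)Math.Round(value * 255f);
        i = packed / levels;
        f = (packed % levels) / (float)(levels - 1);
    }

    static void Main()
    {
        // Example: a metallic value stored next to a 3-bit integer (maxi = 8).
        float channel = Pack(0.75f, 5, 8);
        Unpack(channel, 8, out float metallic, out int flags);
        Console.WriteLine($"{metallic} {flags}");
    }
}
```

//The trade-off is precision: with maxi = 8 the float keeps 32 levels, and with maxi = 16 only 16 levels.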

// We can now bind the normal buffer to be use by any effect

m_NormalBufferManager.BindNormalBuffers(cmd);

//GBuffer(1) holds the normals of the objects whose materials have a "DepthForwardOnly" pass, plus those whose materials have a "GBuffer" pass.

// In both forward and deferred, everything opaque should have been rendered at this point so we can safely copy the depth buffer for later processing.

CopyDepthBufferIfNeeded(cmd);

RenderDepthPyramid(hdCamera, cmd, renderContext, FullScreenDebugMode.DepthPyramid);

//??

// TODO: In the future we will render object velocity at the same time as depth prepass (we need C++ modification for this)

// Once the C++ change is here we will first render all object without motion vector then motion vector object

// We can't currently render object velocity after depth prepass because if there is no depth prepass we can have motion vector write that should have been rejected

RenderObjectsVelocity(m_CullResults, hdCamera, renderContext, cmd);

//Draws the per-object motion vectors: if enableMotionVectors && enableObjectMotionVectors, DepthTextureMode.MotionVectors | DepthTextureMode.Depth is enabled in depthTextureMode, the color RT is set to m_VelocityBuffer and the depth RT to m_CameraDepthStencilBuffer. Then the objects of cull.visibleRenderers in HDRenderQueue.k_RenderQueue_AllOpaque (render queue 2000-2500) are sorted with SortFlags.CommonOpaque and drawn with the "MotionVectors" pass of their materials, with rendererConfiguration set to RendererConfiguration.PerObjectMotionVectors.

//Interestingly, the VS of the "MotionVectors" pass receives the vertex's previous-frame model-space position through TEXCOORD4. That position is transformed to clip space by the MVP matrix, and the resulting 2D velocity vector is written to the color RT. ZWrite On
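//A minimal sketch of that computation using System.Numerics: project the current and the previous model-space position with their own MVP matrices and take the screen-space difference. The class and method names are hypothetical, and the 0.5 factor (NDC delta to UV delta) is an assumption about the output convention, not taken from the HDRP shader.

```csharp
using System;
using System.Numerics;

static class ObjectMotionSketch
{
    // Projects a model-space position to normalized device coordinates (x, y in [-1, 1]).
    static Vector2 ToNdc(Vector3 positionOS, Matrix4x4 mvp)
    {
        // System.Numerics uses row vectors: clip = position * MVP.
        Vector4 clip = Vector4.Transform(new Vector4(positionOS, 1f), mvp);
        return new Vector2(clip.X, clip.Y) / clip.W;
    }

    // 2D velocity of one vertex between the previous and the current frame, in UV units.
    public static Vector2 Velocity(Vector3 currOS, Matrix4x4 currMvp, Vector3 prevOS, Matrix4x4 prevMvp)
    {
        return (ToNdc(currOS, currMvp) - ToNdc(prevOS, prevMvp)) * 0.5f;
    }

    static void Main()
    {
        Matrix4x4 view = Matrix4x4.CreateLookAt(new Vector3(0, 0, 5), Vector3.Zero, Vector3.UnitY);
        Matrix4x4 proj = Matrix4x4.CreatePerspectiveFieldOfView(MathF.PI / 2f, 1f, 0.1f, 100f);

        // The object moved one unit to the right between the two frames; the vertex itself did not change.
        Matrix4x4 prevMvp = view * proj;
        Matrix4x4 currMvp = Matrix4x4.CreateTranslation(1, 0, 0) * view * proj;

        Console.WriteLine(Velocity(Vector3.Zero, currMvp, Vector3.Zero, prevMvp));
    }
}
```

//For skinned or otherwise deformed meshes the previous position differs from the current one, which is why it has to be supplied per vertex.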

RenderCameraVelocity(m_CullResults, hdCamera, renderContext, cmd);

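//Draws the camera motion vectors: if enableMotionVectors, DepthTextureMode.Depth is enabled in depthTextureMode, and the first pass of the material m_CameraMotionVectorsMaterial (CameraMotionVectors.shader) is drawn with m_VelocityBuffer as the color RT and m_CameraDepthStencilBuffer as the depth RT. The draw is a procedural geometry of MeshTopology.Triangles with three vertices and one instance.

//CameraMotionVectors.shader handles the motion caused by the camera itself: because the camera matrices differ between two frames, the world-space point behind a given screen-space pixel lands at different screen-space positions under each camera matrix. The shader computes the resulting 2D velocity vector and writes it to the color RT (pixels already covered by the per-object motion vectors are rejected by the stencil test and are not recomputed). Cull Off ZWrite Off ZTest Always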
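//The reprojection behind camera motion vectors can be sketched as follows: rebuild the world-space position of a pixel from its NDC coordinates and depth with the inverse of the current view-projection matrix, project it again with the previous view-projection matrix, and take the difference. This uses System.Numerics conventions (row vectors, depth in [0, 1]); the names and the 0.5 scale are assumptions for illustration, not the shader's actual code.

```csharp
using System;
using System.Numerics;

static class CameraMotionSketch
{
    // 2D velocity (in UV units) of a static world point seen at `ndc` with the given device depth.
    public static Vector2 Velocity(Vector2 ndc, float deviceDepth, Matrix4x4 currViewProj, Matrix4x4 prevViewProj)
    {
        if (!Matrix4x4.Invert(currViewProj, out Matrix4x4 invViewProj))
            throw new ArgumentException("The view-projection matrix is not invertible.");

        // Unproject the pixel to world space.
        Vector4 world = Vector4.Transform(new Vector4(ndc, deviceDepth, 1f), invViewProj);
        world /= world.W;

        // Project the same world point with last frame's camera.
        Vector4 prevClip = Vector4.Transform(world, prevViewProj);
        Vector2 prevNdc = new Vector2(prevClip.X, prevClip.Y) / prevClip.W;

        return (ndc - prevNdc) * 0.5f;
    }

    static void Main()
    {
        Matrix4x4 proj = Matrix4x4.CreatePerspectiveFieldOfView(MathF.PI / 2f, 1f, 0.1f, 100f);
        Matrix4x4 prevVp = Matrix4x4.CreateLookAt(new Vector3(0, 0, 5), Vector3.Zero, Vector3.UnitY) * proj;
        Matrix4x4 currVp = Matrix4x4.CreateLookAt(new Vector3(1, 0, 5), new Vector3(1, 0, 0), Vector3.UnitY) * proj;

        // Depth of the world point (1, 0, 0), which sits at the screen center of the current camera.
        Vector4 clip = Vector4.Transform(new Vector4(1, 0, 0, 1), currVp);
        float depth = clip.Z / clip.W;

        Console.WriteLine(Velocity(Vector2.Zero, depth, currVp, prevVp));
    }
}
```

//With an unchanged camera the two projections coincide and the velocity is zero, so only camera movement contributes here.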

//Once drawn, the result can be displayed with m_CurrentDebugDisplaySettings.fullScreenDebugMode = FullScreenDebugMode.MotionVectors.

//The SceneView has no motion vectors, so m_VelocityBuffer is cleared there.

// Depth texture is now ready, bind it (Depth buffer could have been bind before if DBuffer is enable)

cmd.SetGlobalTexture(HDShaderIDs._CameraDepthTexture, GetDepthTexture());

// Caution: We require sun light here as some skies use the sun light to render, it means that UpdateSkyEnvironment must be called after PrepareLightsForGPU.

// TODO: Try to arrange code so we can trigger this call earlier and use async compute here to run sky convolution during other passes (once we move convolution shader to compute).

UpdateSkyEnvironment(hdCamera, cmd);

//Sets the skybox and related parameters from RenderSettings.

StopStereoRendering(renderContext, hdCamera);

//VR-related; skipped here.

if (m_CurrentDebugDisplaySettings.IsDebugMaterialDisplayEnabled())

{

RenderDebugViewMaterial(m_CullResults, hdCamera, renderContext, cmd);

PushColorPickerDebugTexture(cmd, m_CameraColorBuffer, hdCamera);

}

else

{

StartStereoRendering(renderContext, hdCamera);

//VR-related; skipped here.

using (new ProfilingSample(cmd, "Render SSAO", CustomSamplerId.RenderSSAO.GetSampler()))

{

// TODO: Everything here (SSAO, Shadow, Build light list, deferred shadow, material and light classification can be parallelize with Async compute)

RenderSSAO(cmd, hdCamera, renderContext, postProcessLayer);

//Generates the AO map through MultiScaleVO (GenerateAOMap).

}

// Clear and copy the stencil texture needs to be moved to before we invoke the async light list build,

// otherwise the async compute queue can end up using that texture before the graphics queue is done with it.

// TODO: Move this code inside LightLoop

if (m_LightLoop.GetFeatureVariantsEnabled())

{

// For material classification we use compute shader and so can't read into the stencil, so prepare it.

using (new ProfilingSample(cmd, "Clear and copy stencil texture", CustomSamplerId.ClearAndCopyStencilTexture.GetSampler()))

{

HDUtils.SetRenderTarget(cmd, hdCamera, m_CameraStencilBufferCopy, ClearFlag.Color, CoreUtils.clearColorAllBlack);

// In the material classification shader we will simply test is we are no lighting

// Use ShaderPassID 1 => "Pass 1 - Write 1 if value different from stencilRef to output"

HDUtils.DrawFullScreen(cmd, hdCamera, m_CopyStencilForNoLighting, m_CameraStencilBufferCopy, m_CameraDepthStencilBuffer, null, 1);

//Draws the second pass of the material m_CopyStencilForNoLighting (CopyStencilBuffer.shader), with m_CameraStencilBufferCopy as the color RT and m_CameraDepthStencilBuffer as the depth RT. The draw is a procedural geometry of MeshTopology.Triangles with three vertices and one instance.

//CopyStencilBuffer.shader writes 1 to the color RT m_CameraStencilBufferCopy wherever the stencil buffer value differs from the stencil reference value. It uses the [earlydepthstencil] attribute: "Force the stencil test before the UAV write."
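//A CPU-side sketch of what this clear-and-copy step produces, assuming one stencil byte per pixel: the copy starts cleared to 0 and receives 1 wherever the stencil value differs from the reference. CopyStencilForNoLighting is a hypothetical helper named after the material; the real work happens on the GPU through the stencil test.

```csharp
using System;

static class StencilCopySketch
{
    // Returns a mask with 1 where stencil[i] != stencilRef and 0 elsewhere.
    public static byte[] CopyStencilForNoLighting(byte[] stencil, byte stencilRef)
    {
        var copy = new byte[stencil.Length]; // starts at 0, like the ClearFlag.Color clear to black
        for (int i = 0; i < stencil.Length; i++)
        {
            if (stencil[i] != stencilRef)
            {
                copy[i] = 1;
            }
        }
        return copy;
    }

    static void Main()
    {
        byte[] stencil = { 0, 2, 0, 1 };
        Console.WriteLine(string.Join(" ", CopyStencilForNoLighting(stencil, 0)));
    }
}
```

//A compute shader can then read this mask as an ordinary texture, which matters because, as the comment above notes, it cannot read the stencil buffer directly.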

}

}

StopStereoRendering(renderContext, hdCamera);

//VR-related; skipped here.

GPUFence buildGPULightListsCompleteFence = new GPUFence();

//Async compute

if (hdCamera.frameSettings.enableAsyncCompute)

{

GPUFence startFence = cmd.CreateGPUFence();

renderContext.ExecuteCommandBuffer(cmd);

cmd.Clear();

buildGPULightListsCompleteFence = m_LightLoop.BuildGPULightListsAsyncBegin(hdCamera, renderContext, m_CameraDepthStencilBuffer, m_CameraStencilBufferCopy, startFence, m_SkyManager.IsLightingSkyValid());

}

using (new ProfilingSample(cmd, "Render shadows", CustomSamplerId.RenderShadows.GetSampler()))

{

// This call overwrites camera properties passed to the shader system.

m_LightLoop.RenderShadows(renderContext, cmd, m_CullResults);

//At its core, this draws with renderContext.DrawShadows(ref dss).

// Overwrite camera properties set during the shadow pass with the original camera properties.

renderContext.SetupCameraProperties(camera, hdCamera.frameSettings.enableStereo);

hdCamera.SetupGlobalParams(cmd, m_Time, m_LastTime, m_FrameCount);

if (hdCamera.frameSettings.enableStereo)

hdCamera.SetupGlobalStereoParams(cmd);

}

using (new ProfilingSample(cmd, "Deferred directional shadows", CustomSamplerId.RenderDeferredDirectionalShadow.GetSampler()))

{