This section is based on the WWDC 2020 session "Build GPU binaries with Metal".

Related reading:

- First up I'll provide an overview of Metal's current Shader compilation model.

- Next I'm going to introduce Metal Binary Archives, a new way for you to take control over shader caching and ship precompiled GPU executables to your users.

- I'm excited to share the new support for dynamic libraries in Metal. This feature will allow you to link your compute shaders against utility libraries dynamically.

- And finally Ravi will present in detail the set of tools that you have in your

Metal Shader compilation model

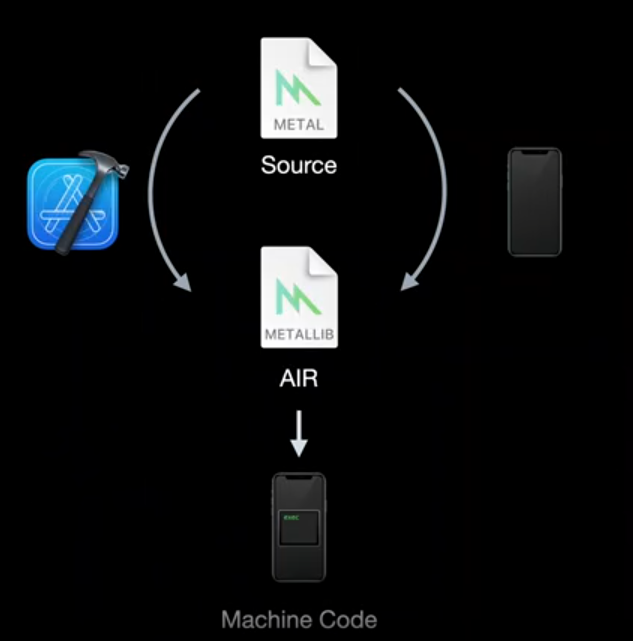

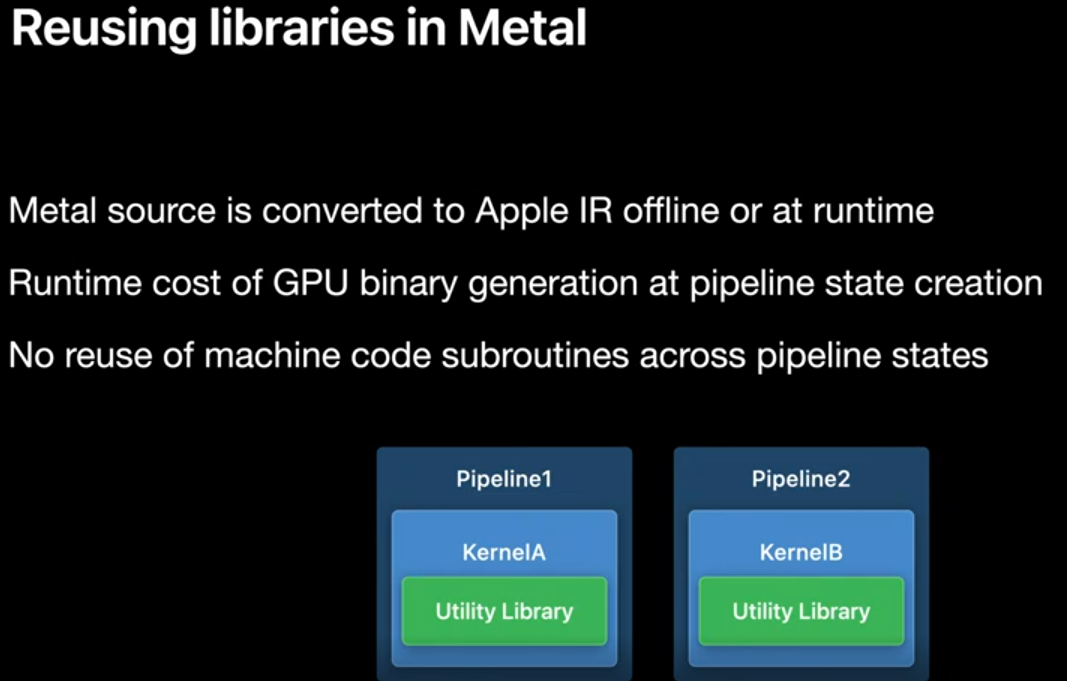

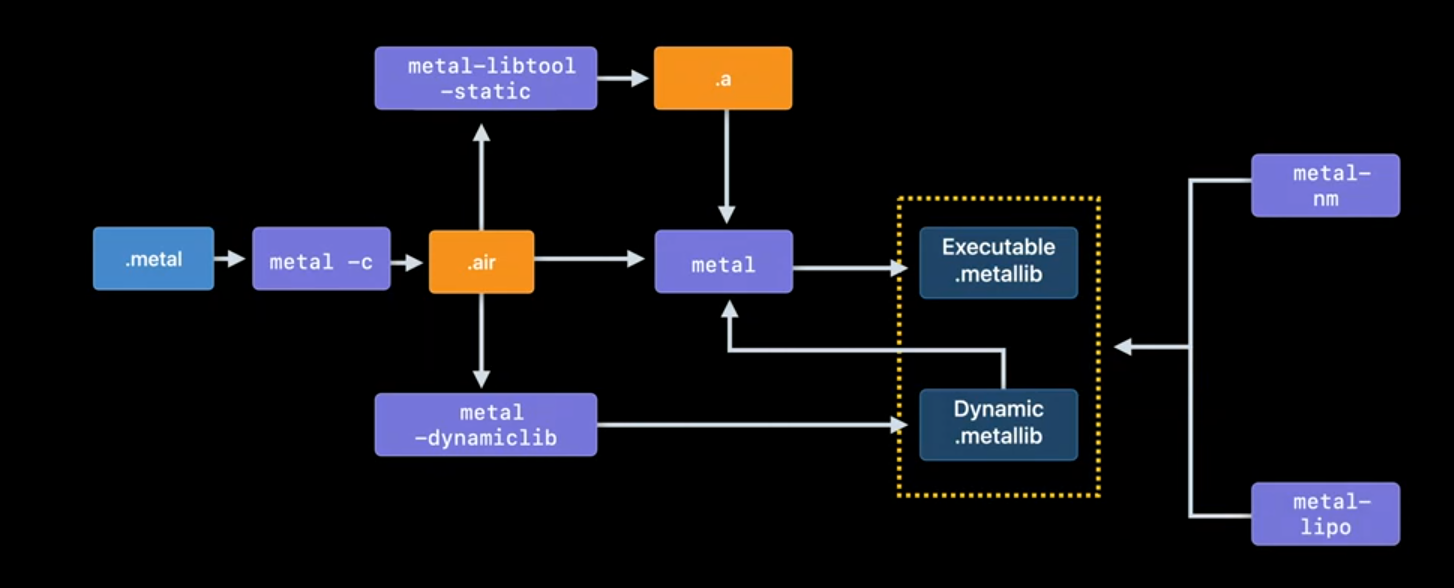

As you know, the Metal Shading Language is our programming language for shaders. Metal compiles this code into Apple's Intermediate Representation, also known as AIR. This can be done offline, in Xcode, or at runtime on the target device itself. Building offline avoids the runtime cost of compiling source code to AIR.

In both cases, however, when creating pipeline state objects, this intermediate representation is further compiled on device to generate the machine-specific code needed for each particular GPU. This process occurs for every pipeline state.

To accelerate recompilation and recreation of pipelines, we cache the Metal function variants produced in this step for future pipeline creation. This process is great and has served us well, but when you build pipeline objects early on to provide a hitch-free experience, it can potentially result in long loading screens. Additionally, under this model apps are unable to reuse any previously generated machine code subroutines across different pipeline state objects.

You might want a way to save the entire time cost of pipeline state compilation, from source, to AIR, to a GPU binary. You might also want a mechanism that enables sharing common subroutines and utility functions without the need to compile the same code twice or have it loaded in memory more than once. Having the ability to ship apps that already include the final compiled code for executables as well as libraries gives you the tools to provide a fantastic first-time launch experience. And allowing you to share these executables and libraries with other developers makes their development easier.

Metal Binary Archives

One of the ways we're addressing these needs is via the Metal Binary Archives.

Since the beginnings of Metal, apps have benefited from a system-wide shader cache that accelerates creating pipeline objects that have been created from previous runs of the application.

With Metal Binary Archives, explicit control over pipeline state caching is being provided to you. This direct control over caching gives you the opportunity to manually collect compiled pipeline state objects, organize them into different archives based on your usage or need, and even harvest them from a device and distribute them to other compatible devices. Binary archives can be thought of as any other asset type. You have full control over the binary archive lifetimes, and these persist as long as desired. Binary Archives are a feature of the Metal GPU families Apple3 (for example, the A9 in iPhone 6s and the A10 in iPhone 7) and Mac1.

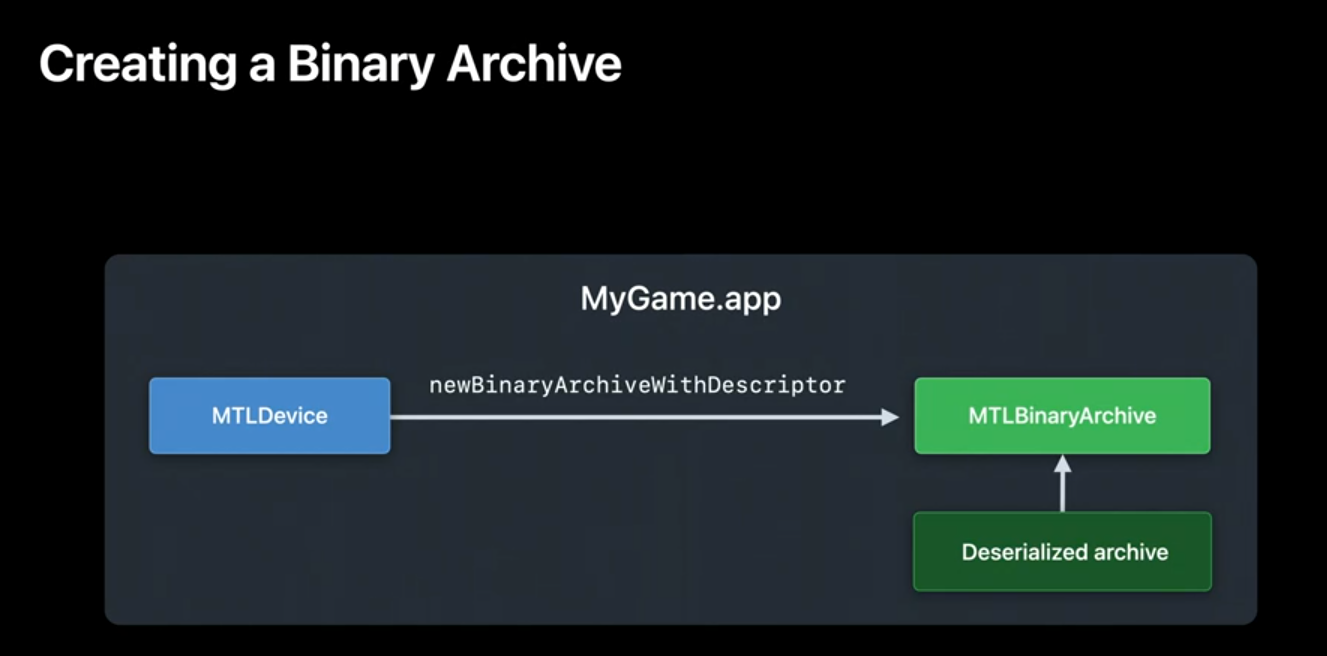

Creating a binary archive is simple. For this feature, we created a new descriptor type for binary archives. I use this descriptor to create a new MTLBinaryArchive from the device. This descriptor contains a URL property, which is used to determine whether I want to create a new, empty archive or load one from disk. When we request that an archive be loaded from disk, the file is memory-mapped in, and we can immediately start to use the loaded archive to accelerate our subsequent pipeline build requests.

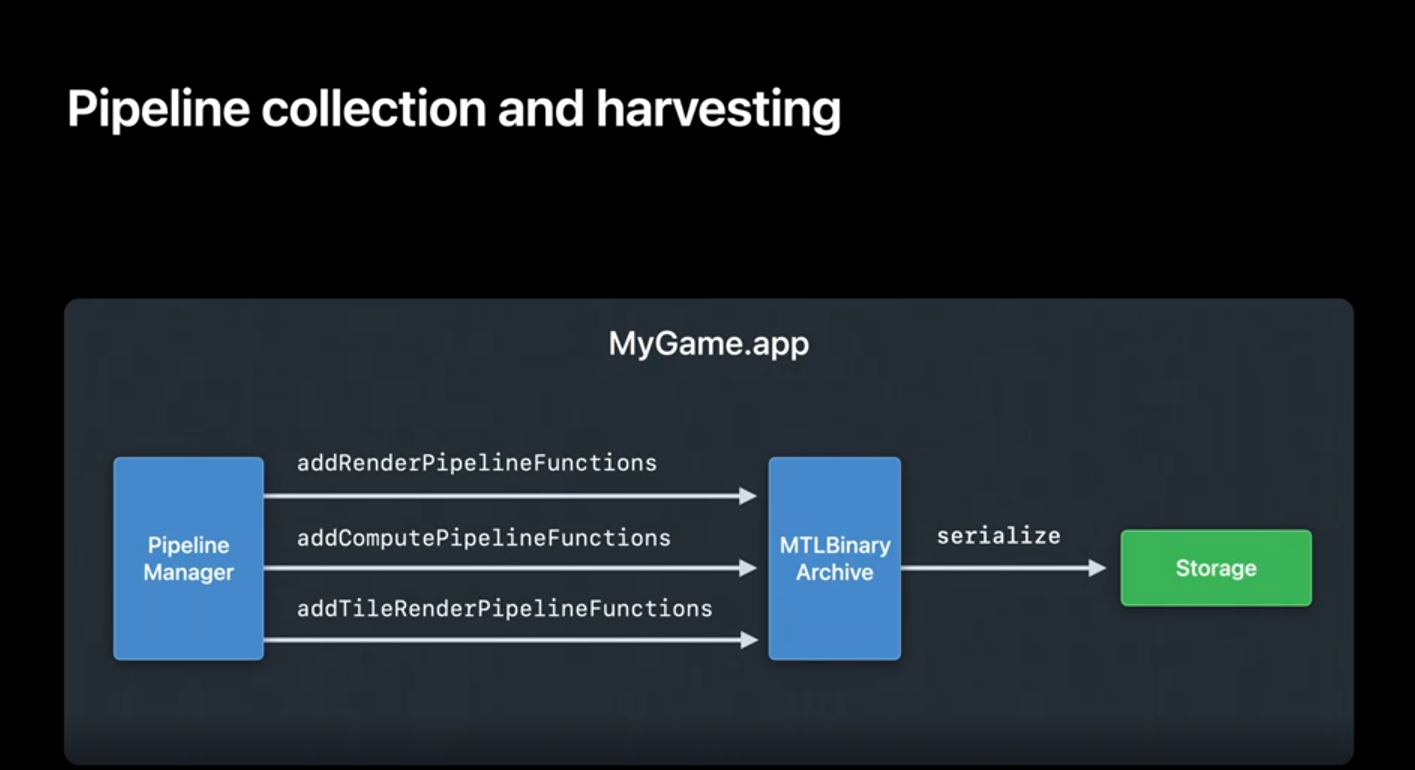

The binary archive API allows me to directly add the pipelines I'm interested in to the archive. I can add render, compute, and tile render pipelines. Adding a pipeline to the binary archive causes a backend compilation of the shader source, generating the machine code to be stored in the archive. Finally, once I'm done collecting all the pipeline objects I'm interested in, I call serializeToURL to save the archive to disk.

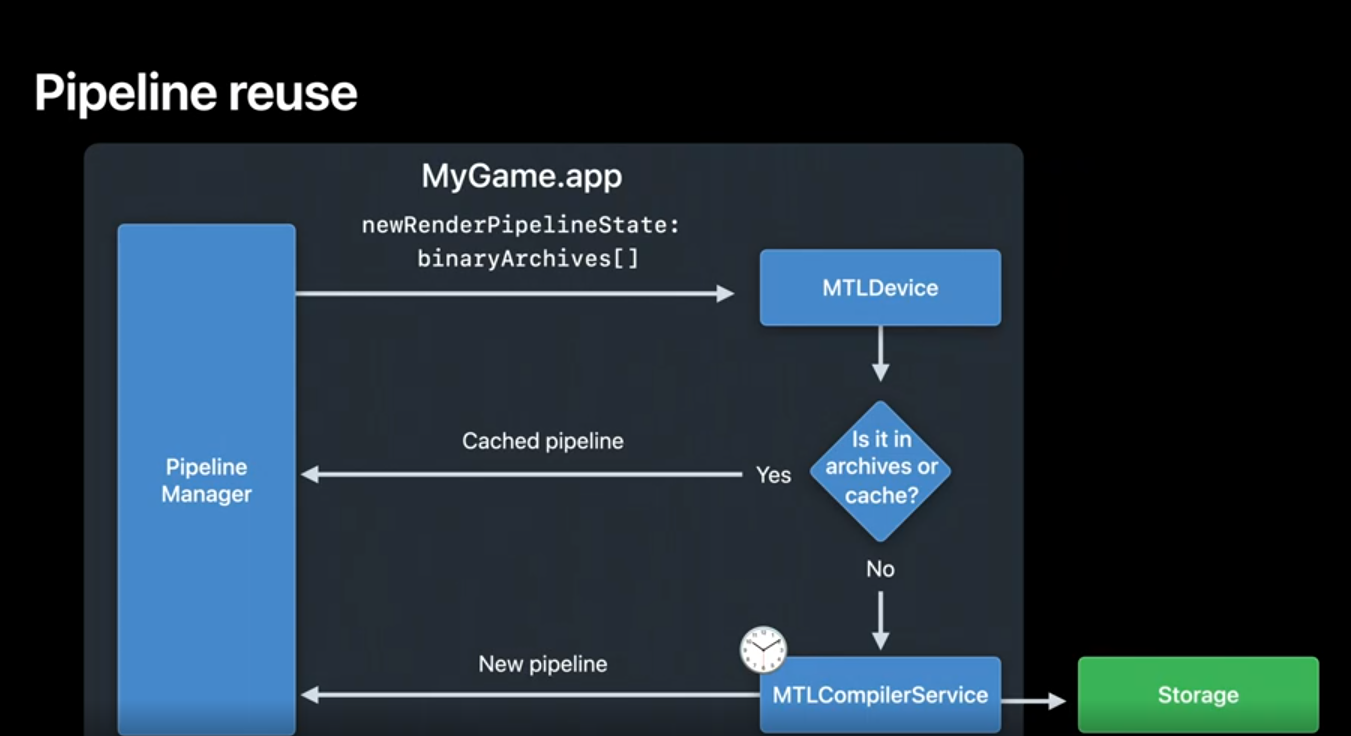

Once I have my binary archives on disk, I can harvest them from a device and deploy them on other compatible devices to accelerate their pipeline state builds. The only requirement is that these other devices have the same GPU and are running the same operating system build. If there's a mismatch, the Metal framework will fall back on runtime compilation of the pipeline functions. Once I have my binary archive populated, reusing a cached pipeline is straightforward.

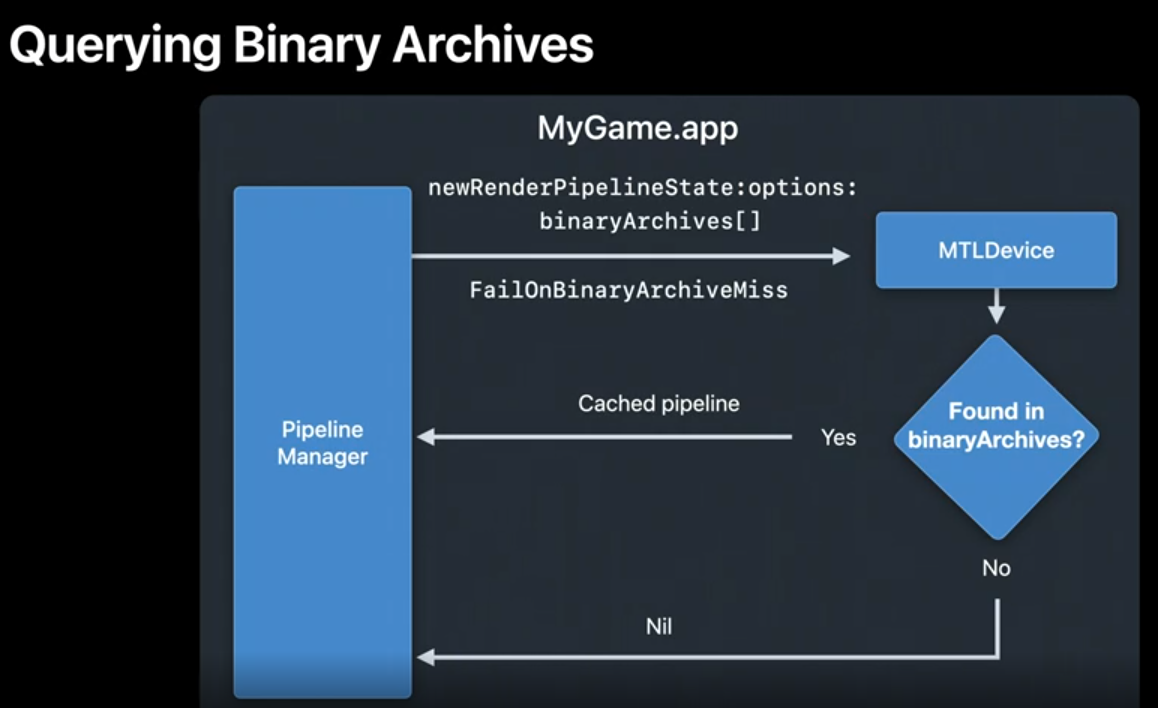

When creating a pipeline, I set the pipeline descriptor's binary archives property to an array of archives. The framework will then search the array linearly for the function binaries. If the pipeline is found in any of the binary archives on the list, it will be returned to you, avoiding the compilation process entirely, and it will not impact the Metal Shader Cache. If the pipeline is not found, the OS's MTLCompilerService will kick into gear and compile my AIR source to machine code, return the result, and cache the result in the Metal Shader Cache. This process takes time, but the pipeline will be cached in the Metal Shader Cache to accelerate any subsequent pipeline build requests.

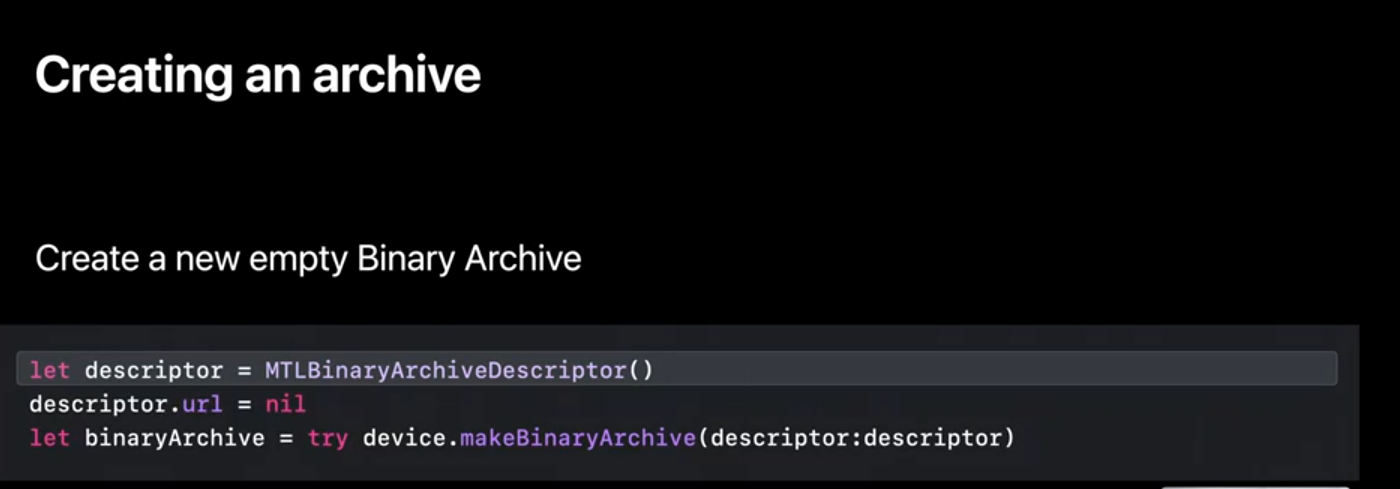

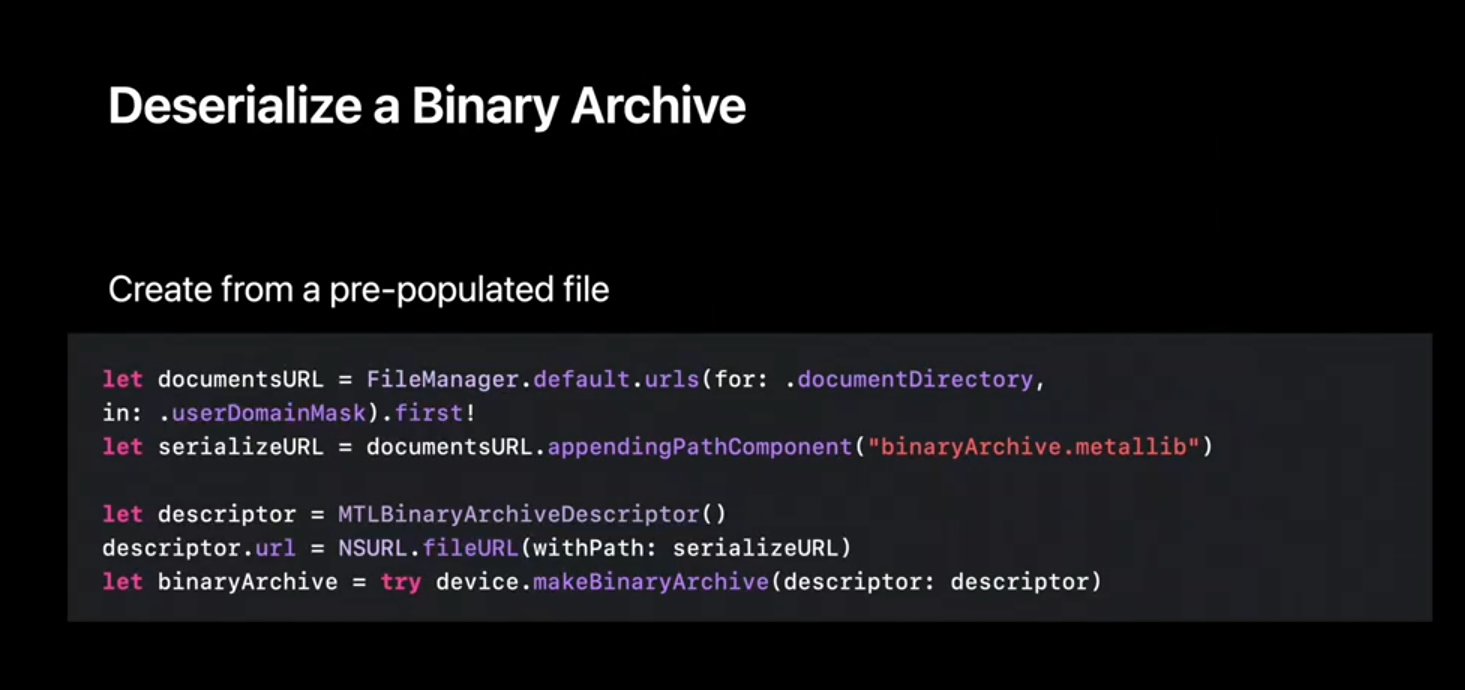

Now that I've gone over the workflow let's take a look at the API to accomplish it. First I create the MTLBinaryArchiveDescriptor. This is used to determine whether I want to create a new, empty archive or to load an existing one. Creating a binary archive is always done from a descriptor. In this case, I set the URL to nil and the device will create a new, empty archive. Finally I call the function makeBinaryArchive to create it.
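The descriptor-based creation described above can be sketched in Swift as follows. This is a minimal sketch, not the code from the session slides: the helper name `makeArchive` is an assumption, and the availability annotation reflects the OS releases in which this API shipped.

```swift
import Foundation
import Metal

/// Creates a binary archive (`makeArchive` is a hypothetical helper name).
/// A nil `url` asks the device for a new, empty archive; a file URL asks it
/// to load a previously serialized archive from disk.
@available(macOS 11.0, iOS 14.0, *)
func makeArchive(device: MTLDevice, url: URL? = nil) throws -> MTLBinaryArchive {
    let descriptor = MTLBinaryArchiveDescriptor()
    descriptor.url = url
    return try device.makeBinaryArchive(descriptor: descriptor)
}
```

Calling `try makeArchive(device: device)` with no URL corresponds to the "new, empty archive" case in the text.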

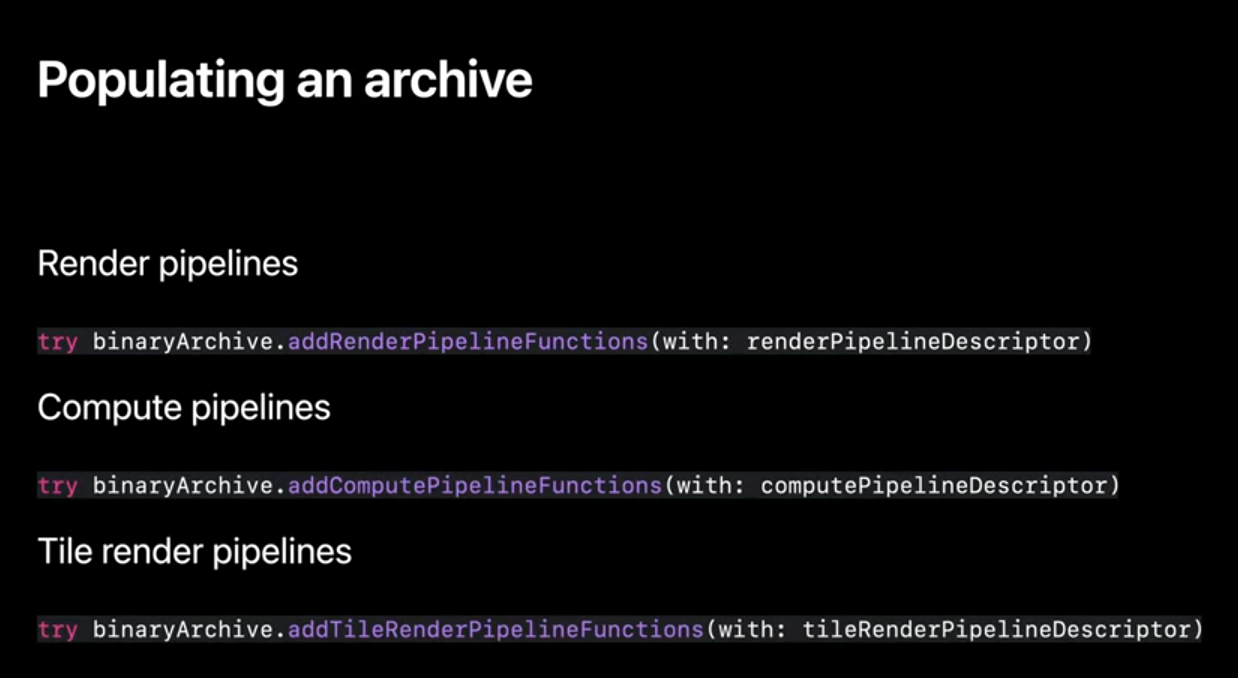

Next I'll populate a binary archive using pipeline descriptors. I can add render, compute, and tile render descriptors to the binary archive.
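As an illustration of populating an archive, the sketch below adds one compute pipeline. The kernel `fill`, its source string, and the helper name `addFillPipeline` are made up for this example; render and tile render descriptors would go through `addRenderPipelineFunctions(descriptor:)` and `addTileRenderPipelineFunctions(descriptor:)` in the same way.

```swift
import Metal

// Hypothetical kernel used only for this example.
let kernelSource = """
#include <metal_stdlib>
using namespace metal;
kernel void fill(device float *out [[buffer(0)]],
                 uint id [[thread_position_in_grid]]) {
    out[id] = 1.0f;
}
"""

/// Compiles the example kernel and adds its pipeline to `archive`.
/// Adding the descriptor triggers back-end compilation into the archive.
@available(macOS 11.0, iOS 14.0, *)
func addFillPipeline(to archive: MTLBinaryArchive, device: MTLDevice) throws {
    let library = try device.makeLibrary(source: kernelSource, options: nil)
    let descriptor = MTLComputePipelineDescriptor()
    descriptor.computeFunction = library.makeFunction(name: "fill")
    try archive.addComputePipelineFunctions(descriptor: descriptor)
}
```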

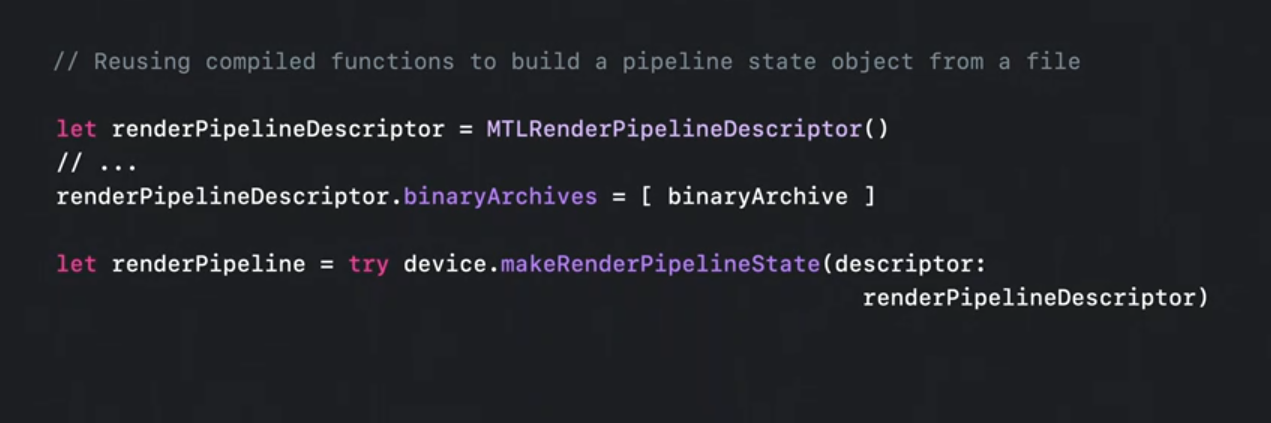

Reusing compiled functions from a binary archive allows me to skip the back-end function compilation. I can create my pipeline descriptors just as I always have and use the new binaryArchives property to indicate which archives should be searched. I want to do this before creating the pipeline.
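A minimal sketch of that lookup, assuming a compute pipeline; the helper name `makePipeline` is hypothetical, and the archives are searched in array order as described earlier.

```swift
import Metal

/// Builds a compute pipeline, letting Metal look in `archives` first.
/// On a miss, Metal falls back to normal runtime compilation.
@available(macOS 11.0, iOS 14.0, *)
func makePipeline(device: MTLDevice,
                  function: MTLFunction,
                  archives: [MTLBinaryArchive]) throws -> MTLComputePipelineState {
    let descriptor = MTLComputePipelineDescriptor()
    descriptor.computeFunction = function
    // Must be set before the pipeline state is created.
    descriptor.binaryArchives = archives
    return try device.makeComputePipelineState(descriptor: descriptor,
                                               options: [],
                                               reflection: nil)
}
```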

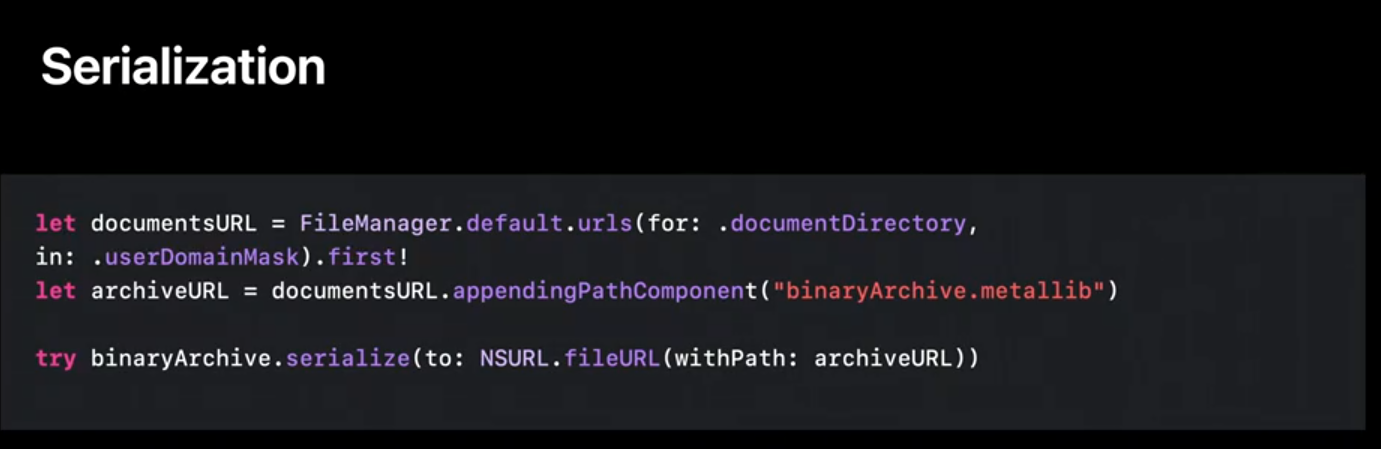

Once you have collected all the pipelines you're interested in, you can serialize the binary archive to a writable file location on disk using the method 'serialize'. Here I'm serializing the archive to my application's 'documents' directory.

On the next run of my app I can now deserialize the archive to avoid recompiling the pipelines that were previously added and serialized. I simply set the URL to point to the location of an existing pre-populated cache on disk.
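The serialize-then-reload round trip might look like the sketch below. The helper names and the idea of passing a file name are assumptions for illustration; only `serialize(to:)`, the descriptor's `url` property, and `makeBinaryArchive(descriptor:)` come from the Metal API.

```swift
import Foundation
import Metal

/// Returns a URL inside the app's Documents directory (hypothetical helper).
func archiveURL(named fileName: String) throws -> URL {
    let documents = try FileManager.default.url(for: .documentDirectory,
                                                in: .userDomainMask,
                                                appropriateFor: nil,
                                                create: true)
    return documents.appendingPathComponent(fileName)
}

/// Writes a populated archive to disk.
@available(macOS 11.0, iOS 14.0, *)
func save(_ archive: MTLBinaryArchive, to url: URL) throws {
    try archive.serialize(to: url)
}

/// On a later launch, loads the archive by pointing the descriptor at the file.
@available(macOS 11.0, iOS 14.0, *)
func loadArchive(device: MTLDevice, from url: URL) throws -> MTLBinaryArchive {
    let descriptor = MTLBinaryArchiveDescriptor()
    descriptor.url = url
    return try device.makeBinaryArchive(descriptor: descriptor)
}
```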

Now, one final note about archive search. Depending on your use case, you may find it helpful to be able to short-circuit the fallback behavior of compiling a pipeline when it's not found in the archive. In this case you can specify the pipeline compile option failOnBinaryArchiveMiss. If the pipeline is found in any of the archives, it is returned to you as usual. However, if it's not found, the device will return nil. One use case I recommend is using this workflow for debugging purposes. Avoiding the compilation process will let you diagnose any problems in your app's logic or your archive's data.
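A sketch of the archive-only lookup, assuming a compute pipeline. In Swift the failed creation surfaces as a thrown error rather than a nil return, so this hypothetical helper uses `try?` to map a miss back to nil.

```swift
import Metal

/// Returns a pipeline only if it is already present in `archives`.
/// With `.failOnBinaryArchiveMiss`, Metal does not fall back to compiling.
@available(macOS 11.0, iOS 14.0, *)
func makePipelineFromArchivesOnly(device: MTLDevice,
                                  function: MTLFunction,
                                  archives: [MTLBinaryArchive]) -> MTLComputePipelineState? {
    let descriptor = MTLComputePipelineDescriptor()
    descriptor.computeFunction = function
    descriptor.binaryArchives = archives
    return try? device.makeComputePipelineState(descriptor: descriptor,
                                                options: .failOnBinaryArchiveMiss,
                                                reflection: nil)
}
```

A nil result here points at either a descriptor that differs from the one that was harvested or an archive that does not match the current device and OS build.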

Let's take a moment to discuss the memory considerations of using binary archives. As mentioned before, it is important to note that the binary archive file is memory-mapped in. This means that we need to reserve a range of virtual memory in order to access the archive's contents. This virtual memory range will be released when you release your cache, so it's important to close any binary archives that are no longer needed for optimal use of the virtual address space.

When collecting new pipelines, Binary Archives present a similar memory footprint to using the system's Metal Shader Cache. But unlike when using the Metal Shader Cache, we have the chance to free up this memory. Having explicit control over archive lifetime allows you to serialize and release a Metal Binary Archive when you are done collecting pipeline state objects. In addition, when you reuse an existing archive, the pipelines in this archive do not count against your active app memory. You can serialize and then reopen this archive and only use it for retrieving cached pipelines, effectively freeing the memory that was used in the collection process. This is not possible when relying on the system's Metal Shader Cache.

I'd like to wrap up this part of the session by discussing some of the best practices for working with Binary Archives. Although there's no size limit for Binary Archives, I recommend dividing your game assets into several different caches. Games are an excellent candidate for breaking up caches into frequently used pipelines and per-level pipelines. Dividing the cache gives you the opportunity to completely release caches that are no longer needed. This will free memory, in case we've collected any new pipelines, as well as the virtual memory range in use. When following this guidance, Binary Archives give you granular control and should be favored over pre-warming the Metal Shader Cache. Modern apps often have too many unique permutations of shader variants that are generated based on user choices. With Metal Binary Archives you can now capture them all at runtime.

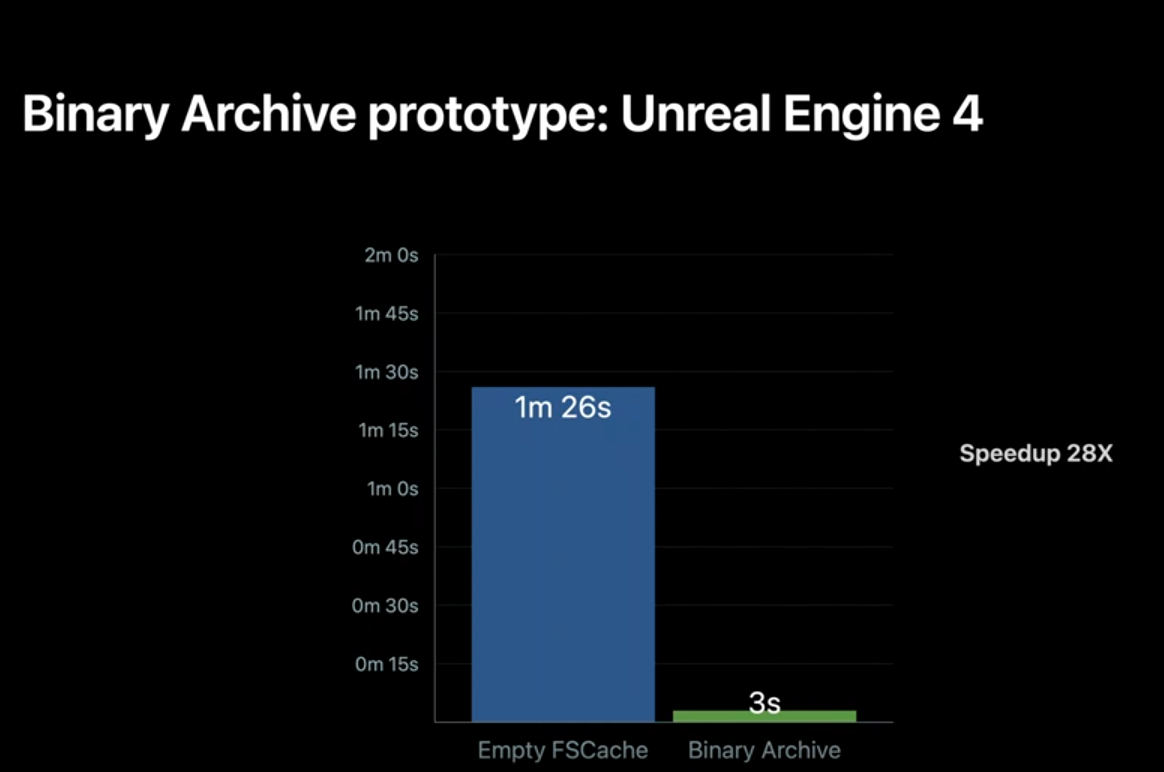

Let's take a look at how this all comes together in practice. We've partnered with Epic Games to quantify exactly how reusing a pre-harvested binary archive can help improve pipeline state object creation times, as well as the developer workflow in the context of Unreal Engine. For this test, we use the pipeline state workload of a AAA title, Fortnite. Fortnite is a large game. It's got a big world and many character and item customization options. This makes for a large number of shader function variants and pipeline state objects, over 11,000 in fact. Epic Games follows the Metal best practices and compiles any needed pipeline state objects at load time, which minimizes hitching at runtime and delivers the smoothest experience possible to users. But apps, as we mentioned, cannot benefit from the Metal Shader Cache before it's been populated, so the upfront compilation time adds up, potentially making the first-time launch experience take longer than desired. By pre-seeding a harvested Metal Binary Archive that had collected function variants from seventeen hundred pipeline state objects, we observed a massive speedup in the creation times compared with starting from an empty Metal Shader Cache.

These results were measured on a six-core 3 GHz Mac mini with 32 GB of RAM. When we focus on pipeline build times, we go from spending 1 minute and 26 seconds building pipelines to just 3 seconds: overall, a speedup of over 28 times.

To summarize, Metal Binary Archives allow you to manually manage pipeline caches. These can be harvested from a device and deployed on other compatible devices to dramatically reduce pipeline creation times the first time a game or app is installed and, in the case of iOS, after a device reboots. AAA games and other apps bound by a very large number of pipeline state objects can benefit from this feature to obtain extraordinary gains in pipeline creation times under these conditions. Building and shipping GPU executable binaries with your application allows you to accelerate your first-time launch and cold-boot app experiences. I hope you take advantage of this new feature. And that's it for Metal Binary Archives.

Dynamic Libraries

Next I'm going to talk about another new feature we're bringing to the compilation model, Dynamic Libraries. Metal Dynamic Libraries are a new feature that will allow you to build well abstracted and reusable compute shader library code for your applications. I'll be discussing the concept, execution, and details of dynamic libraries. Today developers may choose to create utility libraries of Metal functions to compile with their kernels. Offline compilation can save time while generating these libraries, but there's still two costs that occur when using a utility library. Every app pays the costs of generating machine code for the utility library at PSO generation. In addition, compiling multiple pipelines with the same utility library results in duplicated machine code for subroutines. This can result in longer pipeline load times due to back end compilation and increased memory usage.

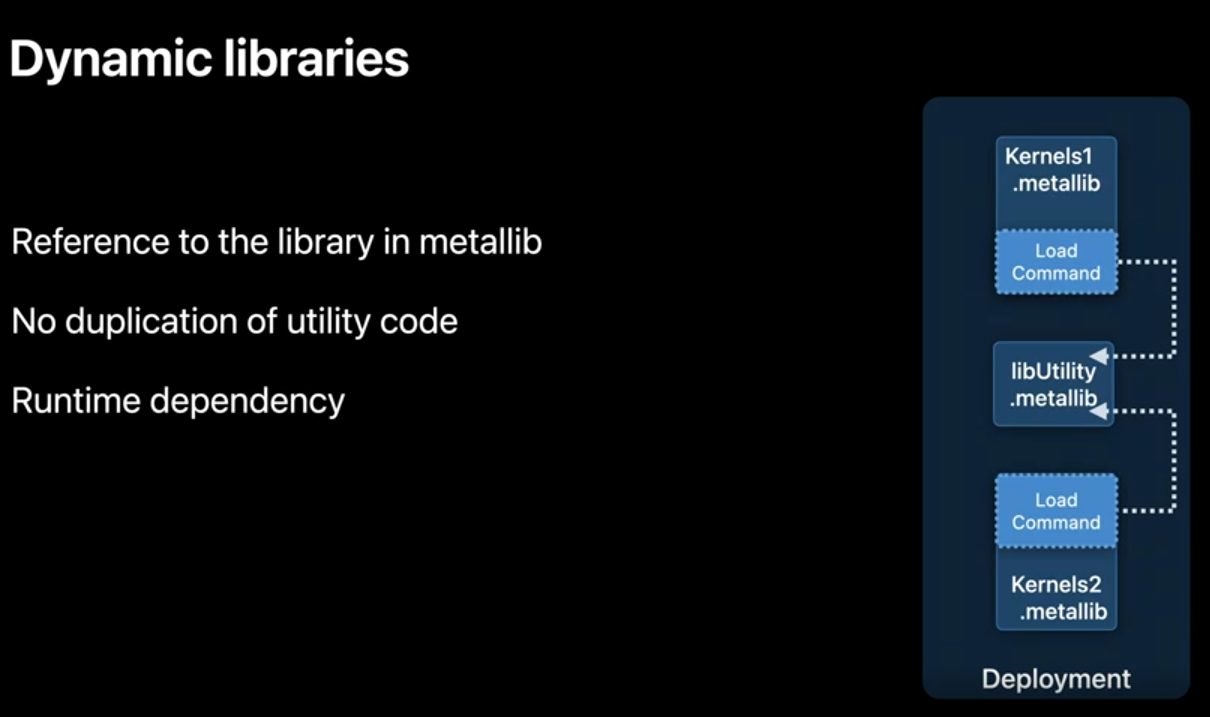

And this year we're introducing a solution to this problem. The Metal Dynamic Library. The MTLDynamicLibrary enables you to dynamically link, load, and share utility functions in the form of machine code. This code is reusable between multiple compute pipelines eliminating duplicate compilation and the storing of shared subroutines. In addition much like the MTLBinaryArchive, the MTLDynamicLibrary is serializable, and shippable as an asset in your application.

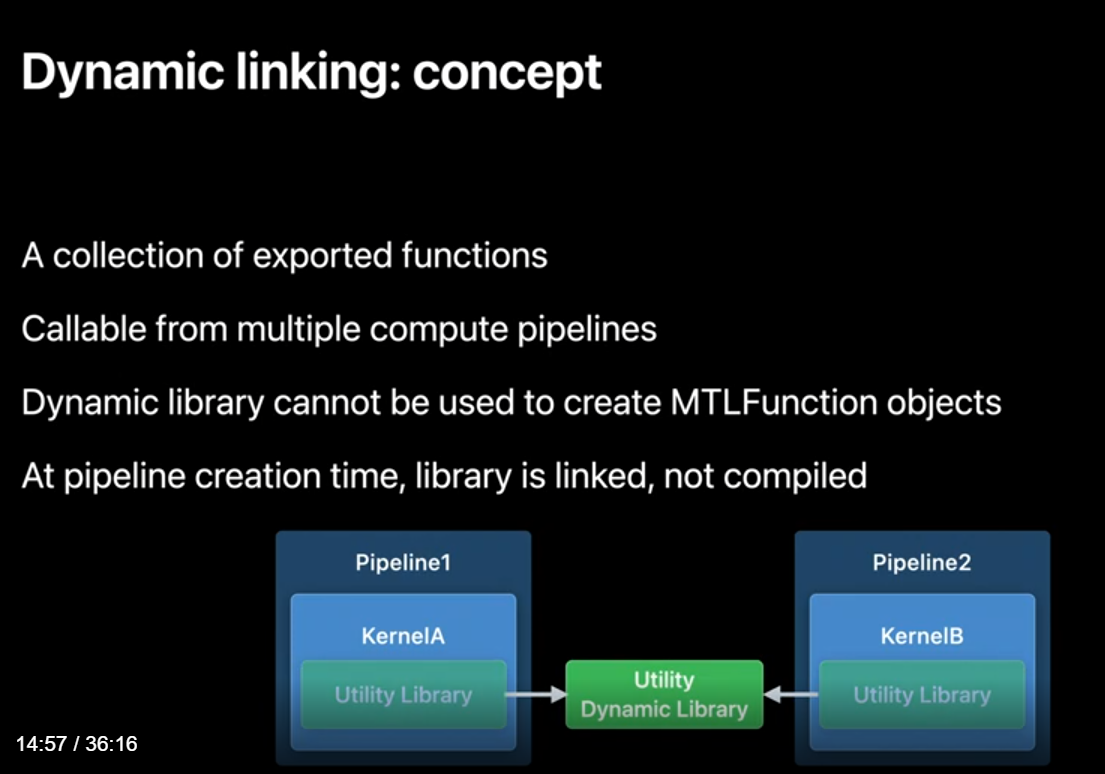

Before we dive into the API, let's talk about what a MTLDynamicLibrary is. A MTLDynamicLibrary is a collection of exported functions that can be called from multiple compute pipelines. Later we'll discuss which functions in your dylib are exported and how to manage them. Unlike an executable MTLLibrary, dynamic libraries can not be used to create MTLFunctions. However, standard MTLLibraries can import functions that are implemented in a dynamic library. At pipeline creation time, the dynamic library is linked to resolve any imported functions much like a dynamic library is used in a typical application.

So why might you want to use dynamic libraries? If your application can be structured into or relies on a shared utility code base, dynamic libraries are for you. Using dynamic libraries in your app prevents recompiling and duplicating machine code across pipeline states. If you're interested in developing Metal middleware, dynamic libraries provide you the ability to ship a utility library to your users. Unlike before, where you would have to ship sources to developers or compile their code with a static Metal library, a dynamic library can be provided and updated without requiring users to rebuild their own MetalLib files. Finally, dynamic libraries give you the power to expose hooks for your users to create custom kernels. The Metal API exposes the ability to change which libraries are loaded at pipeline creation time, allowing you to inject user-defined behavior into your shaders without creating the MTLLibrary and MTLFunctions containing your entry points. To determine if Metal dynamic libraries are supported for your GPU, check the feature query 'supportsDynamicLibraries'.
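The feature query mentioned above is a Boolean property on the device. A minimal sketch, with a hypothetical wrapper name that also treats older OS releases as unsupported:

```swift
import Metal

/// Reports whether `device` can use Metal dynamic libraries.
/// Returns false on OS versions that predate the feature query.
func dynamicLibrariesSupported(on device: MTLDevice) -> Bool {
    if #available(macOS 11.0, iOS 14.0, *) {
        return device.supportsDynamicLibraries
    }
    return false
}
```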

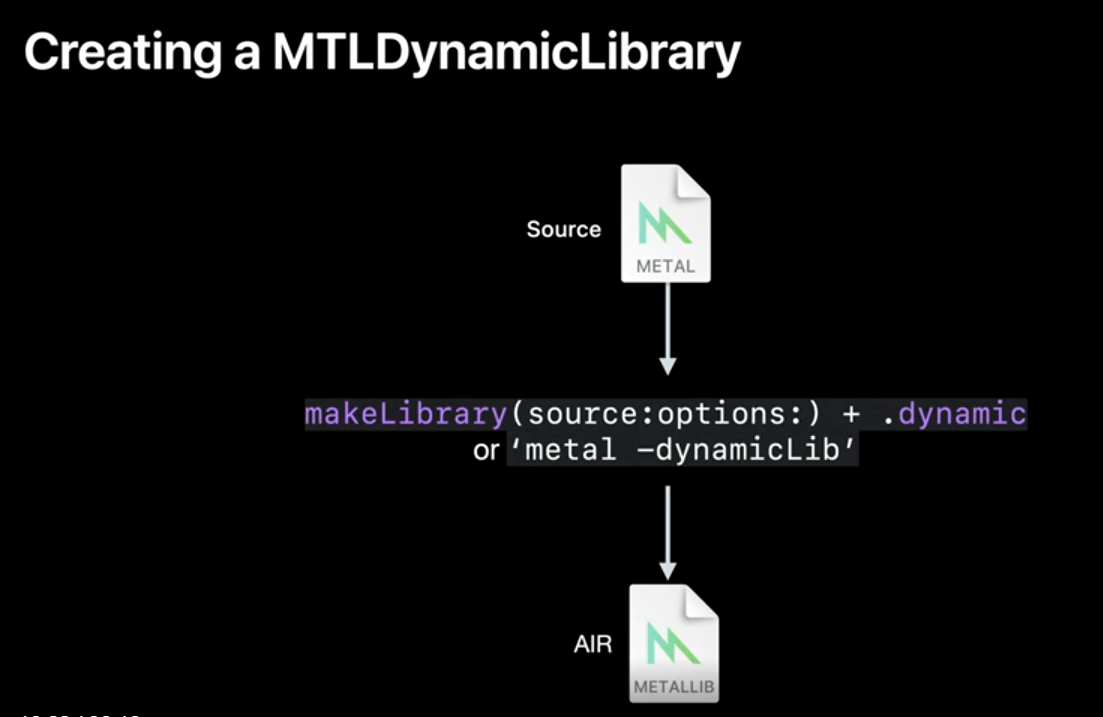

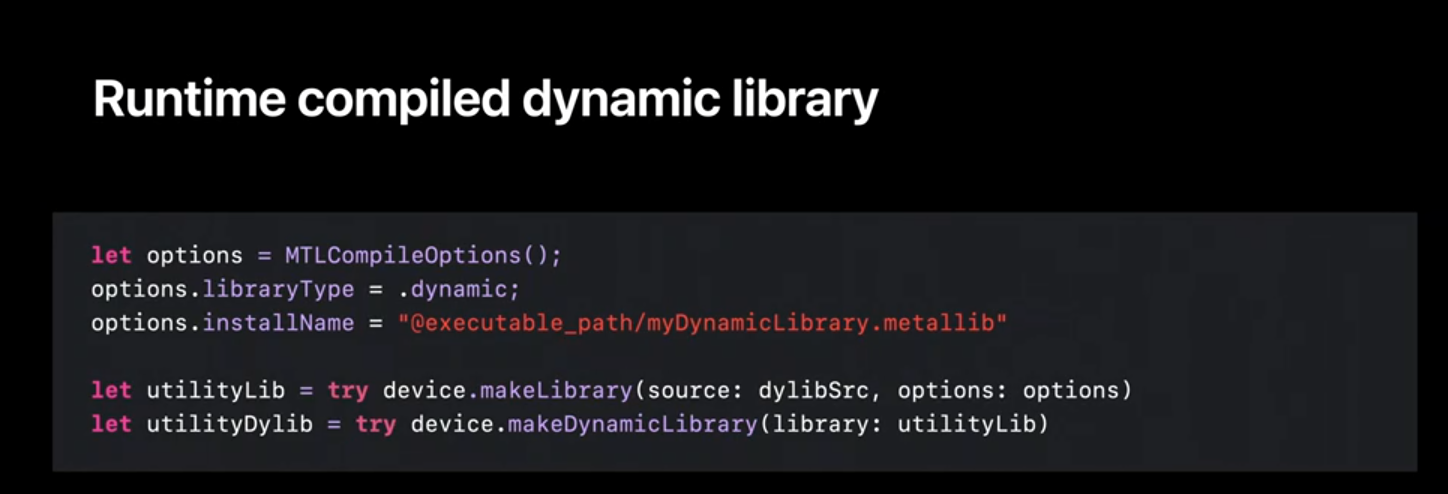

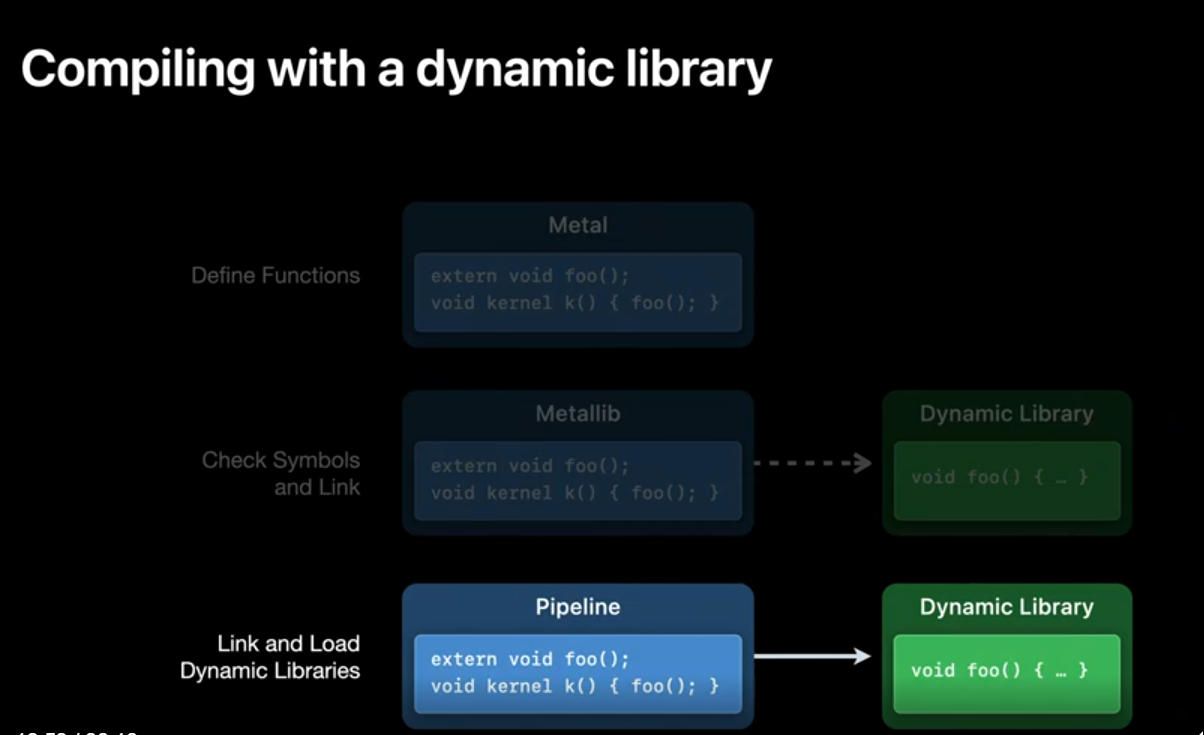

In the next few slides we'll work through an example of how to create a dynamic library, how symbols are resolved, and some more advanced linking scenarios. A standard MTLLibrary is compiled to AIR through either a makeLibrary with source call at runtime or through compiling your library with the Metal toolchain. To create a MTLDynamicLibrary we begin with a similar workflow. We start by creating a MTLLibrary. But when doing so, we specify that we'd like this library to be used as a dynamic library.

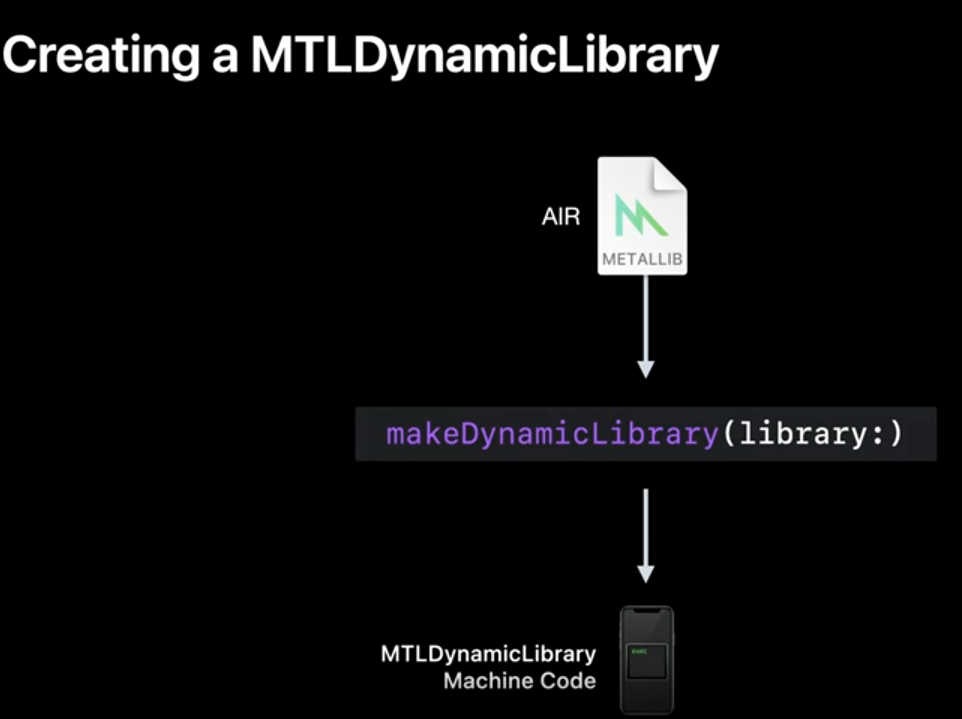

Next we call the function makeDynamicLibrary which will backend compile our Metal code to machine code. This is the only time you will need to compile the dynamic library.

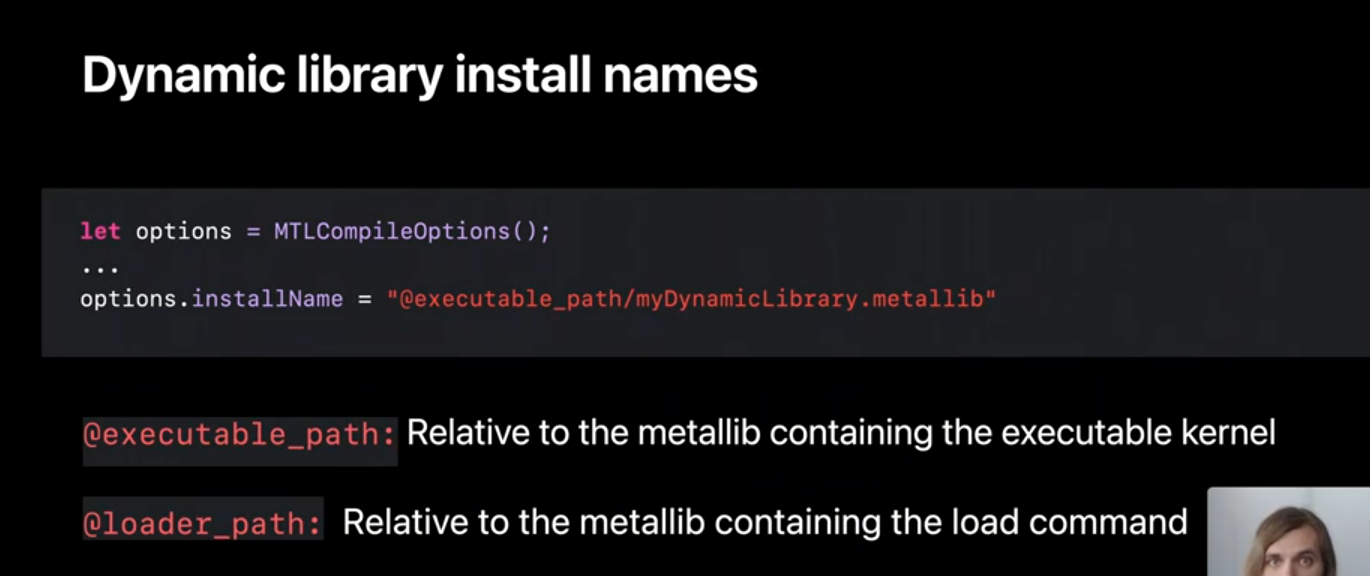

We need one more bit of information: a unique install name. At pipeline creation time, these names are used by the linker to load the dynamic library. The linker supports two relative paths: @executable_path, which refers to the MetalLib containing an executable kernel, and @loader_path, which refers to the MetalLib containing the load command. An absolute path can also be used.

With an installName and a libraryType, I'm now ready to create a dynamic library. Once I've set the compile options, I create a MTLLibrary, which will compile my library from source to AIR. Then I call the API method makeDynamicLibrary on the MTLDevice, which will compile my dynamic library into machine code.
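Those two steps can be sketched as below. The utility function `tint`, its source string, and the install name `libUtility.metallib` are illustrative assumptions; the compile option properties and `makeDynamicLibrary(library:)` are the API calls the text refers to.

```swift
import Metal

// Hypothetical utility code: no kernel entry points, only a helper function.
let utilitySource = """
#include <metal_stdlib>
using namespace metal;
float4 tint(float4 color) {
    return color * float4(1.0, 0.5, 0.5, 1.0);
}
"""

/// Compiles the utility source to AIR as a dynamic library, then
/// back-end compiles it to machine code.
@available(macOS 11.0, iOS 14.0, *)
func makeUtilityDylib(device: MTLDevice) throws -> MTLDynamicLibrary {
    let options = MTLCompileOptions()
    options.libraryType = .dynamic
    options.installName = "@executable_path/libUtility.metallib"
    let library = try device.makeLibrary(source: utilitySource, options: options)
    return try device.makeDynamicLibrary(library: library)
}
```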

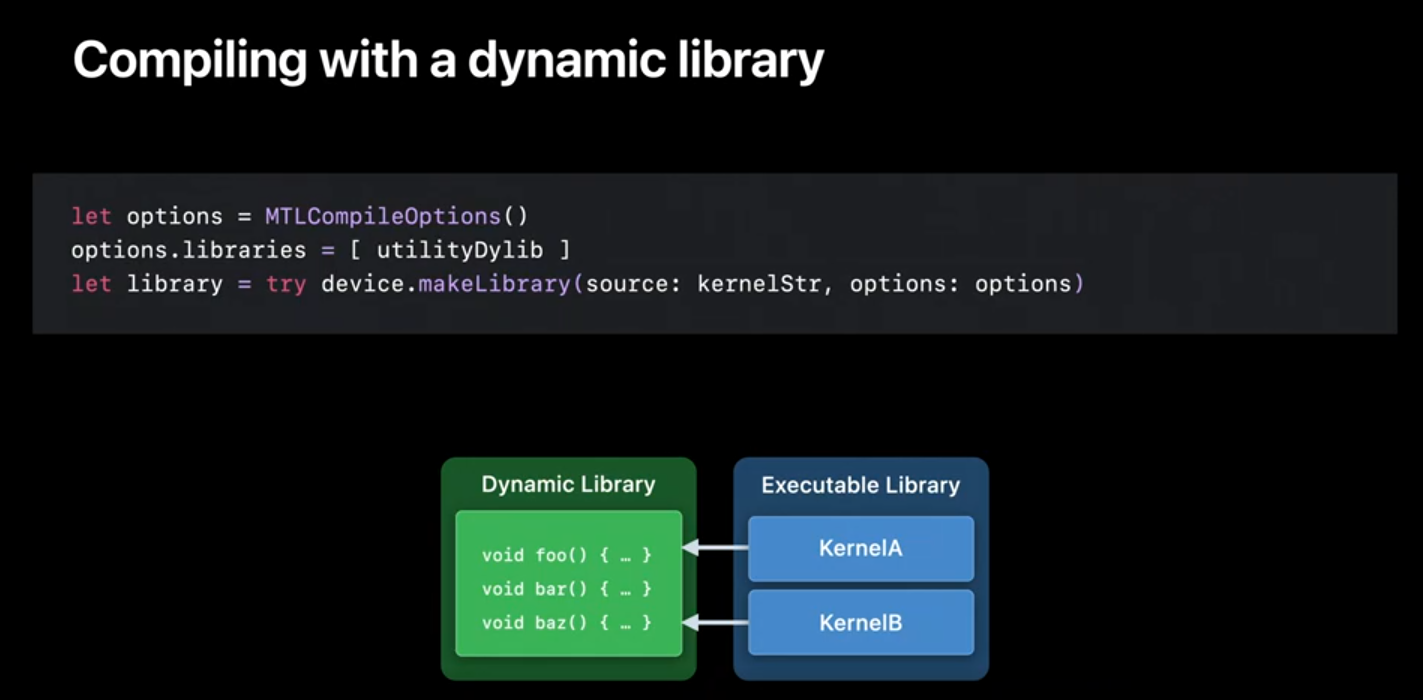

Now that we've covered how to create a dynamic library, let's take a look at how we can use it. In these next few examples, I'll be discussing how we can link dynamic libraries at runtime. These operations can also be achieved when compiling Metal libraries offline, and we'll discuss our offline workflow later in the session. To link a dynamic library when compiling a MetalLib from source, add the dynamic library to the 'libraries' property of the MTLCompileOptions before you compile your library. The specified library will be linked at pipeline creation time. However, symbol resolution will be checked at compile time to make sure at least one implementation of the function exists.

To review these steps: when creating a MetalLib from source, source files should include headers that define the functions available in your dynamic libraries. At compile time, dynamic libraries included in the 'libraries' option are searched for at least one matching function signature. If no signature is found, compilation will fail, explaining which symbols are missing. However, unlike when compiling with static libraries or header libraries, this compilation does not bind the function call to the function implementation. At pipeline creation time, libraries are linked and loaded, and a function implementation is chosen.
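A sketch of the runtime linking step, under the assumption that some dynamic library exports a function named `tint`; the kernel `apply` and the helper name are likewise made up for this example. The kernel source only declares `tint`, and the implementation is resolved later, at pipeline creation time.

```swift
import Foundation
import Metal

// Hypothetical kernel: declares `tint` but does not define it.
let kernelSource = """
#include <metal_stdlib>
using namespace metal;
float4 tint(float4 color);   // implemented in a dynamic library
kernel void apply(device float4 *pixels [[buffer(0)]],
                  uint id [[thread_position_in_grid]]) {
    pixels[id] = tint(pixels[id]);
}
"""

/// Compiles the kernel library, listing the dylibs it should link against.
/// Compilation is expected to fail if none of them exports `tint`.
@available(macOS 11.0, iOS 14.0, *)
func makeKernelLibrary(device: MTLDevice,
                       linking dylibs: [MTLDynamicLibrary]) throws -> MTLLibrary {
    let options = MTLCompileOptions()
    options.libraries = dylibs
    return try device.makeLibrary(source: kernelSource, options: options)
}
```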

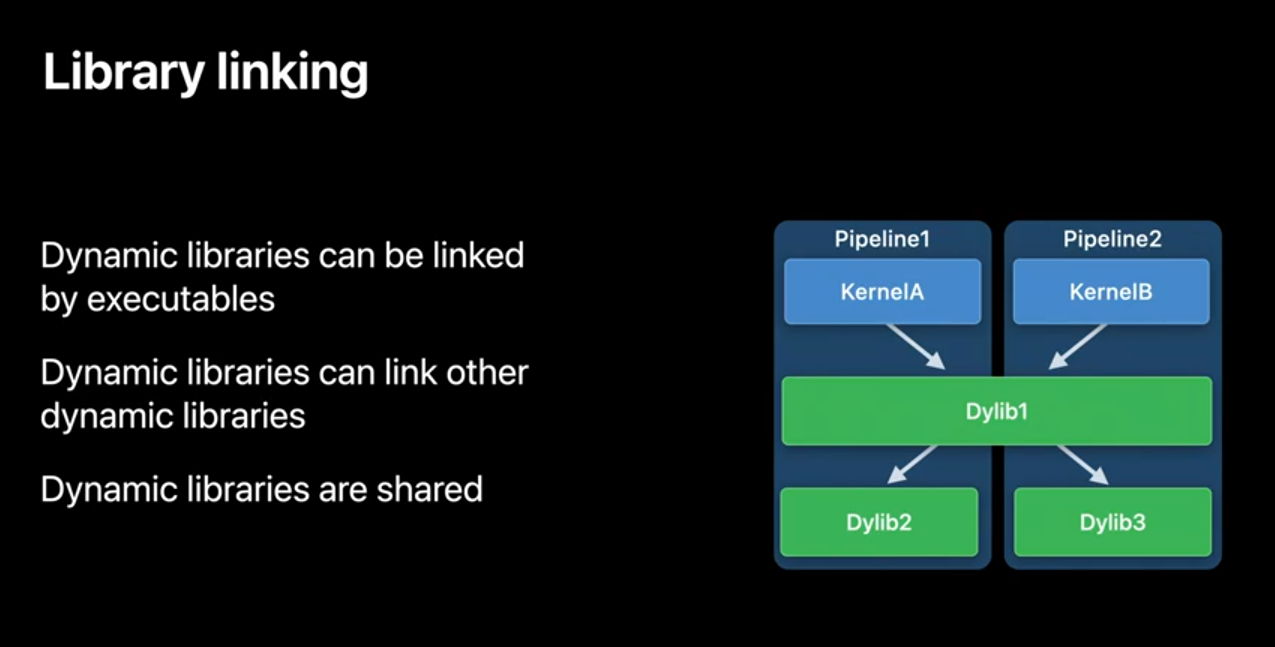

We'll go over the case where multiple dynamic libraries export the same function in just a moment. In addition to executable MTLLibraries linking dynamic libraries, dynamic libraries can also reference other dynamic libraries. If all these libraries were created from source at runtime, linking Dylib2 and Dylib3 to Dylib1 is as simple as setting the MTLCompileOptions 'libraries' property on creation. And to reiterate one more time, dylibs are shared between kernels. Although multiple kernels link the same dylib, only one instance of the dylib exists in memory.

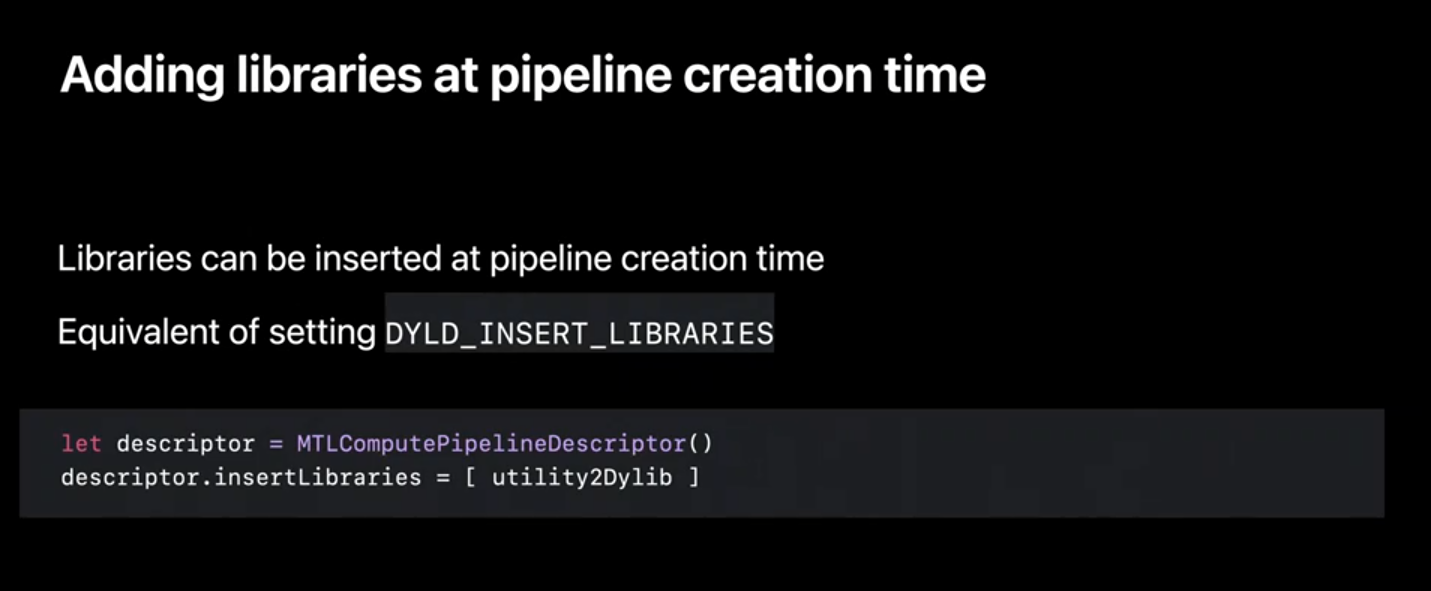

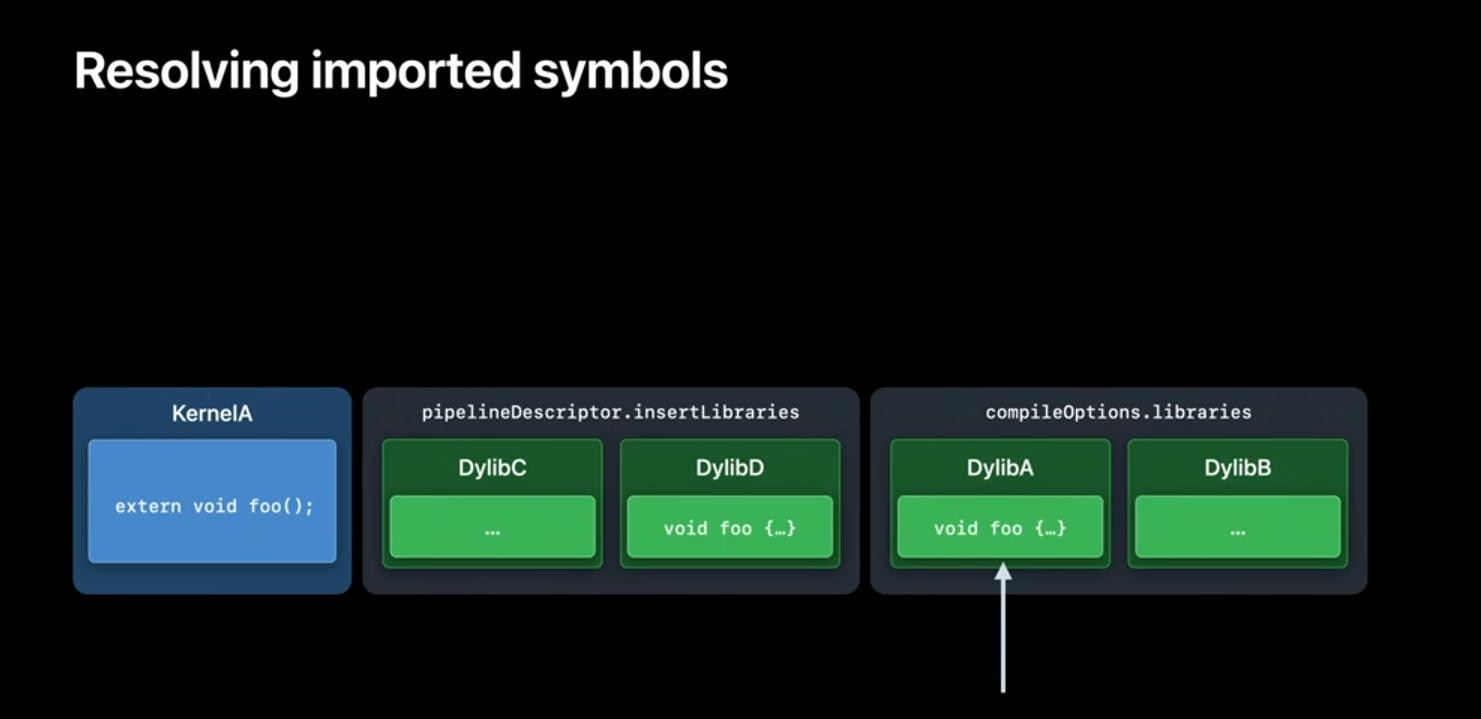

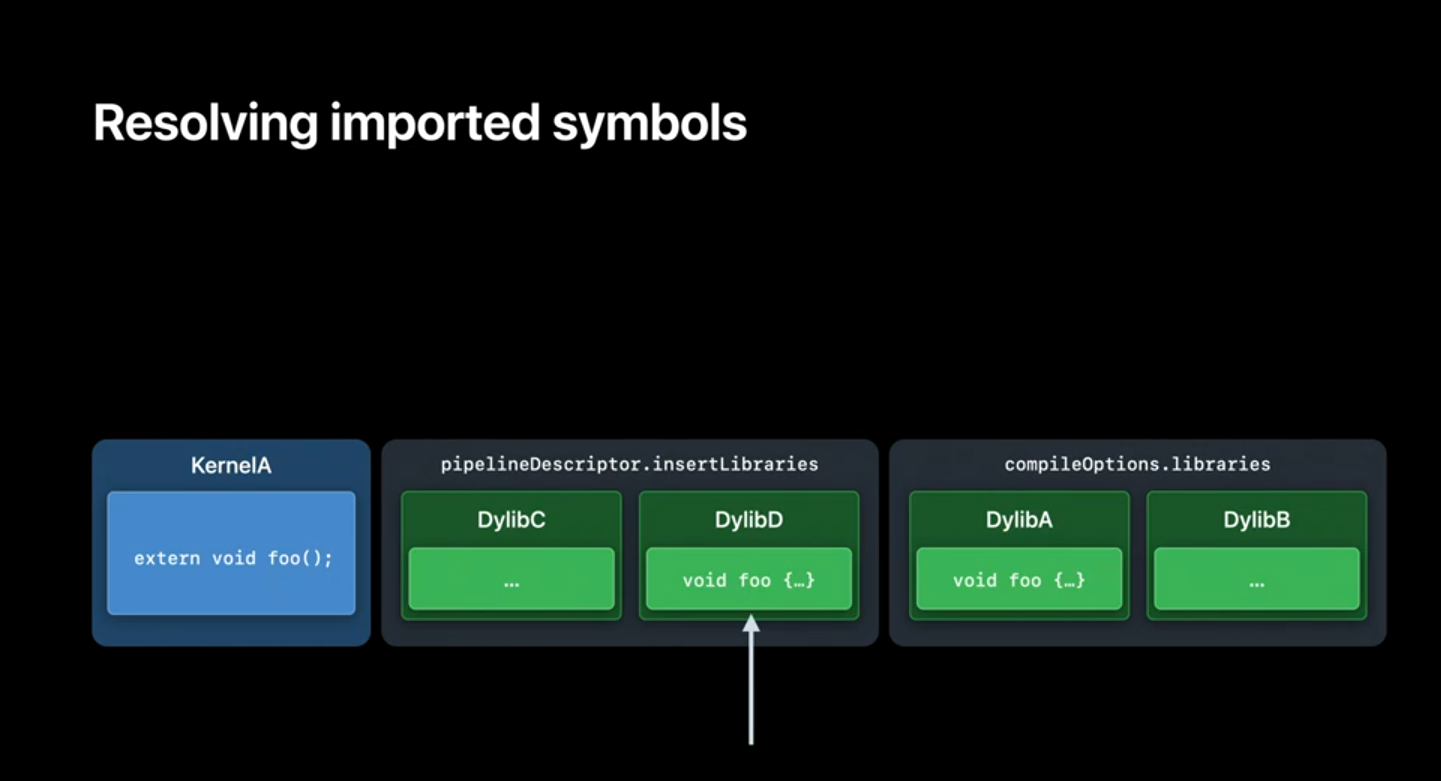

Because linking is deferred to pipeline creation time, we can replace functions, or even full libraries with new implementations by using the insertLibraries property of the compute pipeline descriptor. Setting this option is comparable to setting the DYLD_INSERT_LIBRARIES environment variable. At pipeline creation time the linker will first search through inserted libraries to find imported symbols, before looking through the kernel's linked libraries for any remaining imported symbols.

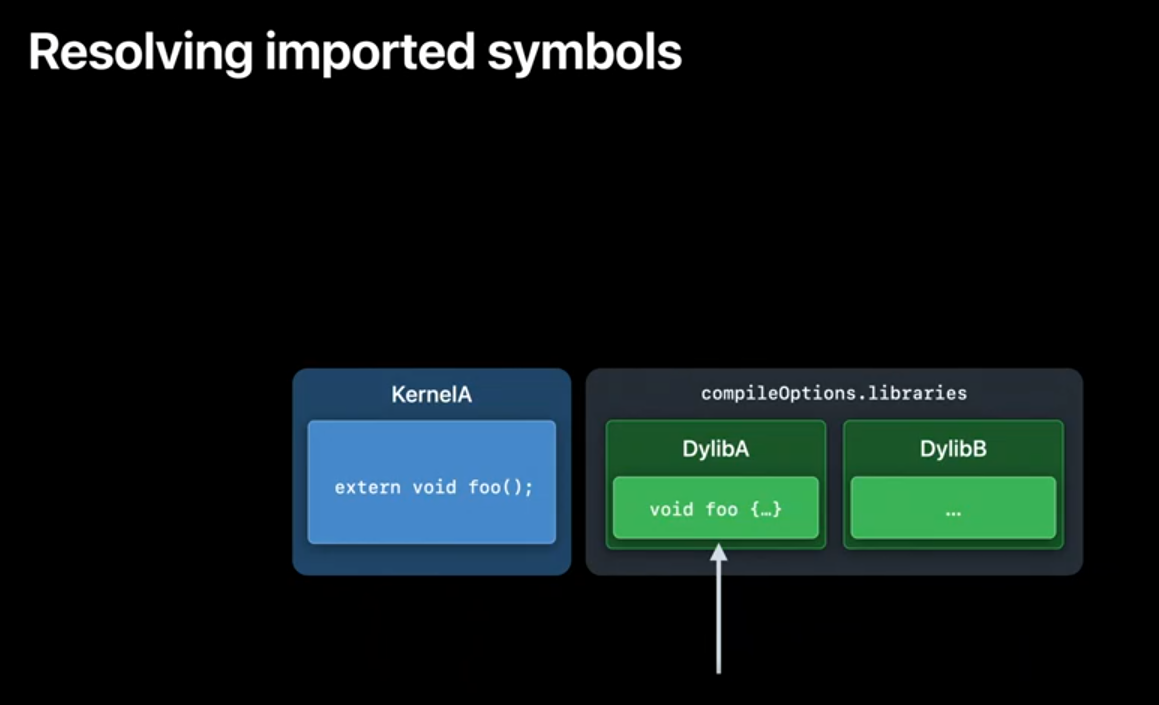

In this example, dylib A exports the function foo(). When we create the compute pipeline state, foo() will be linked to the implementation in dylib A.

When we use insertLibraries both dylib A and dylib D export the function foo.

When we create the pipeline state, we walk down the list of imported libraries to resolve the symbol, and instead of linking the implementation of foo() in dylib A, we will instead link from foo() in dylib D.
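The override described in this example can be sketched as follows, with a hypothetical helper name. Note that `insertLibraries` is the property as introduced in this session; newer SDKs may flag it as deprecated in favor of a replacement property, so treat this as a sketch of the 2020 API.

```swift
import Metal

/// Builds a compute pipeline whose imported symbols are resolved from
/// `overrides` first, then from the libraries the kernel was linked against.
@available(macOS 11.0, iOS 14.0, *)
func makePipeline(device: MTLDevice,
                  kernel: MTLFunction,
                  inserting overrides: [MTLDynamicLibrary]) throws -> MTLComputePipelineState {
    let descriptor = MTLComputePipelineDescriptor()
    descriptor.computeFunction = kernel
    // Comparable to DYLD_INSERT_LIBRARIES: searched before linked libraries.
    descriptor.insertLibraries = overrides
    return try device.makeComputePipelineState(descriptor: descriptor,
                                               options: [],
                                               reflection: nil)
}
```

In terms of the example, passing dylib D in `overrides` makes foo() resolve to D's implementation even though the kernel was compiled against dylib A.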

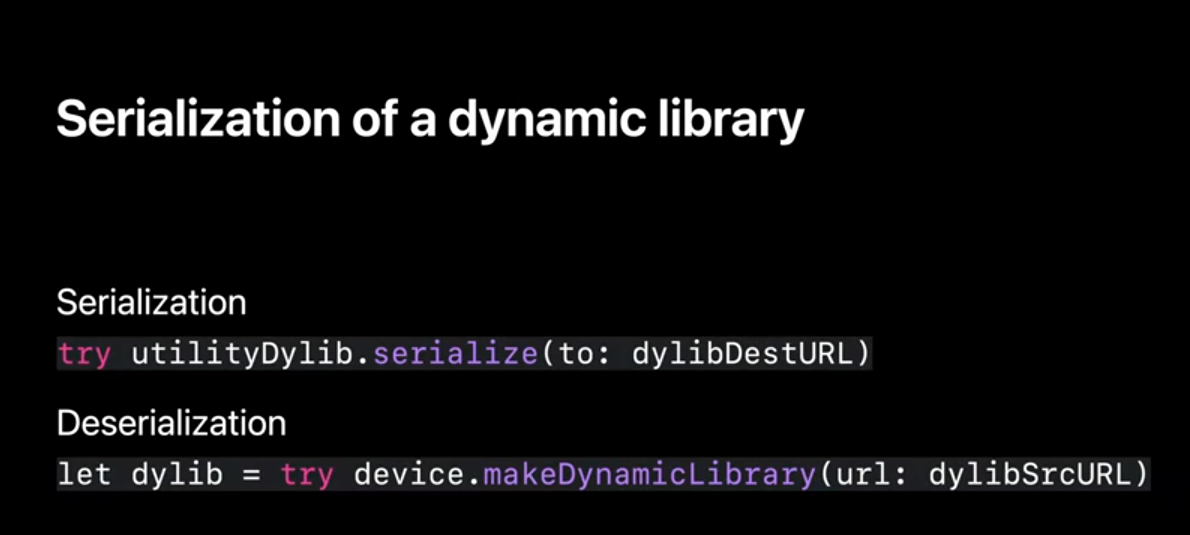

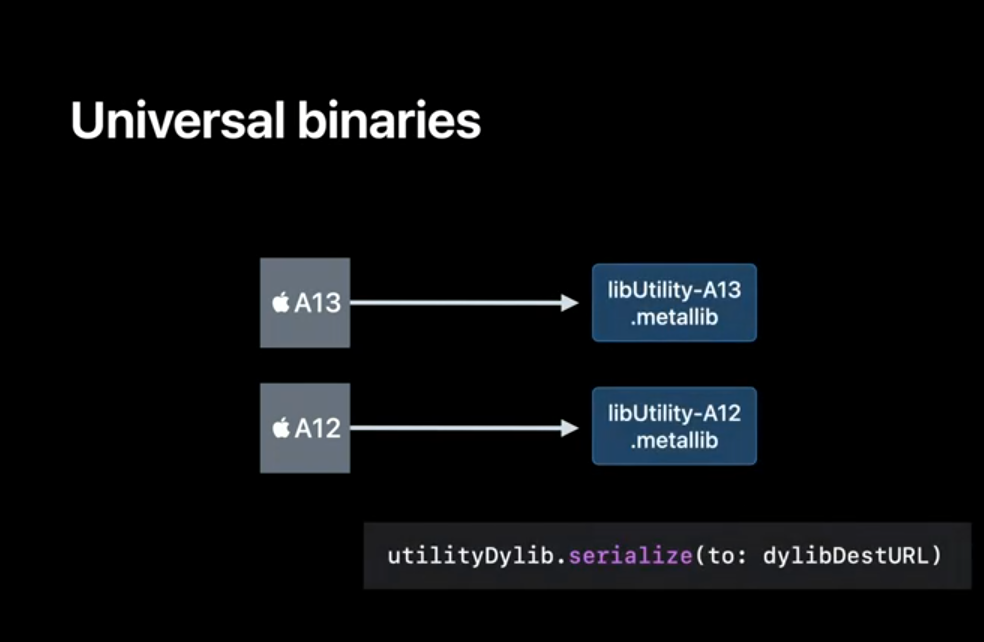

Finally, let's discuss distributing your dynamic libraries. Much like Binary Archives, compiled dynamic libraries can be serialized out to a URL. Both the precompiled binary and the generic AIR for the MTLLibrary are serialized. If you end up distributing the dynamic library as an asset in your project, the Metal framework will recompile the AIR slice into machine code if the target device cannot use the precompiled binary. This would occur when loading your dylib on a different architecture or OS. This compilation is not added to the Metal Shader Cache, so make sure to serialize your library and load it next time to save compilation time.
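One way to follow that advice is a load-or-build helper like the sketch below. The helper names, the cache URL, and the `build` closure are assumptions for illustration; `serialize(to:)` and `makeDynamicLibrary(url:)` are the Metal calls involved.

```swift
import Foundation
import Metal

/// True when a serialized library already exists at `url` (hypothetical helper).
func cachedLibraryExists(at url: URL) -> Bool {
    return FileManager.default.fileExists(atPath: url.path)
}

/// Loads a serialized dynamic library if present; otherwise builds it with
/// `build` and serializes it so the next launch can skip compilation.
@available(macOS 11.0, iOS 14.0, *)
func loadOrBuildDylib(device: MTLDevice,
                      cacheURL: URL,
                      build: () throws -> MTLDynamicLibrary) throws -> MTLDynamicLibrary {
    if cachedLibraryExists(at: cacheURL) {
        return try device.makeDynamicLibrary(url: cacheURL)
    }
    let dylib = try build()
    try dylib.serialize(to: cacheURL)
    return dylib
}
```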

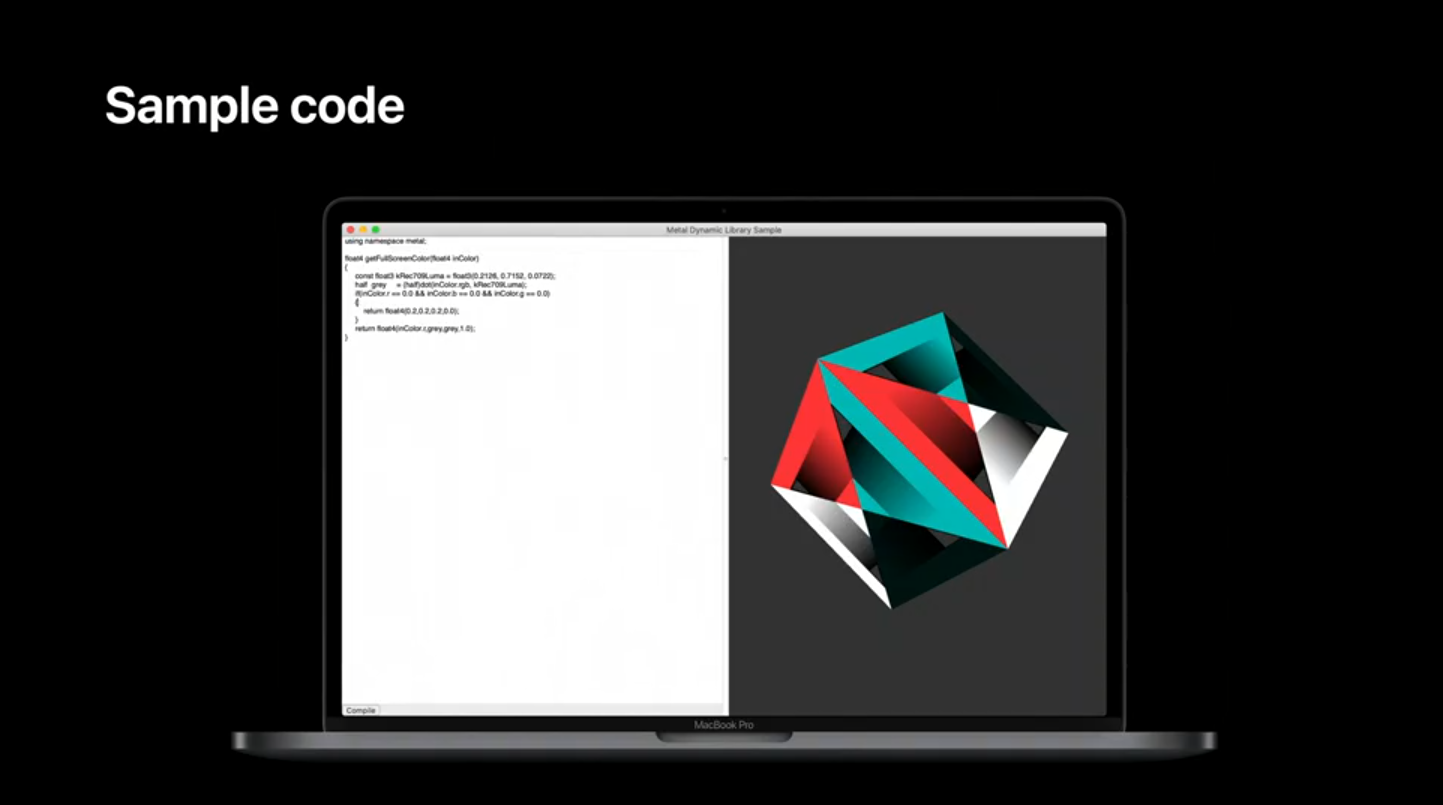

To help you adopt this API and to work through a small example, we've provided a simple example Xcode project available on developer.apple.com. The sample uses a compute shader to apply a full-screen post processing effect. The compute shader calls into a dynamic library to determine the pixel color. At runtime, we demonstrate how insertLibraries can be used to change which function is linked against. If you're interested in running this code yourself, head to developer.apple.com and download the Metal Dynamic Library sample.

We're really excited to bring you these new features. Dynamic libraries allow you to write reusable library code without paying the cost in time or memory of recompiling your utilities. And like Binary Archives, Dynamic Libraries are serializable and shippable. In the sample code we've demonstrated how you can use dynamic libraries to allow users to write their own methods without requiring them to write their own kernel entry points. This feature is supported this year in iOS and macOS. Check the feature query on your device to see if your GPU supports dynamic libraries.

Tools

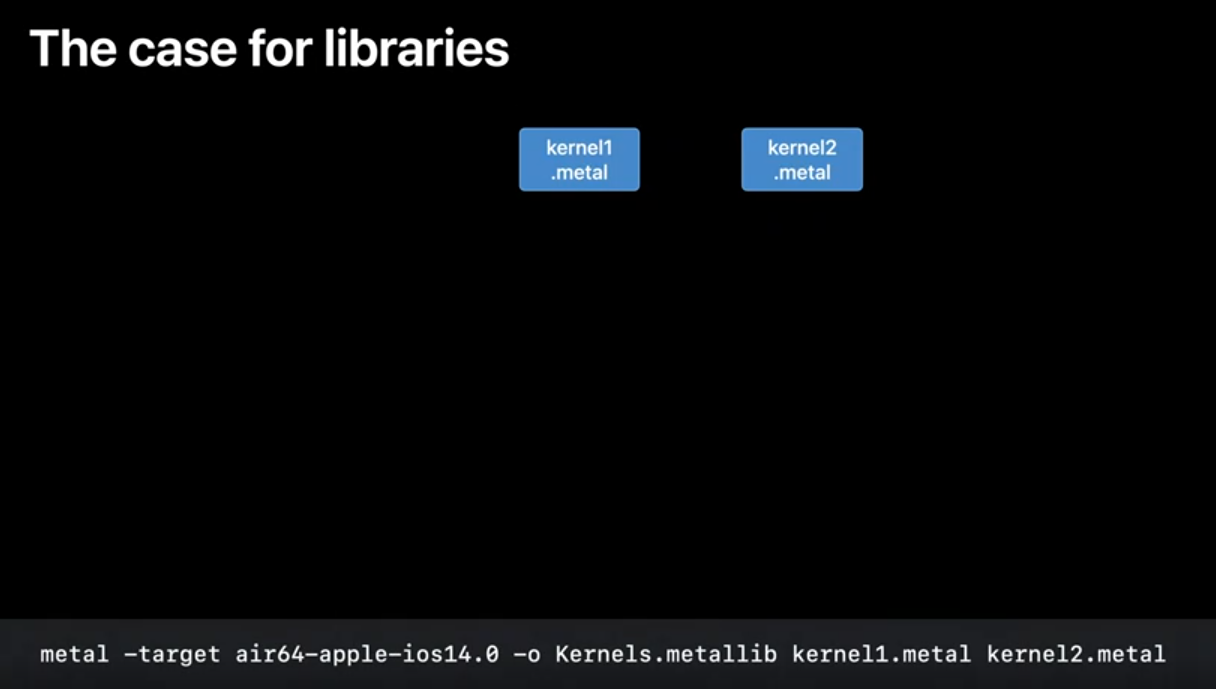

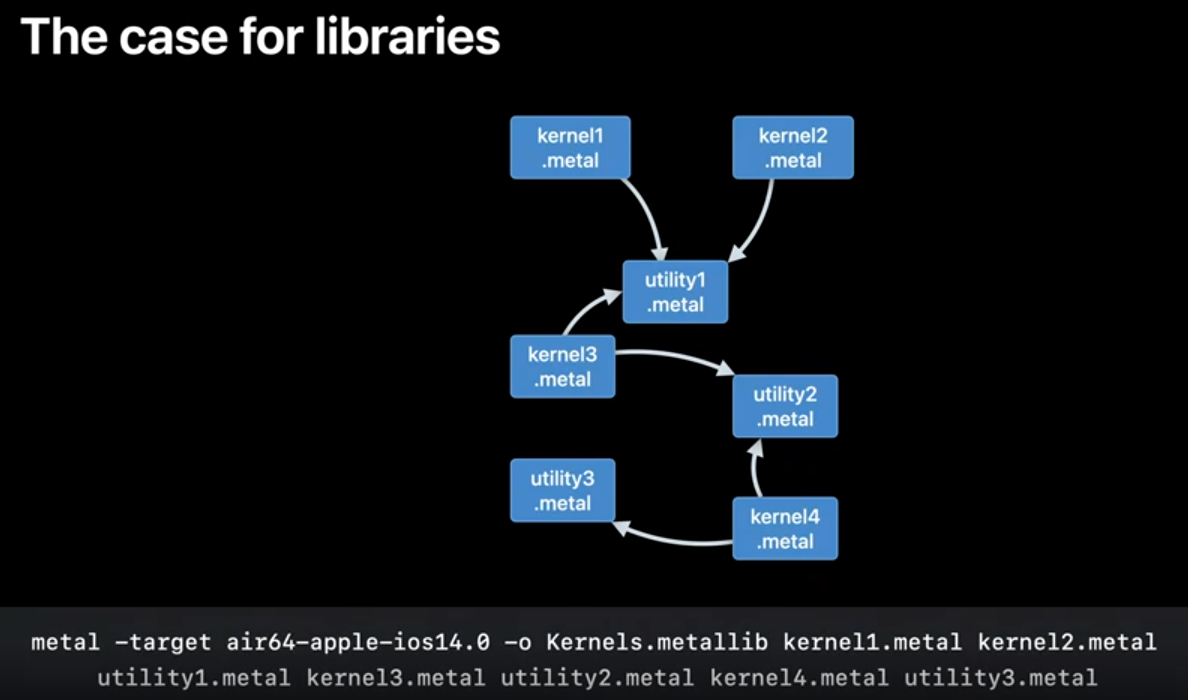

So far we've talked about some of the ways we're updating our shader model in Metal this year. In the final part of our talk, we'll be discussing additional updates to our offline toolchains. To help me cover this topic, I'm going to hand you over to my colleague Ravi.

Thank you Kyle, and hello everyone. In the previous sections we heard about how to create and use Binary Archives and Dynamic Libraries using the Metal API. I'm Ravi Ramaseshan from the Metal Frontend Compiler Team. And in this section I'm going to talk to you about how to create and manipulate these objects using the Metal Developer Tools. With a small code base you can put all your code into a couple of Metal files and build a MetalLib using a command line like the one shown below.

As your code base grows, you keep adding files to the same command line, but it becomes hard to track dependencies between all your shader sources.

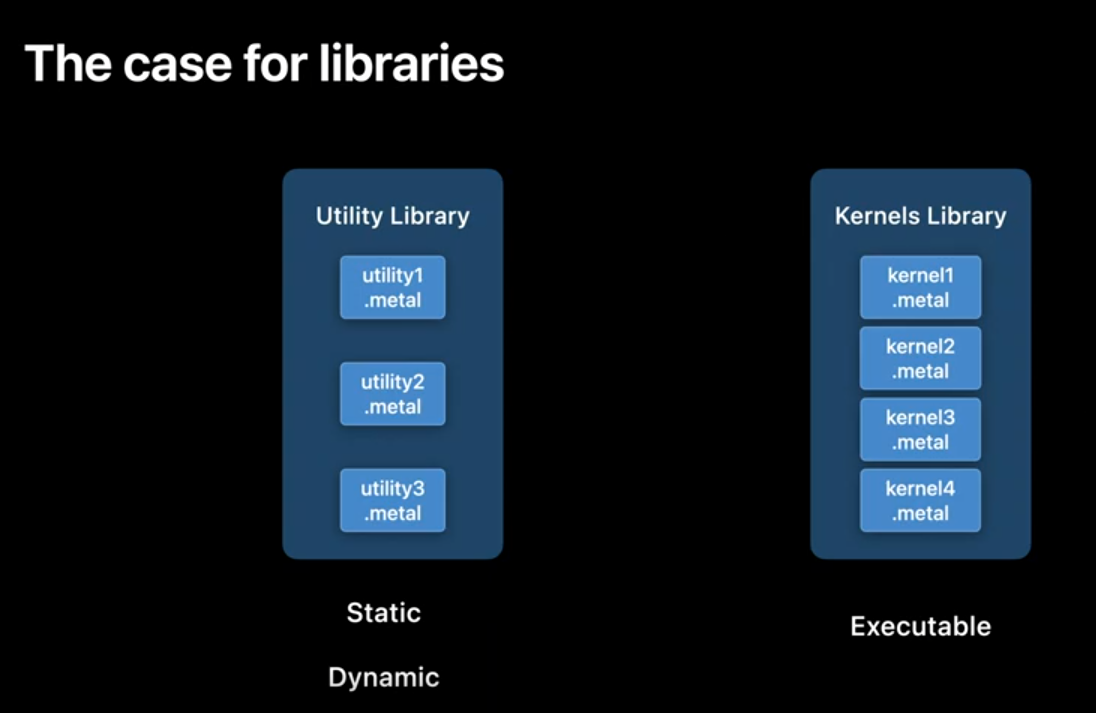

To address this we are bringing libraries to Metal! Libraries come in three flavors. The kind of MetalLibs that you've been building up until now, the ones you use to create your Metal functions, are what we call executable MetalLibs. For your non-entrypoint, or utility, code you can now create static or dynamic libraries.

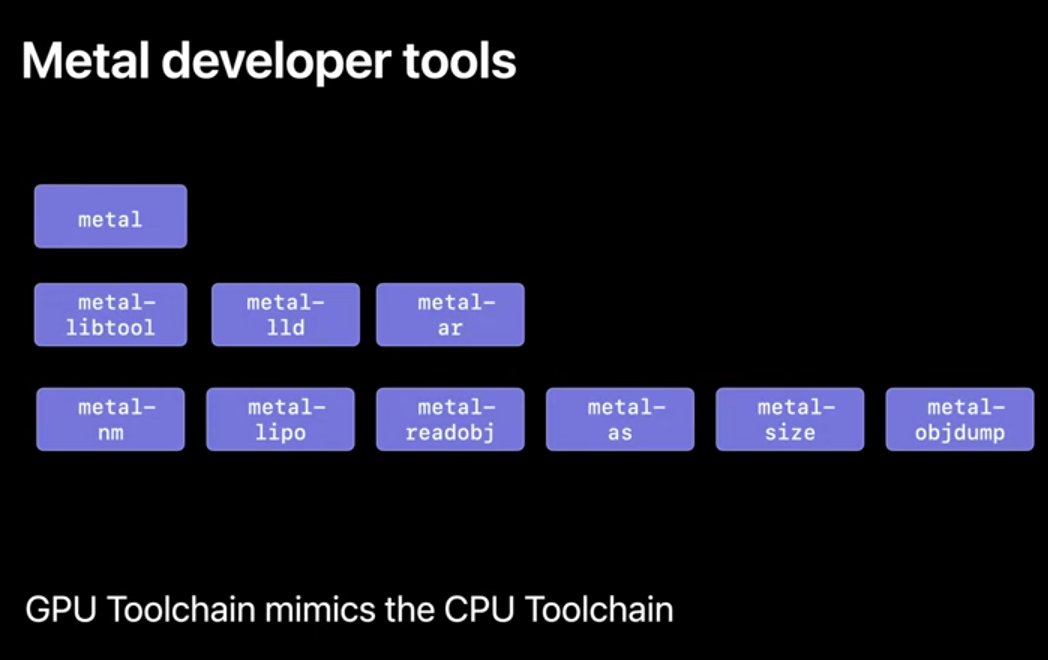

Along with Metal libraries we are also bringing more tools to Metal which mimic the CPU toolchain. All these tools together form the Metal Developer Tools, which can be found in your Xcode toolchain. We'll see how to use these tools to improve your shader compilation workflows.

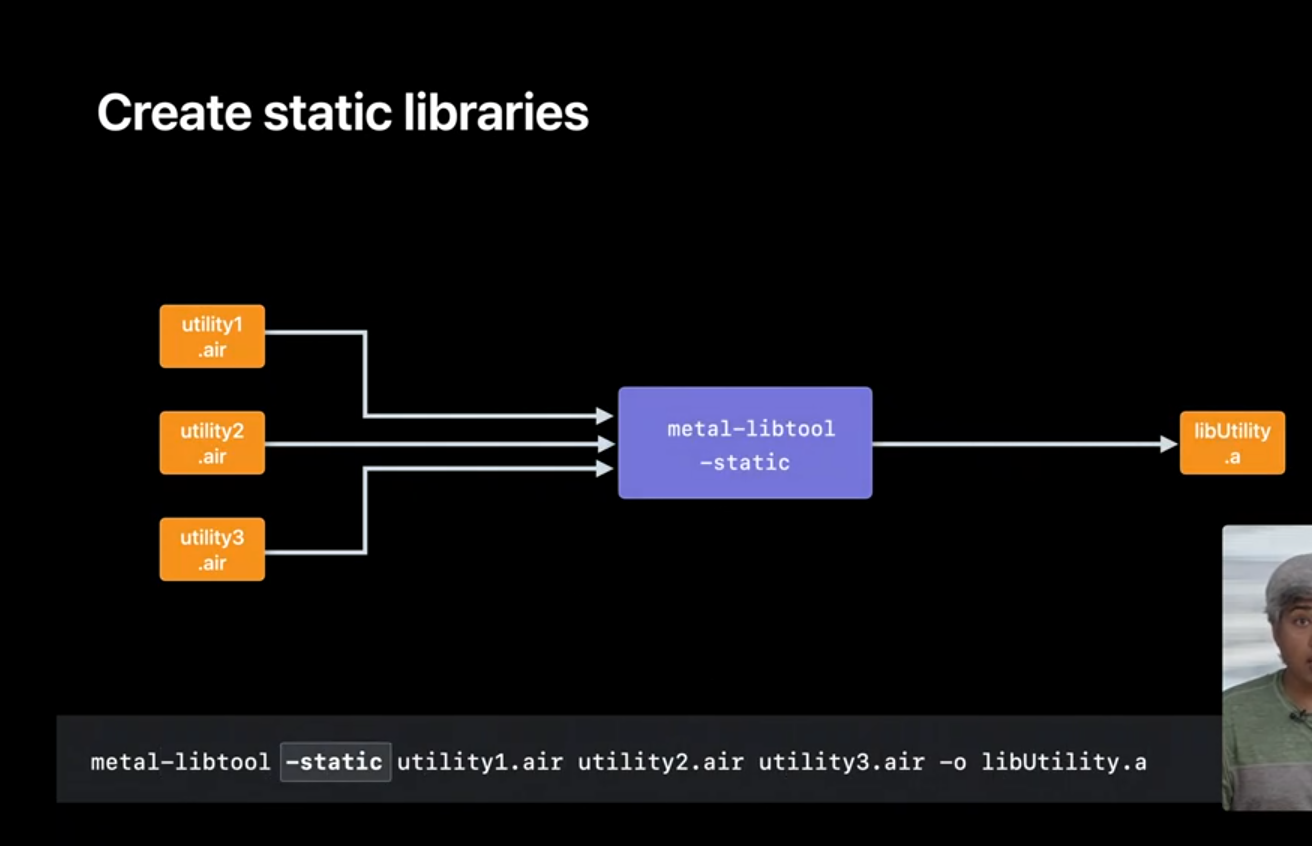

To get started let's use the Metal compiler on our Metal sources to get the corresponding AIR files using the command line below.

With that out of the way, let's use a new tool in the toolchain: 'metal-libtool', which like its CPU counterpart is used to build libraries. The 'static' option archives all the AIR files together to build a static library.

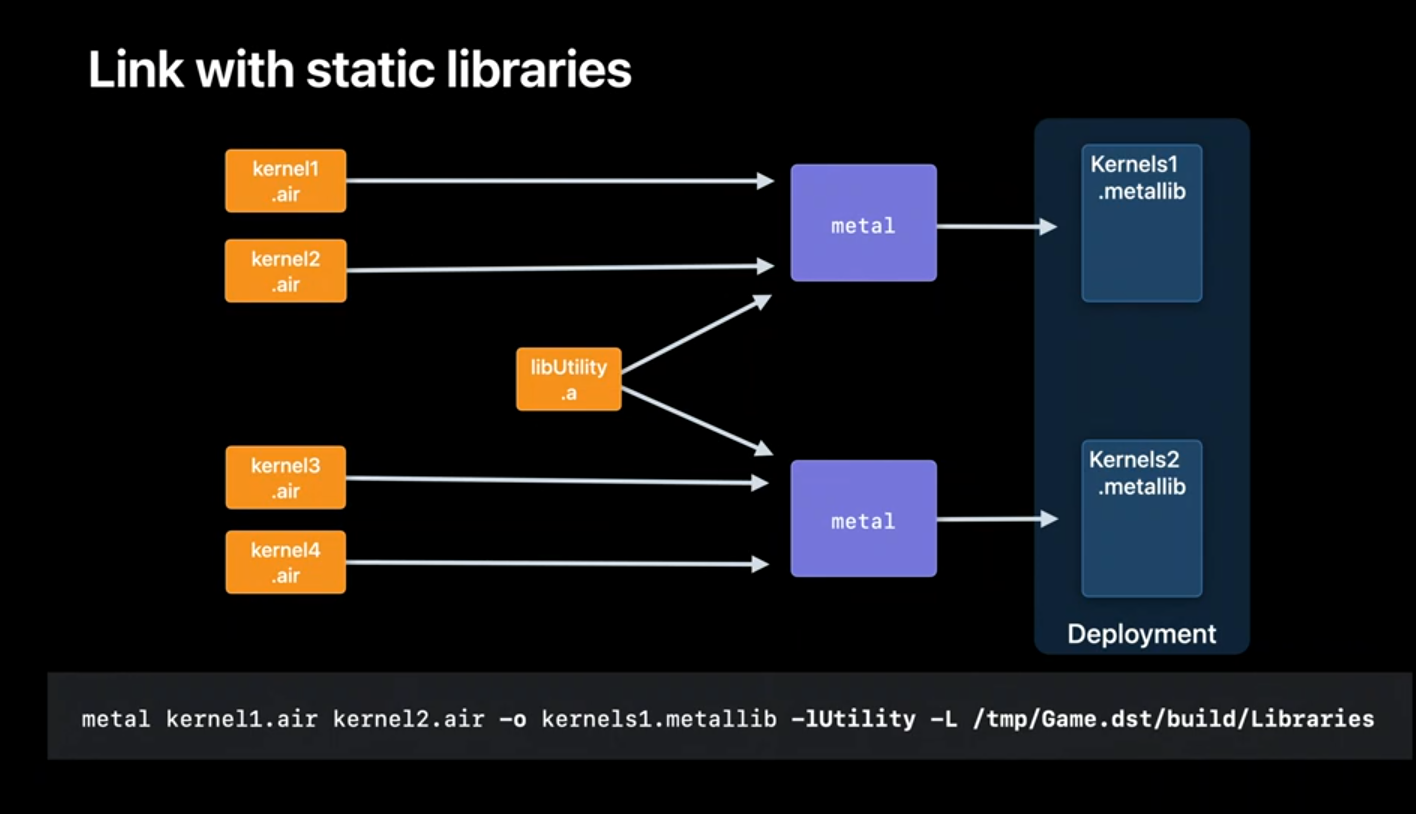

We'll then run the linker, through the Metal compiler to link the AIR files with the static library to create our executable MetalLibs. The 'lowercase-L' option, followed by a name is how you get the linker to link against your library. You can also use the 'uppercase-L' option to get the linker to search directories in addition to the default system paths.

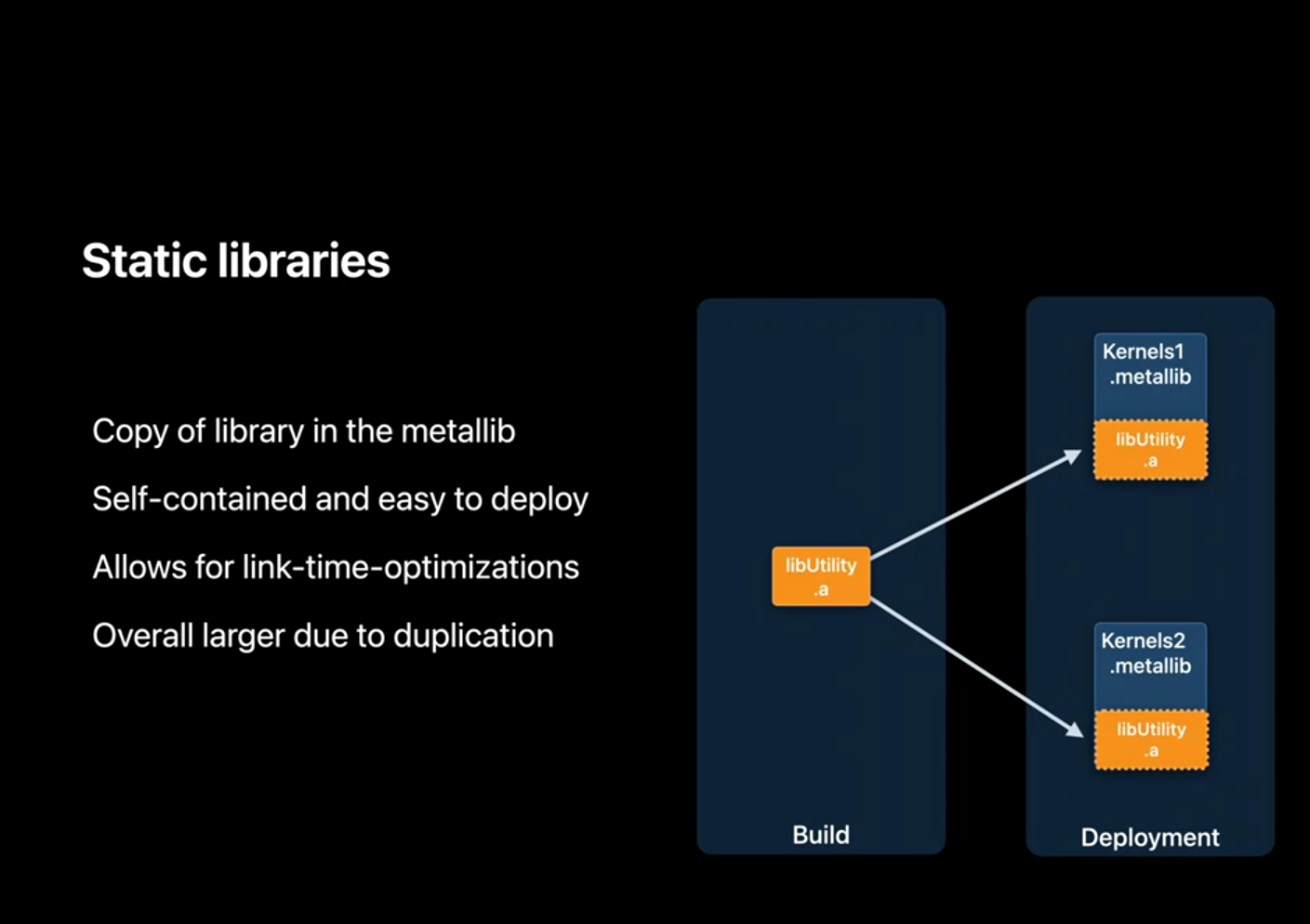

The way to think about static linking is that each of your executable MetalLibs has a copy of the static library. This has a few implications. On the bright side, your MetalLibs are self-contained and easy to deploy since they have no runtime dependencies. Also, the compiler and linker have access to the concrete implementations of your library, so they can perform link-time optimizations, resulting in potentially smaller and faster code. On the downside, you may be duplicating the library into each of your MetalLibs, resulting in a larger app bundle.

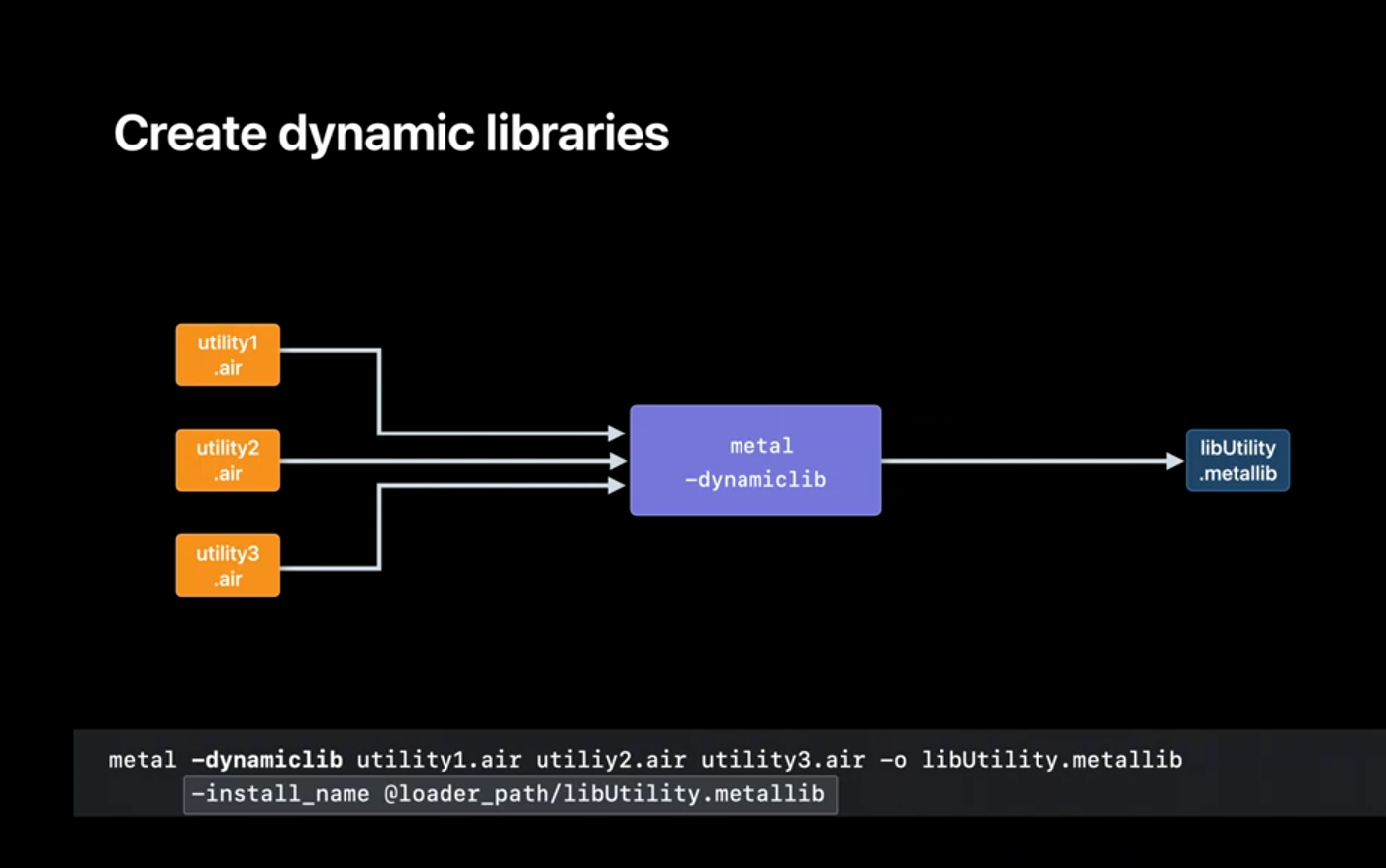

Fortunately, dynamic linking is a powerful mechanism to address this problem. To create dynamic libraries we'll invoke the linker by using the '-dynamiclib' option. The '-install_name' option to the linker is the toolchain counterpart of the Metal API you saw earlier. The install name is recorded into the MetalLib for the loader to find the library at runtime.

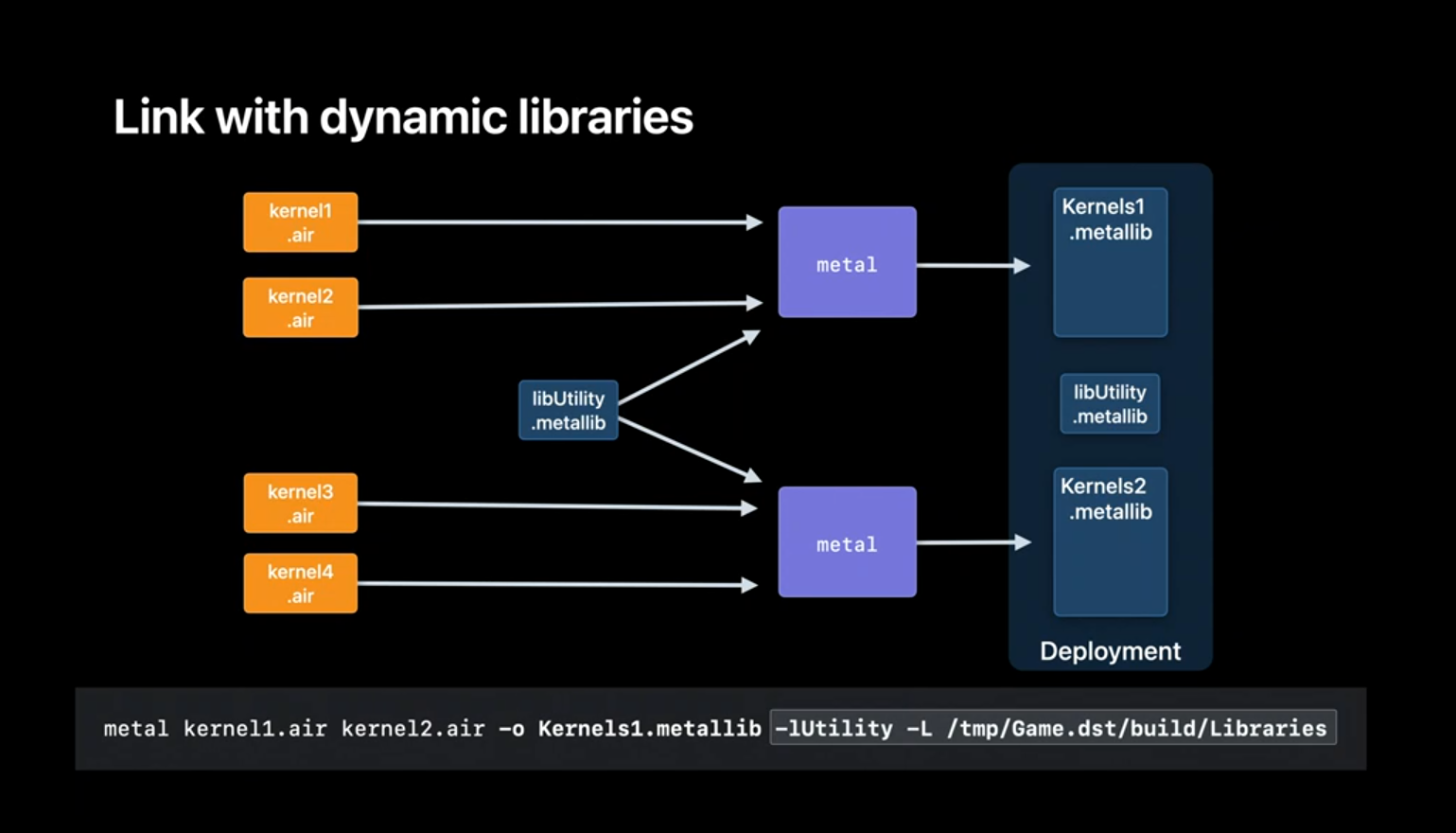

Now we'll link the utility library with our AIR files to get our executable MetalLibs. With dynamic linking, the utility library does not get copied into the executable MetalLibs but instead has to be deployed on the target system separately.

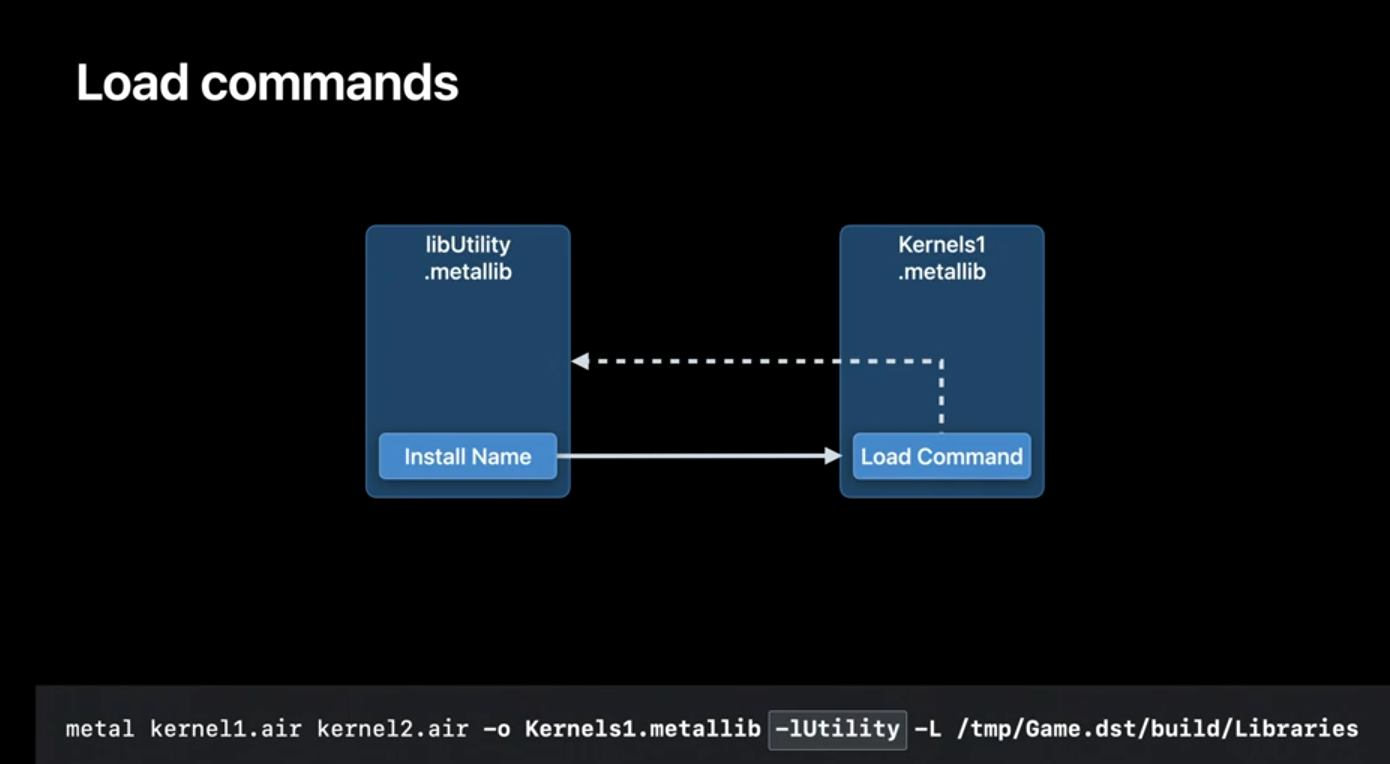

Let's see how the loader finds the dynamic library at runtime. It starts when you build the library. The linker uses the install_name of the dylib to embed a load command into the resulting MetalLib. Think of it as a reference to the dylib that this MetalLib depends on. The load command is how the loader locates and loads the dylib at runtime.

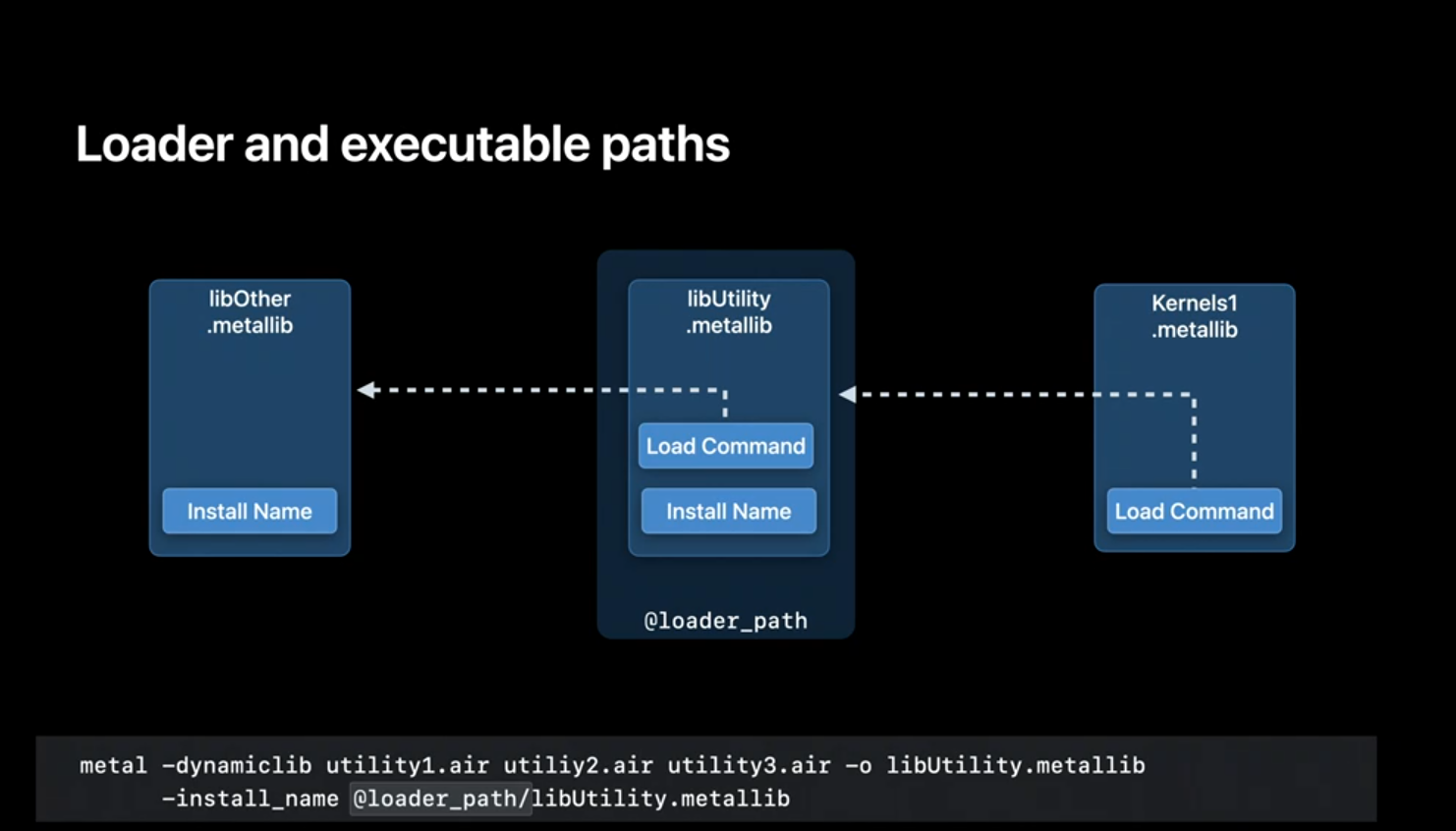

You can have multiple load commands if you link with more than one library. Finally, let's revisit the 'install_name' option we used when building our dylib and see how a couple of special names work. Let's assume that libUtility depends on another dylib and focus on how the loader resolves these special names in the load command for libUtility. At runtime the loader finds the library to be loaded using the install name but replaces @loader_path with the path of the MetalLib containing the load command.

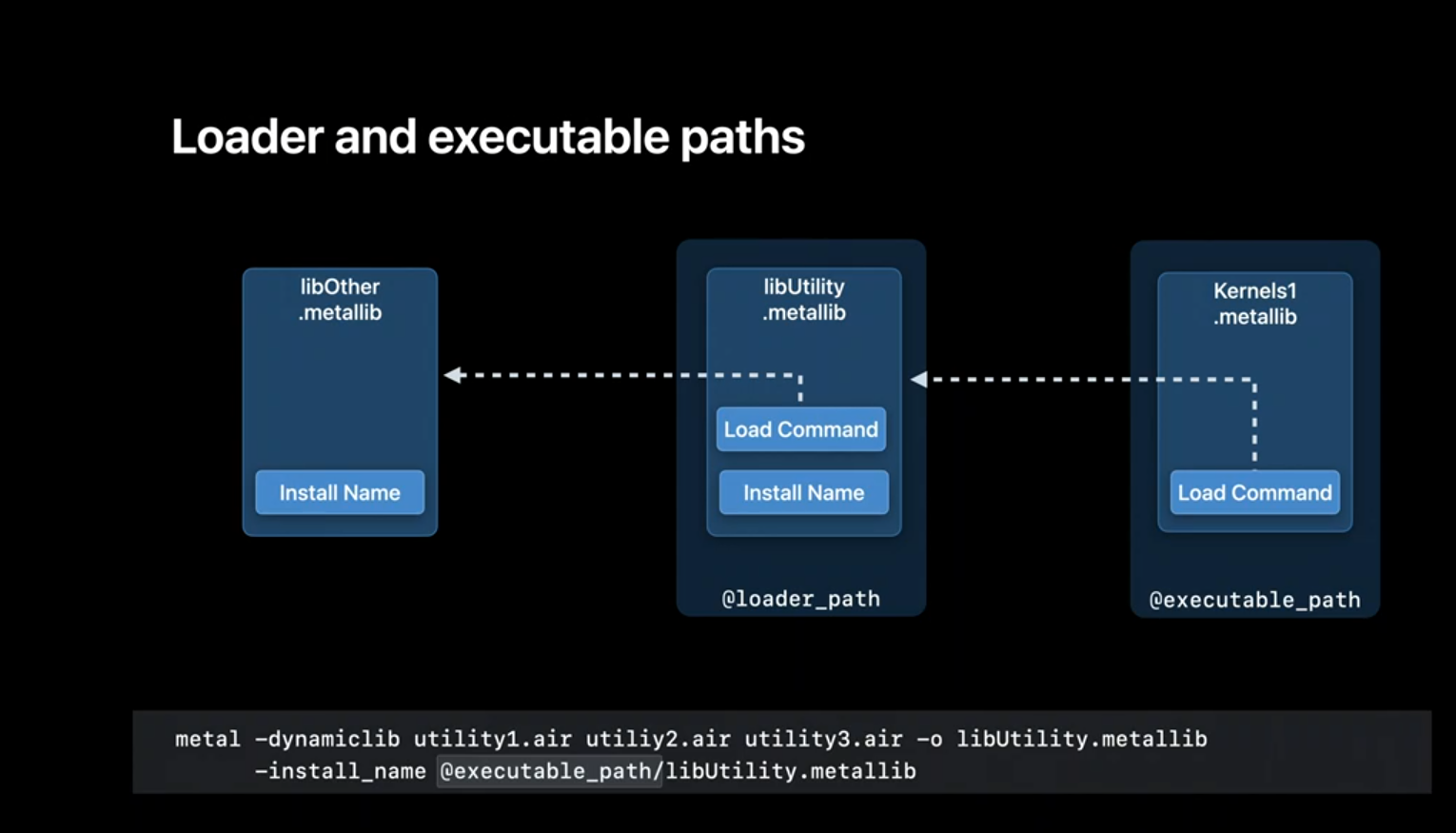

Metal also supports @executable_path which the loader resolves to the path of the executable MetalLib containing the entrypoint function.

You can probably see that for executable MetalLibs, both these special names resolve to the same location. Through load commands, each of your MetalLibs records only a reference to the dylib. Binding an implementation of the symbol to its reference is done at runtime by the loader. This too has some implications. On the positive side, using dynamic libraries solves the duplication problem we saw with static libraries. The downside is that at runtime the dylib needs to exist for your executable MetalLib to work, and the loader must be able to find it.

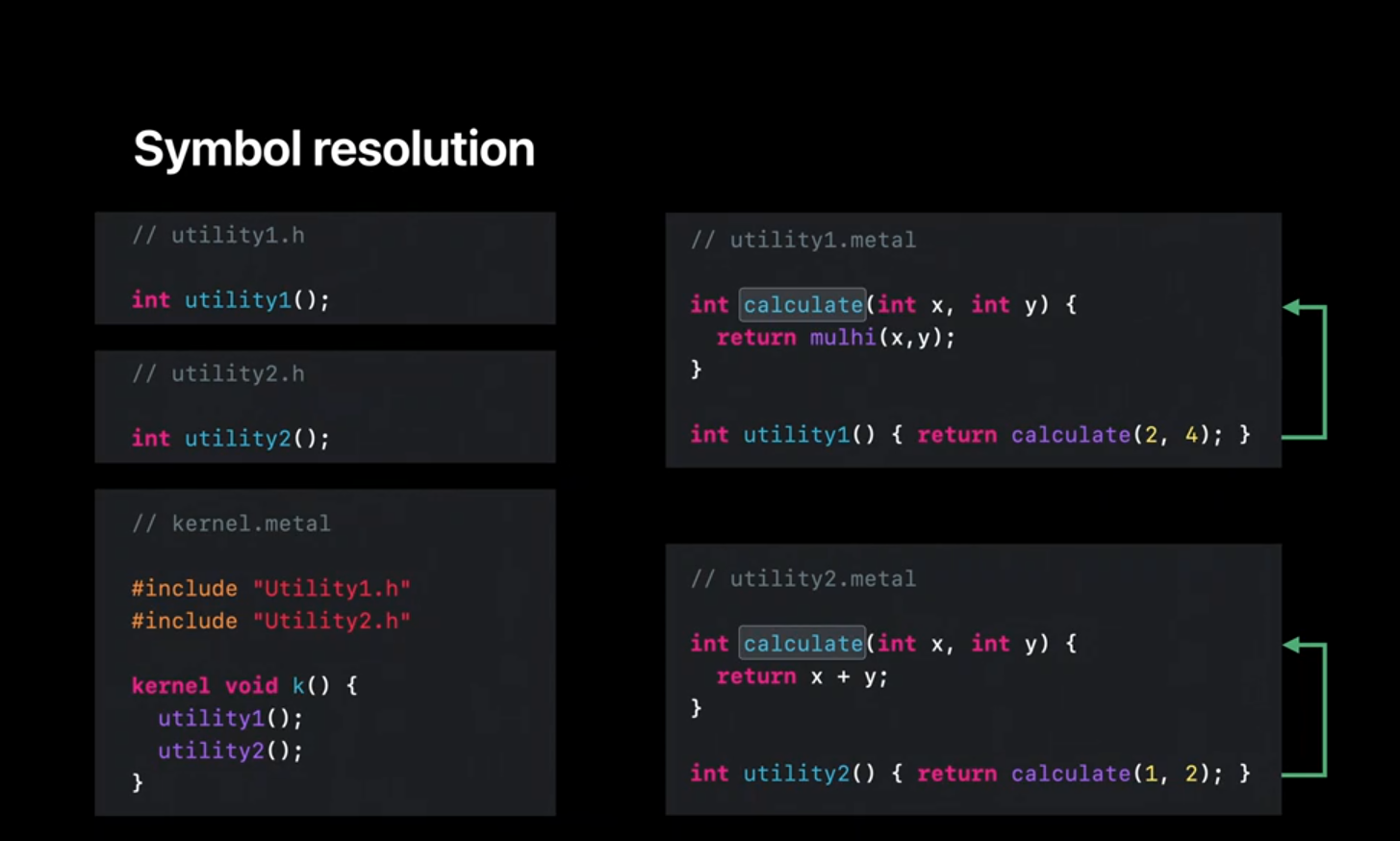

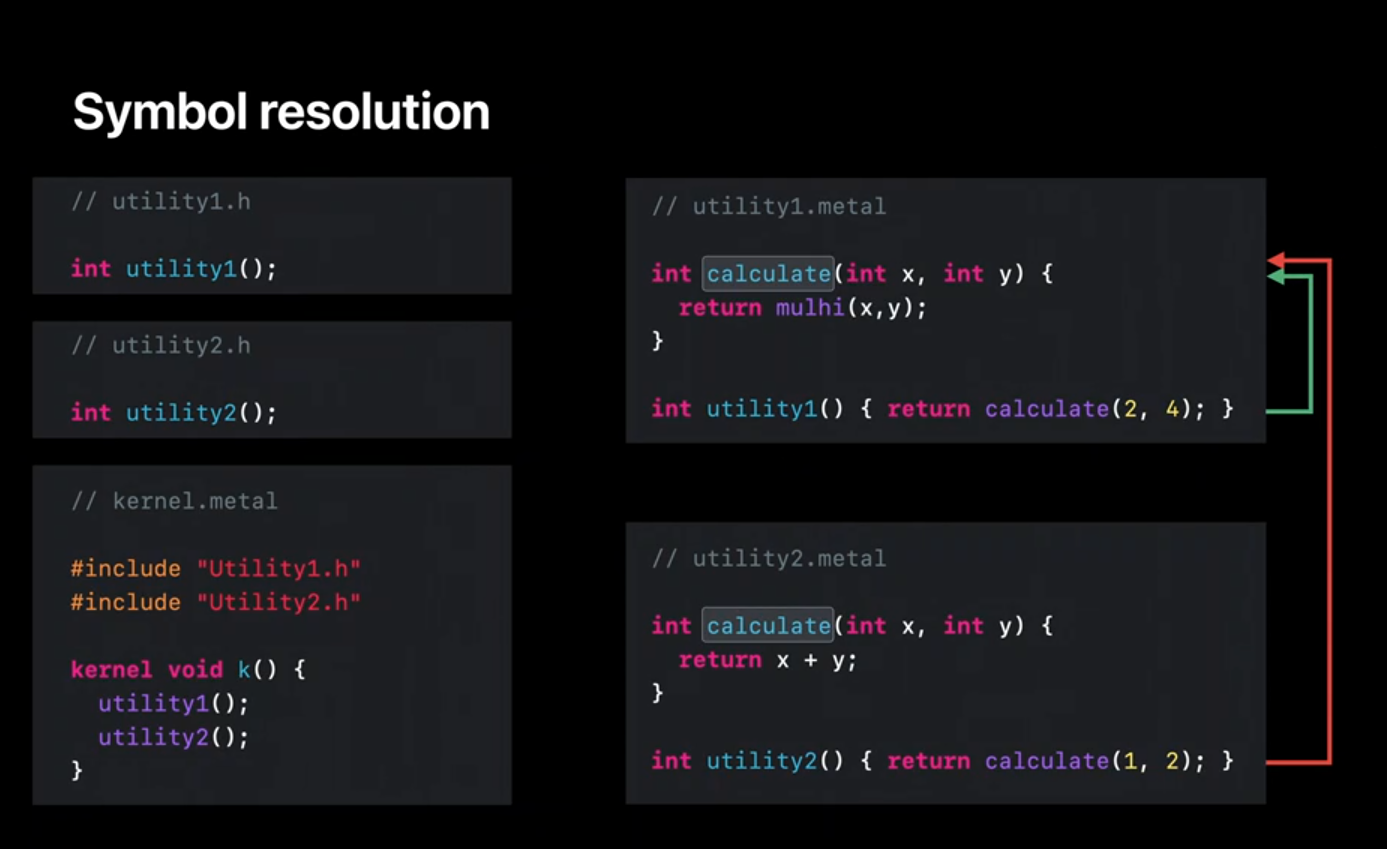

Since libraries can be written by multiple authors, you run the risk of name collisions between the libraries. In this example, the two libraries unintentionally export the same symbol, 'calculate'. The expected behavior was for each library to use its own 'calculate' function. In fact, with static linking you would have gotten an error at build time. However, with dynamic linking the loader just picks one definition and binds all references to it. Because of this, you may only observe an incorrect result at runtime, and it can be quite hard to track down.

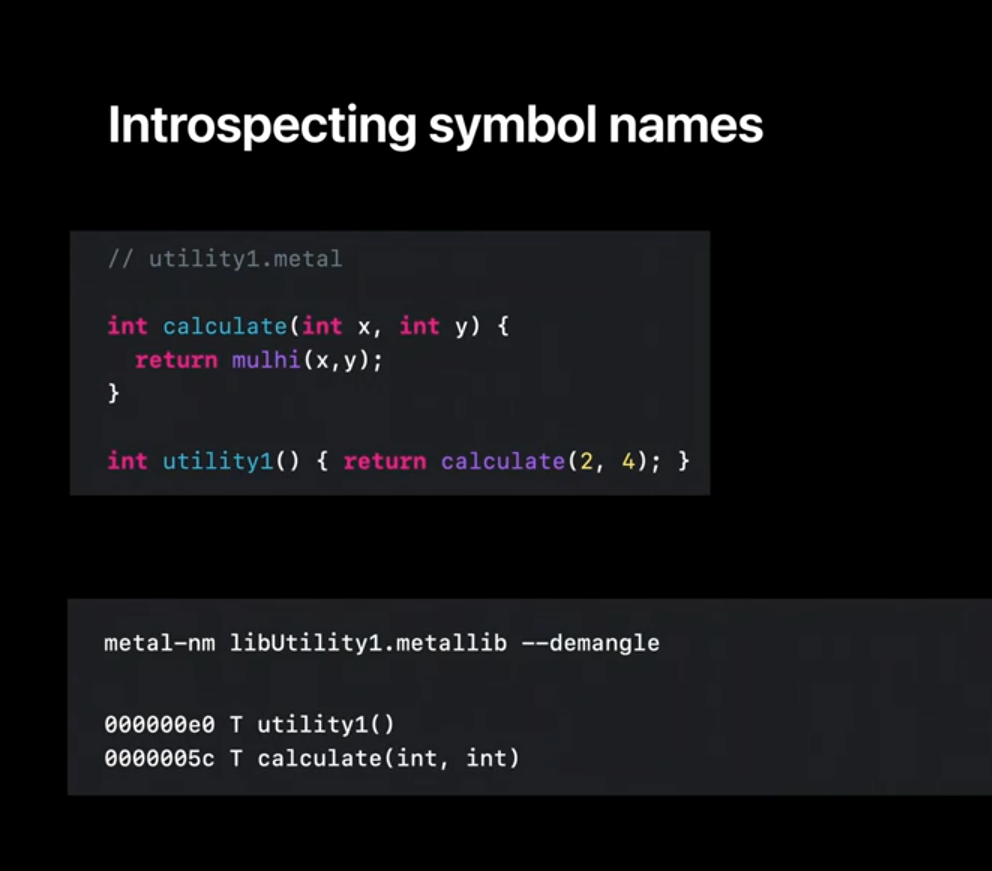

So why does the 'calculate' function participate in dynamic linking? That's because just like with the CPU compiler and linker, by default Metal exports all the symbols in your library. You can quickly check the symbols in your library using 'metal-nm' which like its CPU counterpart, lets you inspect the names of the symbols that are exported by your MetalLib.

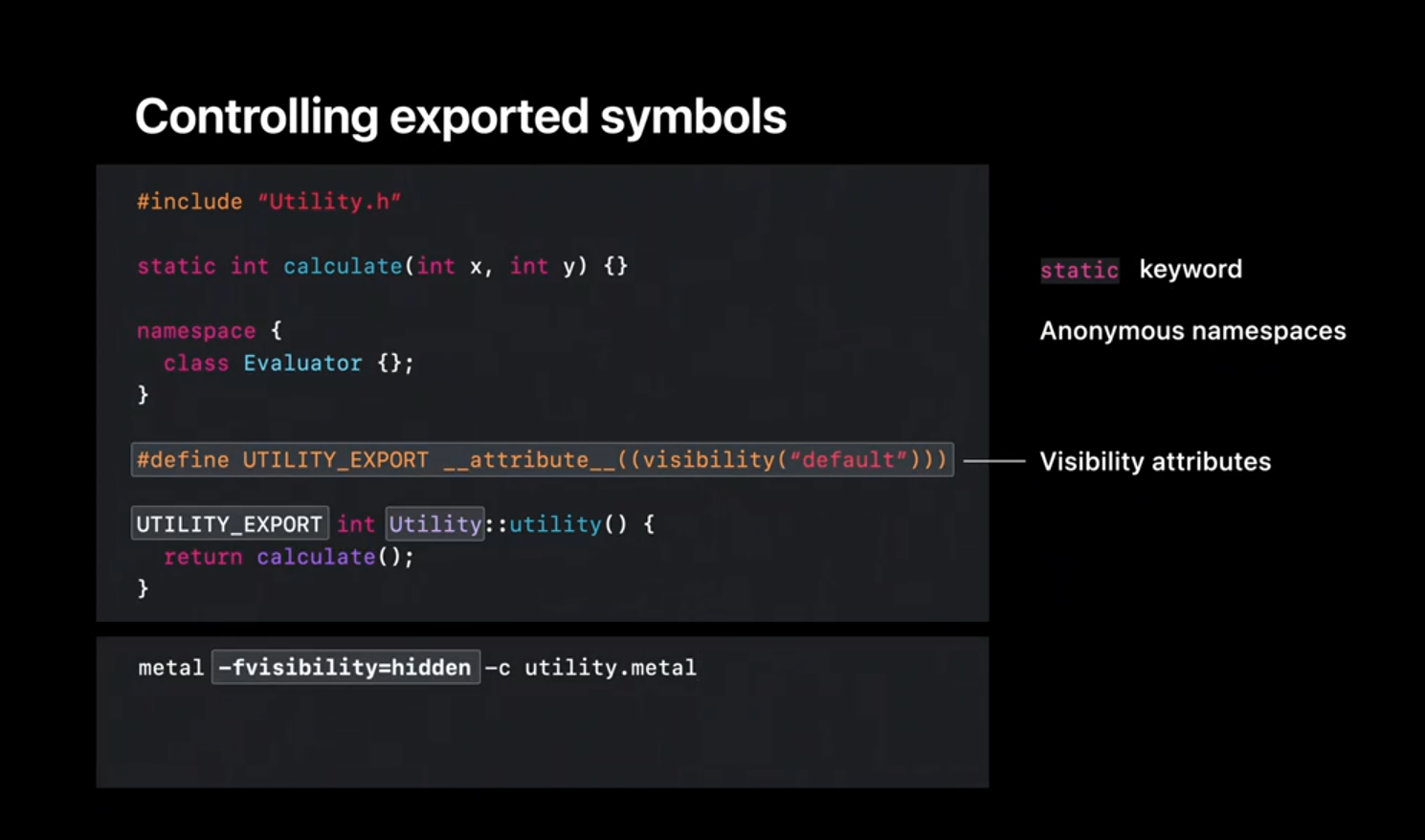

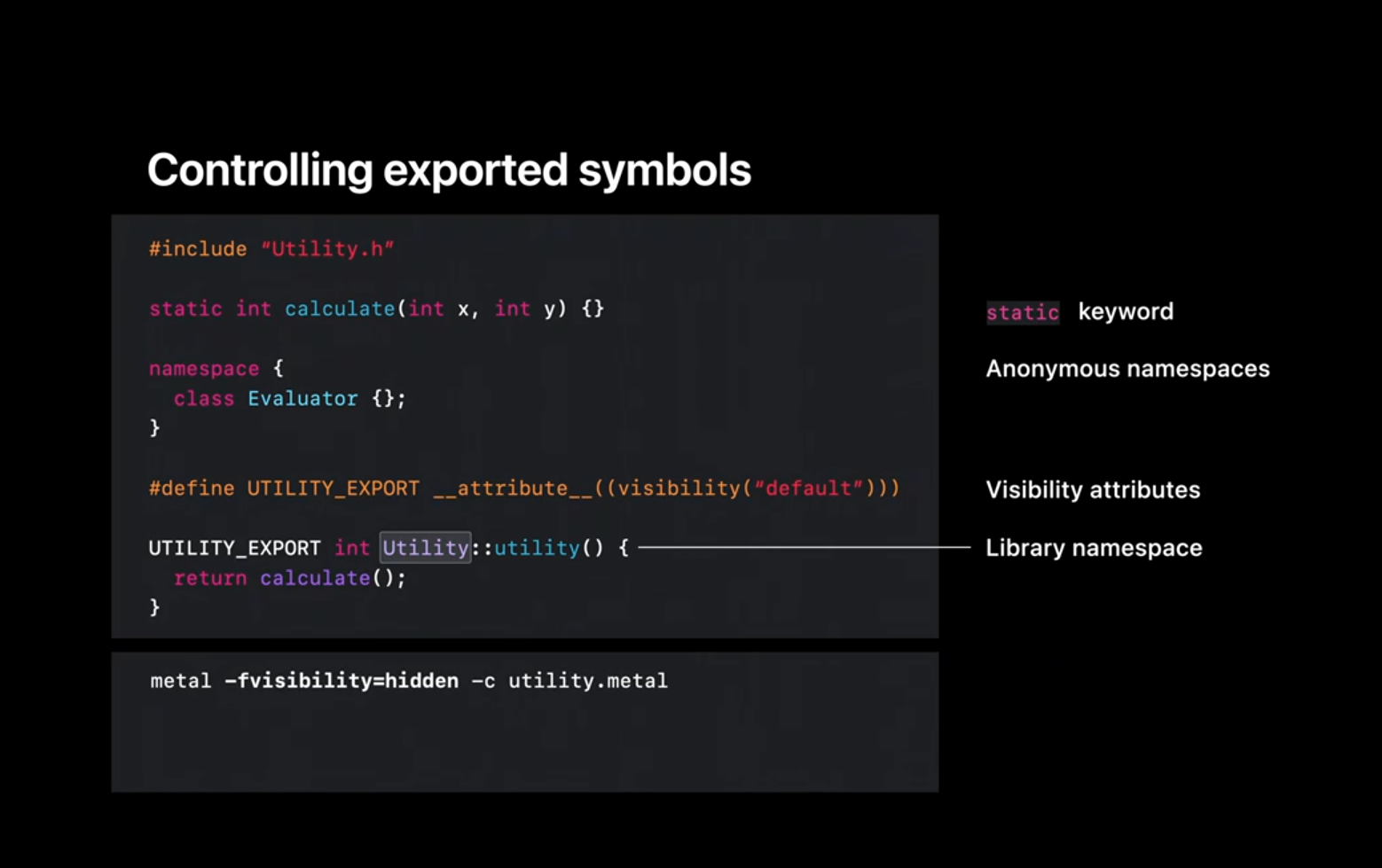

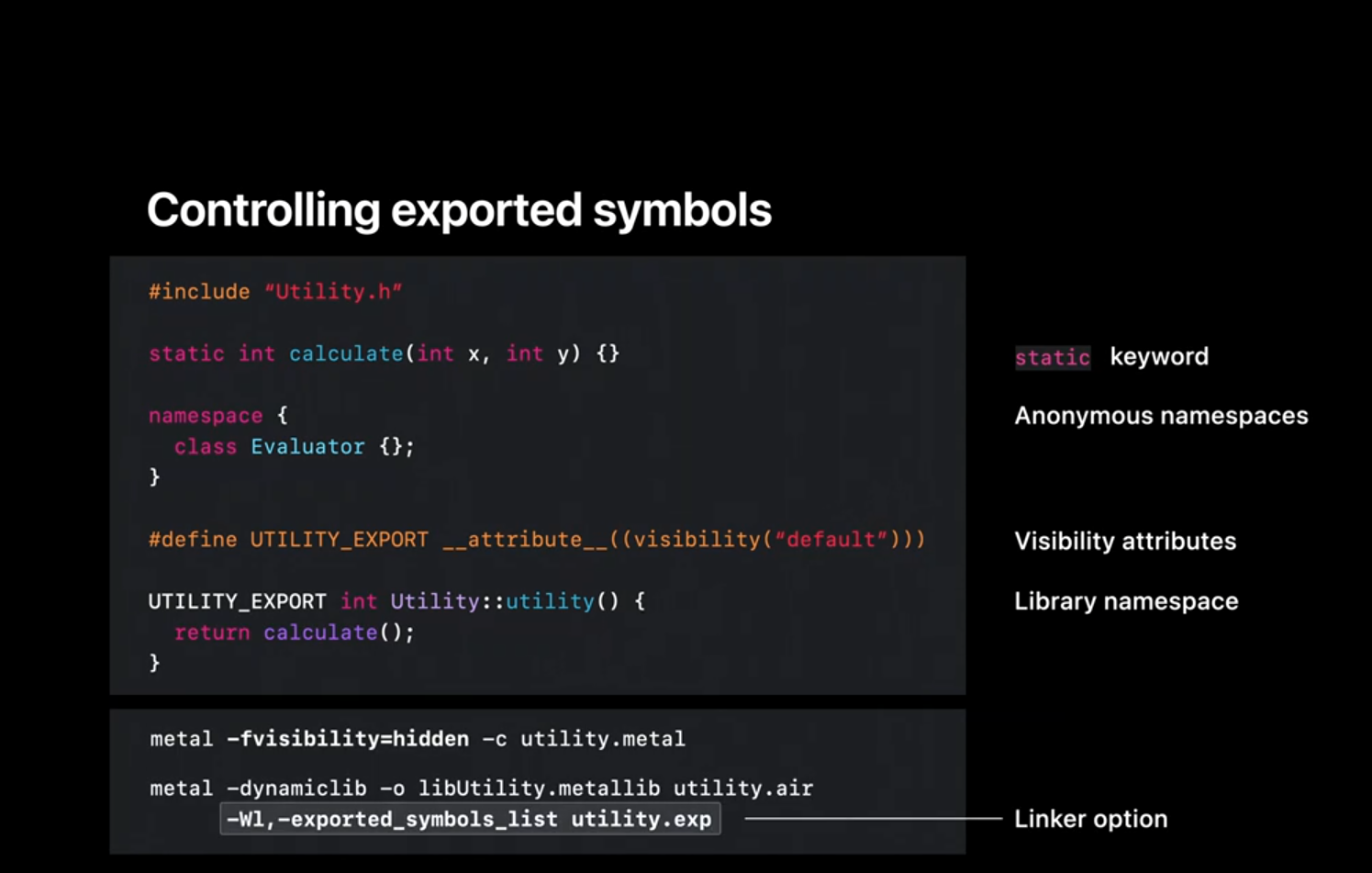

The question then is how do we control what symbols are exported by our library? Just like on the CPU side, you can use the static keyword, anonymous namespaces, and visibility attributes to control which symbols are exported by your library. It's also a good idea to use namespaces when defining your interfaces. And finally, we support the 'exported-symbols-list' linker option. For more details there's some great documentation on our developer website on Dynamic Libraries.
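To make those options concrete, the sketch below embeds a small piece of Metal Shading Language in a Swift string and compiles it as a dynamic library. All function names, the `utility` namespace, and the install name are invented for illustration, and the Clang-style `visibility("hidden")` spelling is an assumption about how the visibility attribute is written; check the Metal documentation for the exact form your toolchain accepts.

```swift
import Foundation
import Metal

// Hypothetical library source showing four ways to limit exported symbols.
let visibilitySource = """
#include <metal_stdlib>
using namespace metal;

// 1. static: internal linkage, not exported.
static float helperScale(float x) { return x * 0.5f; }

// 2. Anonymous namespace: also internal to this translation unit.
namespace {
    float helperBias() { return 0.25f; }
}

// 3. Hidden visibility: usable inside the library, not exported (assumed spelling).
__attribute__((visibility("hidden")))
float calculate(float x) { return helperScale(x) + helperBias(); }

// 4. Namespaced public interface: the one symbol meant to be exported.
namespace utility {
    float brighten(float x) { return calculate(x); }
}
"""

/// Compiles the source above as a dynamic library.
@available(macOS 11.0, iOS 14.0, *)
func makeVisibilityDemoDylib(device: MTLDevice) throws -> MTLDynamicLibrary {
    let options = MTLCompileOptions()
    options.libraryType = .dynamic
    options.installName = "@executable_path/libVisibilityDemo.metallib"
    let library = try device.makeLibrary(source: visibilitySource, options: options)
    return try device.makeDynamicLibrary(library: library)
}
```

With this layout, a second library that defines its own hidden or static `calculate` would no longer collide with this one at load time.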

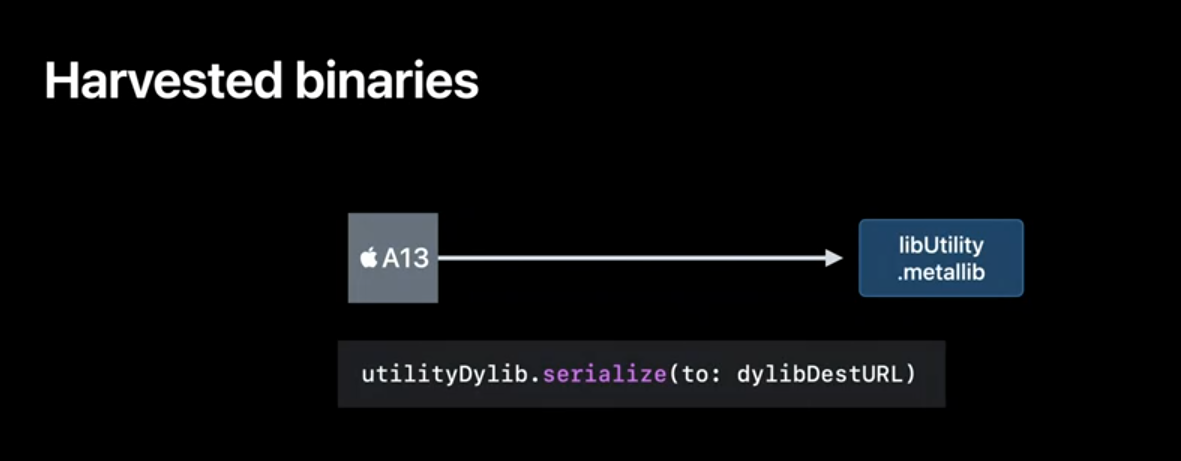

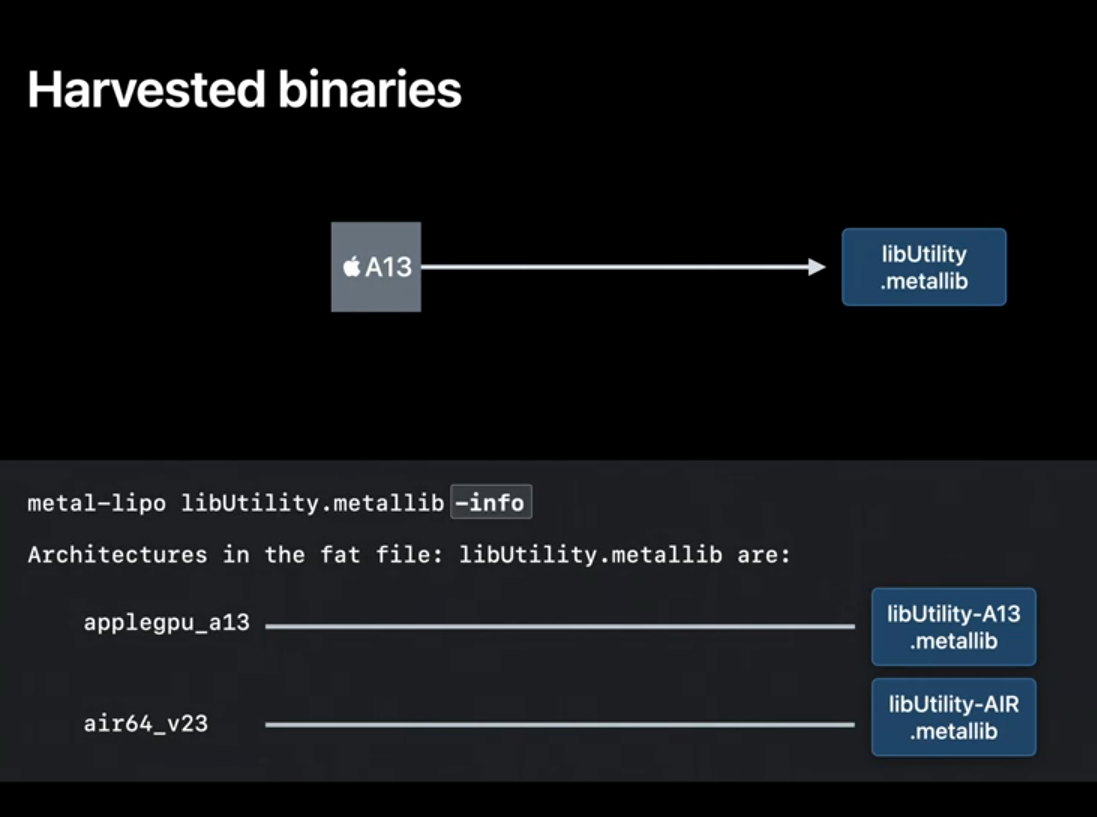

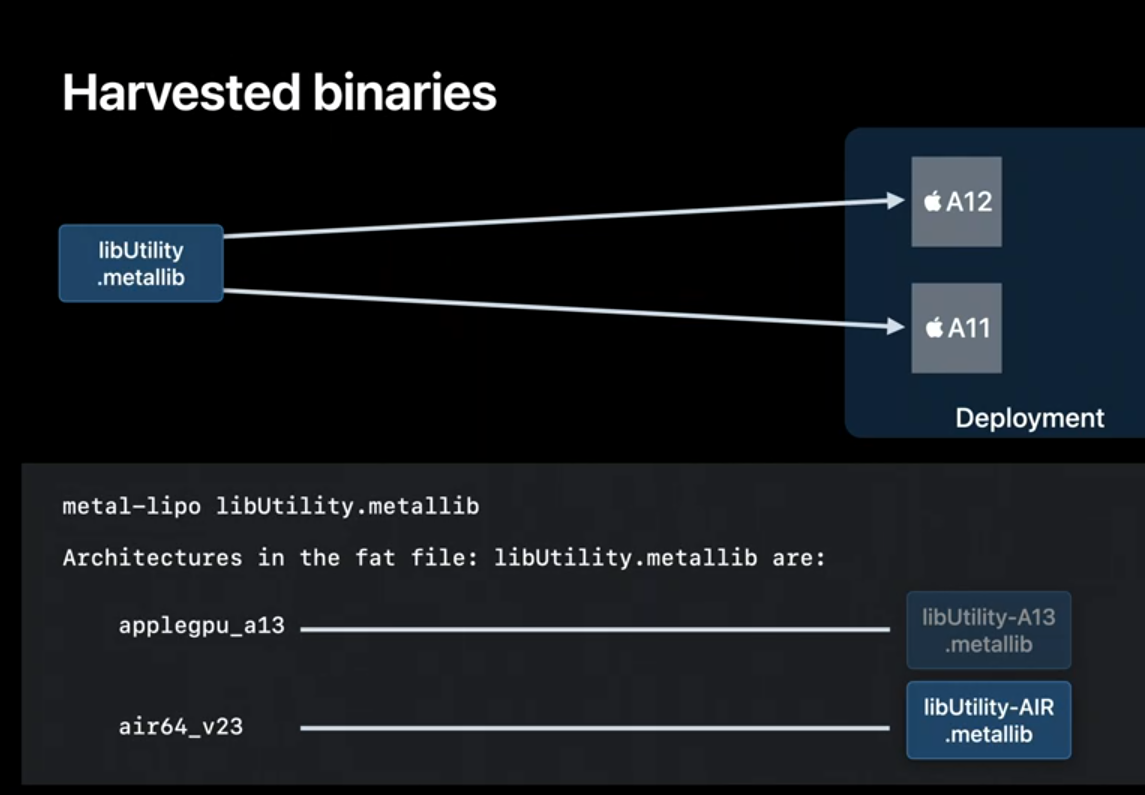

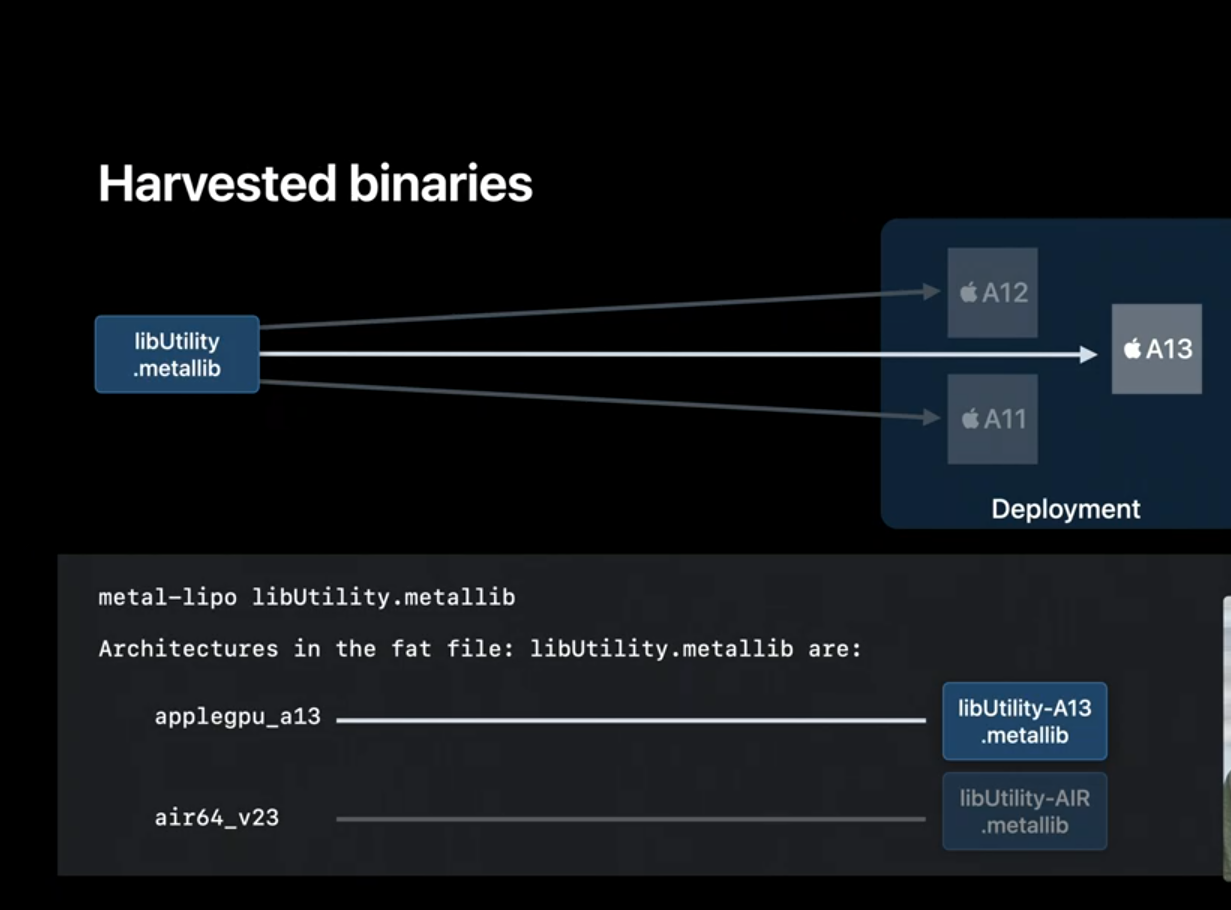

The other exciting concept that we introduced to you earlier was harvesting fully compiled binaries from the device. We've harvested such a MetalLib for libUtility on an A13 device using the Metal API that we saw in the previous section.

To work with such objects, there is a new tool called 'metal-lipo'. Let's use it with the 'info' option to peek into the MetalLib that we just harvested. The tool reports that this MetalLib contains two architectures. The way to think about that is that it is a fat binary that really contains two independent MetalLibs called slices: the A13 slice, which is back-end compiled, and the generic AIR slice.

When the harvested MetalLib is deployed on non-A13 devices, Metal will use the AIR slice just as it does today. That means spinning up the Metal Compiler Service and invoking the back-end compiler to build your pipeline. This allows the MetalLib to be used on all iOS devices, not just A13-based ones.

However, if the same MetalLib is downloaded on an A13 device, Metal will use the A13 slice, skip back-end compilation completely, and potentially improve your app's loading performance.

Now let's say you want to improve the experience of your app users that are also on A12 devices. Besides the A13 device let's also harvest a MetalLib from an A12 device.

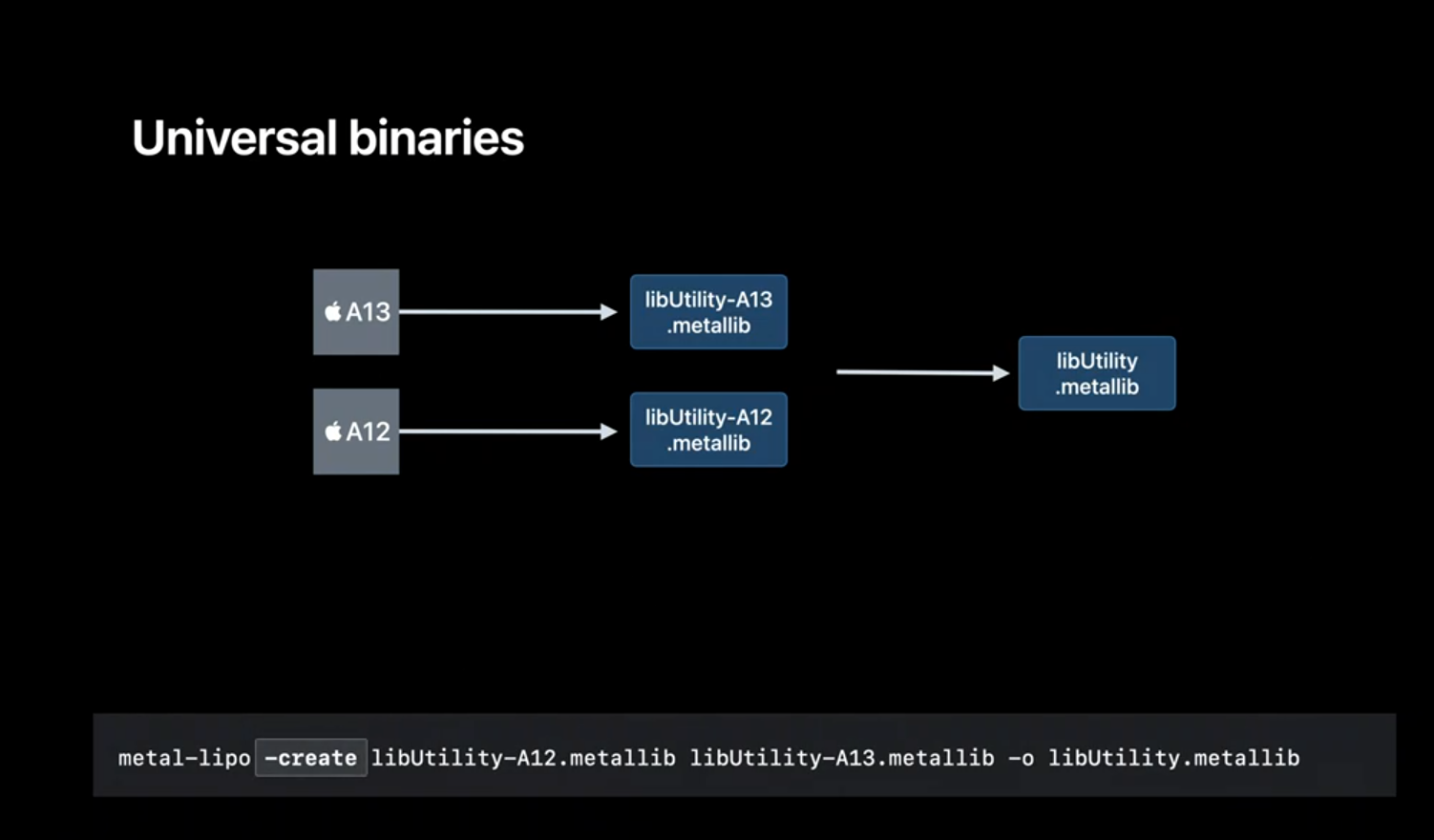

To simplify your App deployment you might want to bundle all the slices into a single MetalLib. 'metal-lipo' allows you to do just that and create an even fatter universal binary. This technique can even be used with Binary Archives. Obviously the more slices that you pack into your binary the larger your app bundle becomes. So keep that in mind when deciding which slices you want to pack into your universal binary.

So let's do a quick recap of the different workflows we've seen today. We started by using the Metal compiler to turn our Metal sources into AIR files. We then used 'metal-libtool' to create static libraries, a new workflow to replace your existing 'metal-ar' based one. We then built a new kind of MetalLib, a dynamic library. We also saw how to combine AIR, static, and dynamic libraries to create executable MetalLibs. Along the way we also saw using 'metal-nm' to inspect the symbols exported by a MetalLib. And finally we used 'metal-lipo' to work with slices in our harvested MetalLibs.

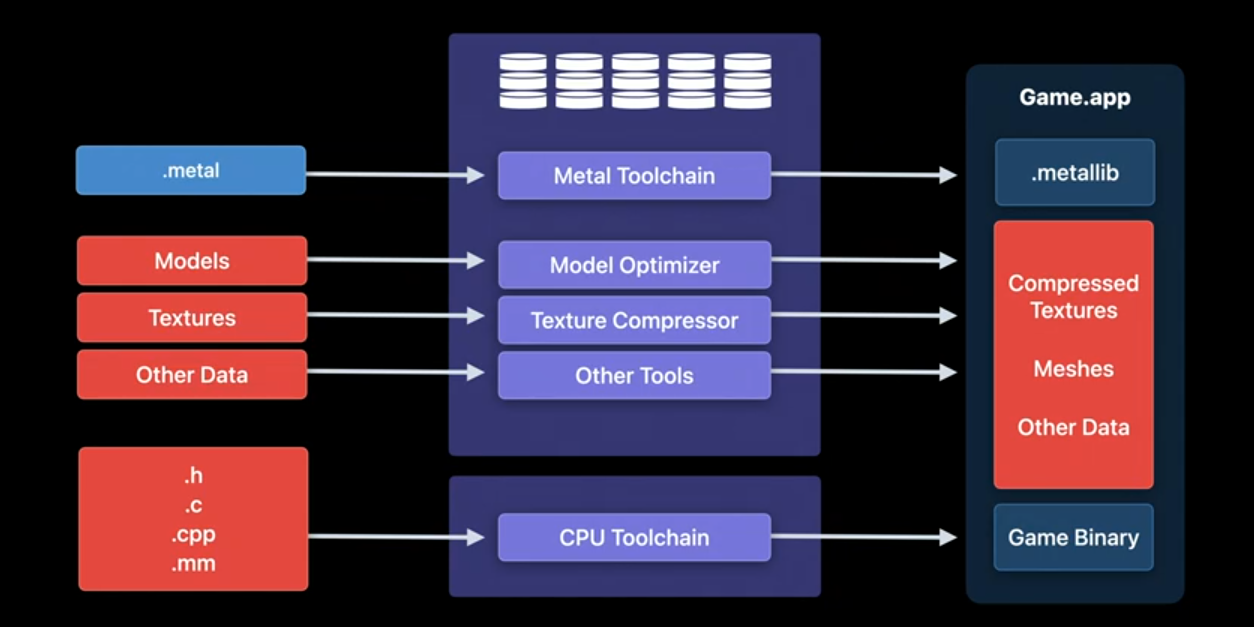

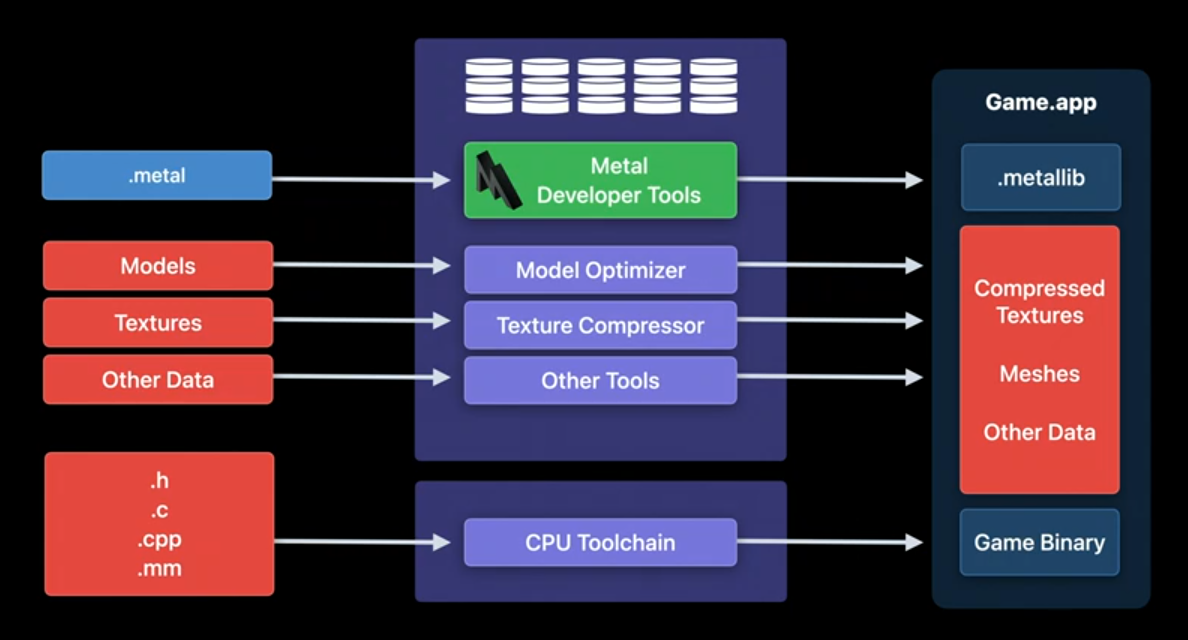

The last thing we want to show you is a use case shared with us by some of our game developers. Here's a high-level view of their workflow. As you can see, they use a variety of tools, including the CPU and GPU toolchains, to build the app bundle. In some cases developers have pooled their machines together into a server farm that they use to build their assets. This workflow works great, as long as these tools run on macOS.

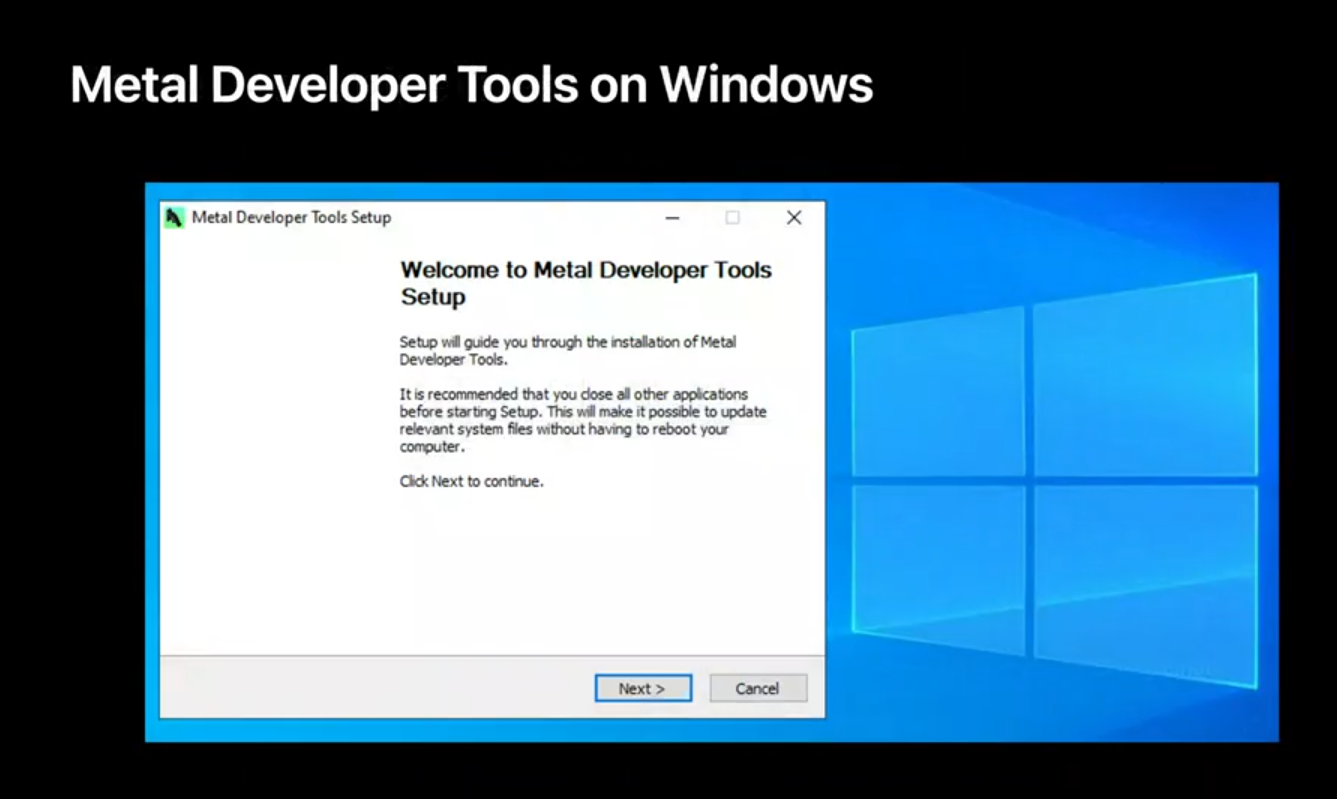

However, some of these developers have established game and graphics asset creation pipelines that are based on Microsoft Windows infrastructure. In order to support these developers, this year we are introducing the Metal Developer Tools on Windows. With this, you will now have the flexibility to build your MetalLibs targeting Apple platforms from macOS, Windows, or even a hybrid setup. The tools are available as a Windows installer and can be downloaded from the Apple developer website today. All the workflows that are supported by the toolchain we release with Xcode are also supported by the Windows-hosted tools. That brings us to the end of this session.

Let's recap what we covered here. We introduced you to Binary Archives a mechanism which you can employ to avoid spending time on back end compilation for some of your critical pipelines.

We then presented Dynamic Libraries in Metal as an efficient and flexible way to decouple your library code from your shaders.

And finally we went over some new and important compilation workflows that directly use the Metal Developer Tools. We hope this presentation gets you started with adopting the new compilation model for your new and existing workflows. Thanks for watching this session. And enjoy the rest of WWDC.

Although not all of this content is original, please credit the source when reposting: published by 电子设备中的画家 | 王烁 on October 22, 2021. Original link: (http://geekfaner.com/shineengine/WWDC2020_BuildGPUbinarieswithMetal.html)